- Apr 25, 2024

-

-

Alexandru Gheorghe authored

... a few sessions too late :(, this already happened on polkadot, so as of now there are no known relay-chains without async backing enabled in runtime, but let's fix it in case someone else wants to repeat our steps. Fixes: https://github.com/paritytech/polkadot-sdk/issues/4226 --------- Signed-off-by:

Alexandru Gheorghe <[email protected]>

-

- Apr 19, 2024

-

-

Andrei Eres authored

- Returned latency (with it, results are more stable) - The threshold is weakened - Increased number of runs

-

- Apr 18, 2024

-

-

Alexandru Gheorghe authored

The `next_retry_time` gets populated when a request receives a timeout or any other error. After that, `next_retry` checks all requests in the queue and returns the smallest one, which is then used to advance the main loop by creating a `Delay`:

```
futures_timer::Delay::new(instant.saturating_duration_since(Instant::now())).await,
```

However, when we retry a task for the first time we still keep it in the queue and mark it as in flight, so its `next_retry_time` is the oldest and is smaller than `now`. The `Delay` therefore always triggers immediately, which makes the main loop essentially busy-wait until we receive a response for the retried request. Fix this by excluding the tasks that are already in flight. --------- Signed-off-by:

Alexandru Gheorghe <[email protected]> Co-authored-by:

Andrei Sandu <[email protected]>
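A minimal sketch of the fix described above, assuming a simplified queue entry. `QueuedRequest`, its fields, and `retry_delay` are hypothetical names for illustration, not the actual types in the codebase.

```rust
use std::time::{Duration, Instant};

/// Hypothetical stand-in for a queued request; field names are illustrative.
struct QueuedRequest {
    /// Earliest time at which the request may be retried, set after an error.
    next_retry_time: Option<Instant>,
    /// True while a (re)sent request is still awaiting a response.
    in_flight: bool,
}

/// Returns the earliest retry time among requests that are NOT in flight.
/// Skipping in-flight requests avoids returning a stale instant that is
/// already in the past, which would make the caller's delay fire at once.
fn next_retry(queue: &[QueuedRequest]) -> Option<Instant> {
    queue
        .iter()
        .filter(|r| !r.in_flight)
        .filter_map(|r| r.next_retry_time)
        .min()
}

/// How long the main loop may sleep before the next retry is due.
/// `None` means no retry is pending, so no timer is needed.
fn retry_delay(queue: &[QueuedRequest], now: Instant) -> Option<Duration> {
    next_retry(queue).map(|t| t.saturating_duration_since(now))
}
```

With the in-flight filter removed, a retried request whose `next_retry_time` lies in the past would yield a zero delay on every loop iteration, which is the busy-wait the message describes.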

-

Alexander Samusev authored

cc https://github.com/paritytech/ci_cd/issues/974 --------- Co-authored-by: command-bot <> Co-authored-by:

Bastian Köcher <[email protected]>

-

- Apr 11, 2024

-

-

Andrei Eres authored

Implements the idea from https://github.com/paritytech/polkadot-sdk/pull/3899
- Removed latencies
- Number of runs reduced from 50 to 5; according to local runs, that is quite enough
- Network message is always sent in a spawned task, even if latency is zero. Without it, CPU time sometimes spikes.
- Removed the `testnet` profile because we probably don't need those debug additions.

After the local tests I can't say that it brings a significant improvement in the stability of the results. However, I believe it is worth trying and looking at the results over time.

-

- Apr 10, 2024

-

-

Alexandru Vasile authored

This tiny PR extends the `on_validated_block_announce` log with the bad PeerID. This is used to identify whether the peer ID is malicious by correlating with other logs (i.e. peer-set). While at it, removed the `\n` from a multiline log, which did not play well with [sub-triage-logs](https://github.com/lexnv/sub-triage-logs/tree/master). cc @paritytech/networking --------- Signed-off-by:

Alexandru Vasile <[email protected]> Co-authored-by:

Bastian Köcher <[email protected]>

-

- Apr 08, 2024

-

-

Aaro Altonen authored

[litep2p](https://github.com/altonen/litep2p) is a libp2p-compatible P2P networking library. It supports all of the features of `rust-libp2p` that are currently being utilized by Polkadot SDK. Compared to `rust-libp2p`, `litep2p` has a quite different architecture which is why the new `litep2p` network backend is only able to use a little of the existing code in `sc-network`. The design has been mainly influenced by how we'd wish to structure our networking-related code in Polkadot SDK: independent higher-levels protocols directly communicating with the network over links that support bidirectional backpressure. A good example would be `NotificationHandle`/`RequestResponseHandle` abstractions which allow, e.g., `SyncingEngine` to directly communicate with peers to announce/request blocks. I've tried running `polkadot --network-backend litep2p` with a few different peer configurations and there is a noticeable reduction in networking CPU usage. For high load (`--out-peers 200`), networking CPU usage goes down from ~110% to ~30% (80 pp) and for normal load (`--out-peers 40`), the usage goes down from ~55% to ~18% (37 pp). These should not be taken as final numbers because: a) there are still some low-hanging optimization fruits, such as enabling [receive window auto-tuning](https://github.com/libp2p/rust-yamux/pull/176 ), integrating `Peerset` more closely with `litep2p` or improving memory usage of the WebSocket transport b) fixing bugs/instabilities that incorrectly cause `litep2p` to do less work will increase the networking CPU usage c) verification in a more diverse set of tests/conditions is needed Nevertheless, these numbers should give an early estimate for CPU usage of the new networking backend. 
This PR consists of three separate changes:
* introduce a generic `PeerId` (wrapper around `Multihash`) so that we don't have to use `NetworkService::PeerId` in every part of the code that uses a `PeerId`
* introduce `NetworkBackend` trait, implement it for the libp2p network stack and make Polkadot SDK generic over `NetworkBackend`
* implement `NetworkBackend` for litep2p

The new library should be considered experimental, which is why `rust-libp2p` will remain the default option for the time being. This PR currently depends on the master branch of `litep2p`, but I'll cut a new release for the library once all review comments have been addressed. --------- Signed-off-by:

Alexandru Vasile <[email protected]> Co-authored-by:

Dmitry Markin <[email protected]> Co-authored-by:

Alexandru Vasile <[email protected]> Co-authored-by:

Alexandru Vasile <[email protected]>

-

Tsvetomir Dimitrov authored

With Coretime enabled we can no longer assume there is a static 1:1 mapping between core index and para id. This mapping should be obtained from the scheduler/claimqueue on a block-by-block basis. This PR modifies `para_id()` (from `CoreState`) to return the scheduled `ParaId` for occupied cores and removes its usages in the code. Closes https://github.com/paritytech/polkadot-sdk/issues/3948 --------- Co-authored-by:

Andrei Sandu <[email protected]>
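To illustrate looking up the para for a core per block instead of assuming a fixed mapping, here is a rough sketch. The `CoreIndex` and `ParaId` aliases, the map-of-queues shape of the claim queue, and `scheduled_para` are all assumptions made for this example, not the runtime API.

```rust
use std::collections::{BTreeMap, VecDeque};

// Hypothetical simplified aliases; the real types are newtypes.
type CoreIndex = u32;
type ParaId = u32;

/// Returns the para scheduled next on `core` according to a claim queue
/// fetched for the current block, or `None` if nothing is scheduled there.
fn scheduled_para(
    claim_queue: &BTreeMap<CoreIndex, VecDeque<ParaId>>,
    core: CoreIndex,
) -> Option<ParaId> {
    claim_queue.get(&core).and_then(|claims| claims.front().copied())
}
```

Because the queue is passed in rather than cached, the caller is expected to fetch it again for each relay-chain block.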

-

- Apr 03, 2024

-

-

Andrei Sandu authored

Remove `fetch_next_scheduled_on_core` in favor of new wrapper and methods for accessing it. --------- Signed-off-by:Andrei Sandu <[email protected]>

-

Andrei Sandu authored

fixes https://github.com/paritytech/polkadot-sdk/issues/3775 Additionally moves the claim queue fetch utilities into `subsystem-util`. TODO: - [x] fix tests - [x] add elastic scaling tests --------- Signed-off-by:

Andrei Sandu <[email protected]>

-

- Apr 02, 2024

-

-

Serban Iorga authored

Working towards migrating the `parity-bridges-common` repo inside `polkadot-sdk`. This PR upgrades some dependencies in order to align them with the versions used in `parity-bridges-common` Related to https://github.com/paritytech/parity-bridges-common/issues/2538

-

- Apr 01, 2024

-

-

Alexandru Gheorghe authored

Runtime release 1.2 bumps the ParachainHost APIs up to v10, so let's move all the released APIs out of the vstaging folder. This PR does not include any logic changes, only renaming of modules and some moving around. Signed-off-by:Alexandru Gheorghe <[email protected]>

-

Alexandru Gheorghe authored

The metric records the current protocol_version of the validator that just connected together with peer_map.len(), which counts all connected peers. As a result the metric is wrong: it does not tell us how many peers we have connected per version, because it always records the total number of peers. Fix this by counting by version inside peer_map. Additionally, because that might be a bit heavier than len(), publish it only on active leaves. --------- Signed-off-by:Alexandru Gheorghe <[email protected]>
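The per-version counting could look roughly like the sketch below. `PeerData`, the `u64` peer key, and `peers_by_version` are hypothetical simplifications; the real `peer_map` holds richer types.

```rust
use std::collections::HashMap;

/// Hypothetical peer record; only the field needed for the metric is shown.
struct PeerData {
    protocol_version: u32,
}

/// Counts connected peers per protocol version by walking the whole map.
/// This is O(n) in the number of peers, unlike `len()`, which is why it
/// makes sense to compute it only occasionally.
fn peers_by_version(peer_map: &HashMap<u64, PeerData>) -> HashMap<u32, usize> {
    let mut counts = HashMap::new();
    for peer in peer_map.values() {
        *counts.entry(peer.protocol_version).or_insert(0) += 1;
    }
    counts
}
```

Each entry of the result can then be published under its own version label, rather than attaching the total peer count to whichever version connected last.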

-

- Mar 31, 2024

-

-

Bastian Köcher authored

Closes: https://github.com/paritytech/polkadot-sdk/issues/3906

-

- Mar 27, 2024

-

-

Andrei Sandu authored

This works only for collators that implement the `collator_fn` allowing `collation-generation` subsystem to pull collations triggered on new heads. Also enables `request_v2::CollationFetchingResponse::CollationWithParentHeadData` for test adder/undying collators. TODO: - [x] fix tests - [x] new tests - [x] PR doc --------- Signed-off-by:Andrei Sandu <[email protected]>

-

- Mar 26, 2024

-

-

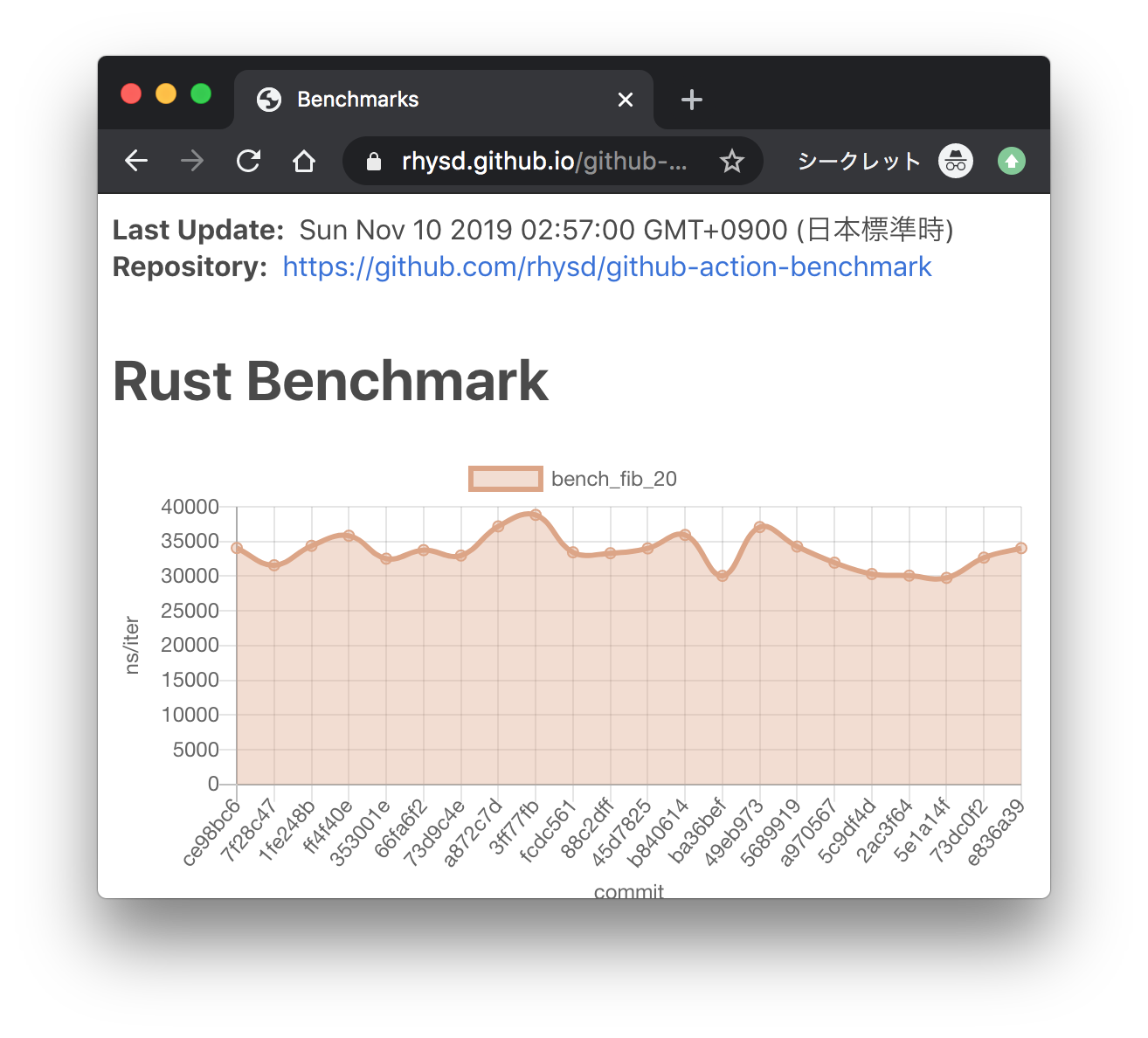

Andrei Eres authored

Here we add the ability to save subsystem benchmark results in JSON format to display them as graphs.

To draw graphs, the CI team will use [github-action-benchmark](https://github.com/benchmark-action/github-action-benchmark). Since we are using custom benchmarks, we need to prepare [a specific data type](https://github.com/benchmark-action/github-action-benchmark?tab=readme-ov-file#examples):

```
[
  {
    "name": "CPU Load",
    "unit": "Percent",
    "value": 50
  }
]
```

Then we'll get graphs like this: [A live page with graphs](https://benchmark-action.github.io/github-action-benchmark/dev/bench/) --------- Co-authored-by:

ordian <[email protected]>
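As an illustration of producing that data shape, here is a dependency-free sketch. `BenchEntry`, `escape`, and `to_json` are hypothetical helpers written for this example; the actual PR may well use a serialization library instead.

```rust
/// One data point with the three fields shown in the JSON sample above.
struct BenchEntry {
    name: String,
    unit: String,
    value: f64,
}

/// Escapes backslashes and double quotes. This is an assumption that labels
/// are plain text; control characters are not handled.
fn escape(s: &str) -> String {
    s.replace('\\', "\\\\").replace('"', "\\\"")
}

/// Serializes entries as a compact JSON array.
/// Note: non-finite values (NaN, infinity) would not be valid JSON.
fn to_json(entries: &[BenchEntry]) -> String {
    let items: Vec<String> = entries
        .iter()
        .map(|e| {
            format!(
                "{{\"name\":\"{}\",\"unit\":\"{}\",\"value\":{}}}",
                escape(&e.name),
                escape(&e.unit),
                e.value
            )
        })
        .collect();
    format!("[{}]", items.join(","))
}
```

The resulting string can be written to a file for the CI step to pick up.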

-

Dcompoze authored

**Update:** Pushed additional changes based on the review comments. **This pull request fixes various spelling mistakes in this repository.** Most of the changes are contained in the first **3** commits: - `Fix spelling mistakes in comments and docs` - `Fix spelling mistakes in test names` - `Fix spelling mistakes in error messages, panic messages, logs and tracing` Other source code spelling mistakes are separated into individual commits for easier reviewing: - `Fix the spelling of 'authority'` - `Fix the spelling of 'REASONABLE_HEADERS_IN_JUSTIFICATION_ANCESTRY'` - `Fix the spelling of 'prev_enqueud_messages'` - `Fix the spelling of 'endpoint'` - `Fix the spelling of 'children'` - `Fix the spelling of 'PenpalSiblingSovereignAccount'` - `Fix the spelling of 'PenpalSudoAccount'` - `Fix the spelling of 'insufficient'` - `Fix the spelling of 'PalletXcmExtrinsicsBenchmark'` - `Fix the spelling of 'subtracted'` - `Fix the spelling of 'CandidatePendingAvailability'` - `Fix the spelling of 'exclusive'` - `Fix the spelling of 'until'` - `Fix the spelling of 'discriminator'` - `Fix the spelling of 'nonexistent'` - `Fix the spelling of 'subsystem'` - `Fix the spelling of 'indices'` - `Fix the spelling of 'committed'` - `Fix the spelling of 'topology'` - `Fix the spelling of 'response'` - `Fix the spelling of 'beneficiary'` - `Fix the spelling of 'formatted'` - `Fix the spelling of 'UNKNOWN_PROOF_REQUEST'` - `Fix the spelling of 'succeeded'` - `Fix the spelling of 'reopened'` - `Fix the spelling of 'proposer'` - `Fix the spelling of 'InstantiationNonce'` - `Fix the spelling of 'depositor'` - `Fix the spelling of 'expiration'` - `Fix the spelling of 'phantom'` - `Fix the spelling of 'AggregatedKeyValue'` - `Fix the spelling of 'randomness'` - `Fix the spelling of 'defendant'` - `Fix the spelling of 'AquaticMammal'` - `Fix the spelling of 'transactions'` - `Fix the spelling of 'PassingTracingSubscriber'` - `Fix the spelling of 'TxSignaturePayload'` - `Fix the spelling of 
'versioning'` - `Fix the spelling of 'descendant'` - `Fix the spelling of 'overridden'` - `Fix the spelling of 'network'` Let me know if this structure is adequate. **Note:** The usage of the words `Merkle`, `Merkelize`, `Merklization`, `Merkelization`, `Merkleization`, is somewhat inconsistent but I left it as it is. ~~**Note:** In some places the term `Receival` is used to refer to message reception, IMO `Reception` is the correct word here, but I left it as it is.~~ ~~**Note:** In some places the term `Overlayed` is used instead of the more acceptable version `Overlaid` but I also left it as it is.~~ ~~**Note:** In some places the term `Applyable` is used instead of the correct version `Applicable` but I also left it as it is.~~ **Note:** Some usage of British vs American english e.g. `judgement` vs `judgment`, `initialise` vs `initialize`, `optimise` vs `optimize` etc. are both present in different places, but I suppose that's understandable given the number of contributors. ~~**Note:** There is a spelling mistake in `.github/CODEOWNERS` but it triggers errors in CI when I make changes to it, so I left it as it is.~~

-

- Mar 25, 2024

-

-

Andrei Eres authored

Adds availability-write regression tests. The results for the `availability-distribution` subsystem are volatile, so I had to reduce the precision of the test.

-

- Mar 19, 2024

-

-

ordian authored

On top of #3302. We want the validators to upgrade first before we add changes to the collation side to send the new variants, which is why this part is extracted into a separate PR. The detection of when to send the parent head is based on the core assignments at the relay parent of the candidate. We probably want to make it more flexible in the future, but for now, it will work for a simple use case when a para always has multiple cores assigned to it. --------- Signed-off-by:

Matteo Muraca <[email protected]> Signed-off-by:

dependabot[bot] <[email protected]> Co-authored-by:

Matteo Muraca <[email protected]> Co-authored-by:

dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com> Co-authored-by:

Juan Ignacio Rios <[email protected]> Co-authored-by:

Branislav Kontur <[email protected]> Co-authored-by:

Bastian Köcher <[email protected]>

-

Bastian Köcher authored

Closes: https://github.com/paritytech/polkadot-sdk/issues/3704

-

- Mar 15, 2024

-

-

ordian authored

Fixes #3128. This introduces a new variant for the collation response from the collator that includes the parent head data. For now, collators won't send this new variant. We'll need to change the collator side of the collator protocol to detect all the cores assigned to a para and send the parent head data in the case when it's more than 1 core. - [x] validate approach - [x] check head data hash

-

- Mar 13, 2024

-

-

-

Alexandru Gheorghe authored

This is printed every 10 minutes, and I see no reason why it shouldn't be in all the logs. It would give us valuable information about what is going on with node connectivity when validators come back to us to report issues. Signed-off-by:Alexandru Gheorghe <[email protected]>

-

- Mar 11, 2024

-

-

Andrei Eres authored

Fixes https://github.com/paritytech/polkadot-sdk/issues/3528

```rust
latency:
  mean_latency_ms = 30 // common sense
  std_dev = 2.0 // common sense
n_validators = 300 // max number of validators, from chain config
n_cores = 60 // 300/5
max_validators_per_core = 5 // default
min_pov_size = 5120 // max
max_pov_size = 5120 // max
peer_bandwidth = 44040192 // from the Parity's kusama validators
bandwidth = 44040192 // from the Parity's kusama validators
connectivity = 90 // we need to be connected to 90-95% of peers
```

-

- Mar 08, 2024

-

-

Alexandru Gheorghe authored

Looking at rococo-asset-hub https://github.com/paritytech/polkadot-sdk/issues/3519, there seem to be a lot of instances where collators did not advertise their collations. While there are multiple problems there, one of them is that we are connecting to and disconnecting from our assigned validators every block: on reconnect_timeout, every 4s, we call connect_to_validators, which produces 0 validators when all went well, so set_reserved_peers called from validator discovery disconnects all our peers. More details here: https://github.com/paritytech/polkadot-sdk/issues/3519#issuecomment-1972667343

Now, this shouldn't be a problem, but it stacks with an existing bug in our network stack: if we disconnect from a peer, the peer might not notice it, so it won't detect the reconnect either and won't send us the necessary view updates, so we won't advertise the collation to it. More details here: https://github.com/paritytech/polkadot-sdk/issues/3519#issuecomment-1972958276

To avoid hitting this condition that often, let's keep the peers in the reserved set for the entire duration we are allocated to a backing group. Backing group sizes (1 rococo, 3 kusama, 5 polkadot) are really small, so this shouldn't lead to that many connections. Additionally, the validators would disconnect us anyway if we don't advertise anything for 4 blocks.

## TODO
- [x] More testing.
- [x] Confirm on rococo that this is improving the situation. (It doesn't, but only because other things are going wrong there.) --------- Signed-off-by:

Alexandru Gheorghe <[email protected]>

-

- Mar 01, 2024

-

-

Andrei Eres authored

### What's been done
- `subsystem-bench` has been split into two parts: a cli benchmark runner and a library.
- The cli runner is quite simple. It just allows us to run `.yaml` based test sequences. Now it should only be used to run benchmarks during development.
- The library is used in the cli runner and in regression tests. Some code is changed to make the library independent of the runner.
- Added first regression tests for availability read and write that replicate existing test sequences.

### How we run regression tests
- Regression tests are simply rust integration tests without the harnesses.
- They should only be compiled under the `subsystem-benchmarks` feature to prevent them from running with other tests.
- This doesn't work when running tests with `nextest` in CI, so additional filters have been added to the `nextest` runs.
- Each benchmark run takes a different time in the beginning, so we "warm up" the tests until their CPU usage differs by only 1%.
- After the warm-up, we run the benchmarks a few more times and compare the average with the expected value within a given precision.

### What is still wrong?
- I haven't managed to set up approval voting tests. The spread of their results is too large and can't be narrowed down in a reasonable amount of time in the warm-up phase.
- The tests start an unconfigurable prometheus endpoint inside, which causes errors because they use the same 9999 port. I disable it with a flag, but I think it's better to extract the endpoint launching outside the test, as we already do with `valgrind` and `pyroscope`. But we still use `prometheus` inside the tests.

### Future work
* https://github.com/paritytech/polkadot-sdk/issues/3528
* https://github.com/paritytech/polkadot-sdk/issues/3529
* https://github.com/paritytech/polkadot-sdk/issues/3530
* https://github.com/paritytech/polkadot-sdk/issues/3531

--------- Co-authored-by:

Alexander Samusev <[email protected]>
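The warm-up and comparison steps might be sketched as follows. This assumes that "differs by only 1%" means the relative difference between two consecutive runs, and that the final check compares the mean against an expected value; `warm_up` and `within_precision` are hypothetical names, not the library's API.

```rust
/// Runs `bench` until two consecutive results differ by at most `tolerance`
/// (relative to the previous result) or `max_runs` is reached.
/// Returns the number of runs performed (at least one).
fn warm_up(mut bench: impl FnMut() -> f64, tolerance: f64, max_runs: usize) -> usize {
    let mut prev = bench();
    for run in 1..max_runs {
        let curr = bench();
        // Guard against division by zero for a zero previous result.
        let diff = (curr - prev).abs() / prev.abs().max(f64::EPSILON);
        if diff <= tolerance {
            return run + 1;
        }
        prev = curr;
    }
    max_runs.max(1)
}

/// True if the mean of `samples` lies within `precision` (relative) of
/// `expected`. An empty sample set never passes.
fn within_precision(samples: &[f64], expected: f64, precision: f64) -> bool {
    if samples.is_empty() {
        return false;
    }
    let mean = samples.iter().sum::<f64>() / samples.len() as f64;
    (mean - expected).abs() <= expected.abs() * precision
}
```

A regression test would call `warm_up` with a tolerance of `0.01`, then collect a few more samples and assert `within_precision` on them. A larger `precision` makes the test less sensitive to noisy subsystems.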

-

- Feb 26, 2024

-

-

Alexandru Gheorghe authored

Add more debug logs to understand if statement-distribution is in a bad state; this should be useful for debugging https://github.com/paritytech/polkadot-sdk/issues/3314 on production networks. Additionally, increase the number of parallel requests we make, since I noticed that requests take around 100ms on kusama, and the limit of 5 parallel requests was picked mostly at random, so there is no reason why we can't do more than that. --------- Signed-off-by:

Alexandru Gheorghe <[email protected]> Co-authored-by:

ordian <[email protected]>

-

- Feb 20, 2024

-

-

Oliver Tale-Yazdi authored

Lifting some more dependencies to the workspace. Just using the most-often updated ones for now. It can be reproduced locally.

```sh
# First you can check if there would be semver incompatible bumps (looks good in this case):
$ zepter transpose dependency lift-to-workspace --ignore-errors syn quote thiserror "regex:^serde.*"

# Then apply the changes:
$ zepter transpose dependency lift-to-workspace --version-resolver=highest syn quote thiserror "regex:^serde.*" --fix

# And format the changes:
$ taplo format --config .config/taplo.toml
```

--------- Signed-off-by:Oliver Tale-Yazdi <[email protected]>

-

- Feb 19, 2024

-

-

Alexandru Gheorghe authored

~The previous fix was actually incomplete because we update the authorities only in the situation where we decided to reconnect because we had a low connectivity issue. Now the problem is that update_authority_ids uses the list of connected peers, so on restart that doesn't contain anything, so calling it immediately after issue_connection_request won't detect all authorities, so we need to also check every block as the comment said, but that did not match the code.~ Actually, the fix was correct. The flow is as follows: if more than 1/3 of the authorities cannot be resolved, we set last_failure and call `ConnectToResolvedValidators`. We call UpdateAuthorities for all the authorities that are already connected and whose address we already know; for the ones that connect later, `PeerConnected` will have the AuthorityId field set, because it is already known, so approval-distribution will update its cached topology. --------- Signed-off-by:

Alexandru Gheorghe <[email protected]> Co-authored-by:

Alexander Samusev <[email protected]>

-

- Feb 12, 2024

-

-

Oliver Tale-Yazdi authored

Changes (partial https://github.com/paritytech/polkadot-sdk/issues/994):
- Set log to `0.4.20` everywhere
- Lift `log` to the workspace

Starting with a simpler one after seeing https://github.com/paritytech/polkadot-sdk/pull/2065 from @jsdw. This sets `default-features` to `false` in the root and then overwrites that in each crate to its original value. This is necessary since otherwise the `default` features are additive and it's impossible to disable them in the crate again once they are enabled in the workspace. I am using a tool to do this, so it's mostly a test to see that it works as expected. --------- Signed-off-by:

Oliver Tale-Yazdi <[email protected]>

-

Alexandru Gheorghe authored

On grid distribution, messages have two paths of reaching a node, so there is the possibility of a race when two peers send each other the same statement around the same time. Statement local_knowledge will then tell us that the peer should not have sent the statement, because we had already sent it to that peer. Fix it by also keeping separate track of the statements we received from a given peer and penalizing it only if it sends the same statement to us more than once. Fixes: https://github.com/paritytech/polkadot-sdk/issues/2346 Additionally, use different Cost labels for different paths to make it easier to debug things. --------- Signed-off-by:

Alexandru Gheorghe <[email protected]>
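A rough sketch of the duplicate tracking described above. `PeerId`, `StatementId`, and `ReceivedTracker` are hypothetical simplifications for illustration; the real subsystem tracks richer knowledge per peer.

```rust
use std::collections::{HashMap, HashSet};

// Hypothetical simplified identifiers.
type PeerId = u32;
type StatementId = u64;

/// Remembers which statements each peer has already sent to us.
#[derive(Default)]
struct ReceivedTracker {
    received: HashMap<PeerId, HashSet<StatementId>>,
}

impl ReceivedTracker {
    /// Records that `peer` sent us `statement`.
    /// Returns `true` only if this peer had already sent the same statement,
    /// which is the one case where a penalty would apply.
    fn note_received(&mut self, peer: PeerId, statement: StatementId) -> bool {
        // `insert` returns false when the value was already present.
        !self.received.entry(peer).or_default().insert(statement)
    }
}
```

A statement that crosses on the wire with our own copy is then accepted once without penalty, while a genuine repeat from the same peer is still caught.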

-

- Jan 31, 2024

-

-

Alexandru Gheorghe authored

Signed-off-by:Alexandru Gheorghe <[email protected]>

-

- Jan 30, 2024

-

-

Andrei Sandu authored

fixes #675 --------- Signed-off-by:Andrei Sandu <[email protected]>

-

- Jan 29, 2024

-

-

Alexandru Gheorghe authored

Topology arrives only at the beginning of each session, so we might lose it if prospective parachains was not enabled at the beginning of the session; cache it for later use. Fixes: https://github.com/paritytech/polkadot-sdk/issues/3058 --------- Signed-off-by:

Alexandru Gheorghe <[email protected]>

-

- Jan 26, 2024

-

-

Liam Aharon authored

Related https://github.com/paritytech/polkadot-sdk/issues/3032 --- Using https://github.com/liamaharon/cargo-workspace-version-tools/ `cargo run -- sync --path ../polkadot-sdk` --------- Signed-off-by:

Oliver Tale-Yazdi <[email protected]> Co-authored-by:

Oliver Tale-Yazdi <[email protected]>

-

- Jan 25, 2024

-

-

Alexandru Gheorghe authored

Fixes: https://github.com/paritytech/polkadot-sdk/issues/2138. Especially on restart, the AuthorityDiscovery cache is not populated, so we create an invalid topology and messages won't be routed correctly for the entire session. This PR tries to fix this by updating the topology as soon as we know the Authority/PeerId mapping, which should improve the situation dramatically. [This issue was hit yesterday](https://grafana.teleport.parity.io/goto/o9q2625Sg?orgId=1) on Westend and resulted in stalling finality. # TODO - [x] Unit tests - [x] Test impact on versi --------- Signed-off-by:

Alexandru Gheorghe <[email protected]>

-

- Jan 24, 2024

-

-

asynchronous rob authored

https://github.com/paritytech/polkadot-sdk/pull/3042/files#r1465115145 --------- Co-authored-by: command-bot <>

-

Bastian Köcher authored

As activation can fail, we ensure that we don't miss deactivation of leaves.

-

- Jan 23, 2024

-

-

Andrei Sandu authored

Found the issue while investigating the recent finality stall on Westend after upgrading to 1.6.0. Approval distribution aggression is supposed to trade off bandwidth and re-send assignments/approvals until enough approvals are received by at least 2/3 of the validators. This is supposed to be a catch-all mechanism for when network connectivity goes south or many validators reboot at the same time. This fix ensures that we always resend approvals starting with the first unfinalized block, even when it appears approved from the node's perspective. TODO: - [x] Versi test --------- Signed-off-by:Andrei Sandu <[email protected]>

-

- Jan 22, 2024

-

-

Davide Galassi authored

Step towards https://github.com/paritytech/polkadot-sdk/issues/1975 As reported in https://github.com/paritytech/polkadot-sdk/issues/1975#issuecomment-1774534225, I'd like to encapsulate crypto-related stuff in a dedicated folder. Currently all cryptographic primitive wrappers are scattered across `substrate/core`, which contains "misc core" stuff. To simplify the process, as a first step this PR moves the cryptographic hashing there. The `substrate/crypto` folder was already created to contain the `ec-utils` crate. Notes: - rename `sp-core-hashing` to `sp-crypto-hashing` - rename `sp-core-hashing-proc-macro` to `sp-crypto-hashing-proc-macro` - As the crate names changed, I took the liberty of restarting from version 0.1.0 for both crates --------- Co-authored-by:

Robert Hambrock <[email protected]>

-