[subsystem-benchmarks] Save results to json (#3829)

Here we add the ability to save subsystem benchmark results in JSON format so they can be displayed as graphs.

To draw the graphs, the CI team will use [github-action-benchmark](https://github.com/benchmark-action/github-action-benchmark).

Since we are using custom benchmarks, we need to prepare [a specific data type](https://github.com/benchmark-action/github-action-benchmark?tab=readme-ov-file#examples):

```json
[
    {
        "name": "CPU Load",
        "unit": "Percent",
        "value": 50
    }
]
```
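
As an illustration of producing that array, here is a minimal std-only Rust sketch. The names `ChartItem`, `to_benchmark_json` and `escape_json` are hypothetical and are not the actual subsystem-bench API; the real change may well use serde instead. It emits one object per metric with the three fields shown above and rejects NaN/infinite values, since JSON cannot represent them.

```rust
use std::fmt::Write;

/// Hypothetical type: one data point in the custom benchmark format
/// (`name`, `unit`, `value`) that github-action-benchmark reads.
#[derive(Debug, Clone)]
pub struct ChartItem {
    pub name: String,
    pub unit: String,
    pub value: f64,
}

/// Escape a string so it can be placed inside a JSON string literal.
fn escape_json(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \uXXXX form.
            c if (c as u32) < 0x20 => {
                write!(out, "\\u{:04x}", c as u32).unwrap();
            }
            c => out.push(c),
        }
    }
    out
}

/// Hypothetical helper: serialize items as a JSON array of
/// {"name", "unit", "value"} objects. Returns an error if any value
/// is NaN or infinite, because JSON has no encoding for those.
pub fn to_benchmark_json(items: &[ChartItem]) -> Result<String, String> {
    let mut out = String::from("[");
    for (i, item) in items.iter().enumerate() {
        if !item.value.is_finite() {
            return Err(format!("non-finite value for {}", item.name));
        }
        if i > 0 {
            out.push(',');
        }
        // `{{` and `}}` are literal braces; f64 Display prints 50.0 as "50".
        write!(
            out,
            "{{\"name\":\"{}\",\"unit\":\"{}\",\"value\":{}}}",
            escape_json(&item.name),
            escape_json(&item.unit),
            item.value
        )
        .unwrap();
    }
    out.push(']');
    Ok(out)
}
```

Writing the resulting string to a file (for example with `std::fs::write`) gives the action a file to point at; per its README, custom data like this is consumed with the `customSmallerIsBetter` or `customBiggerIsBetter` tool setting.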

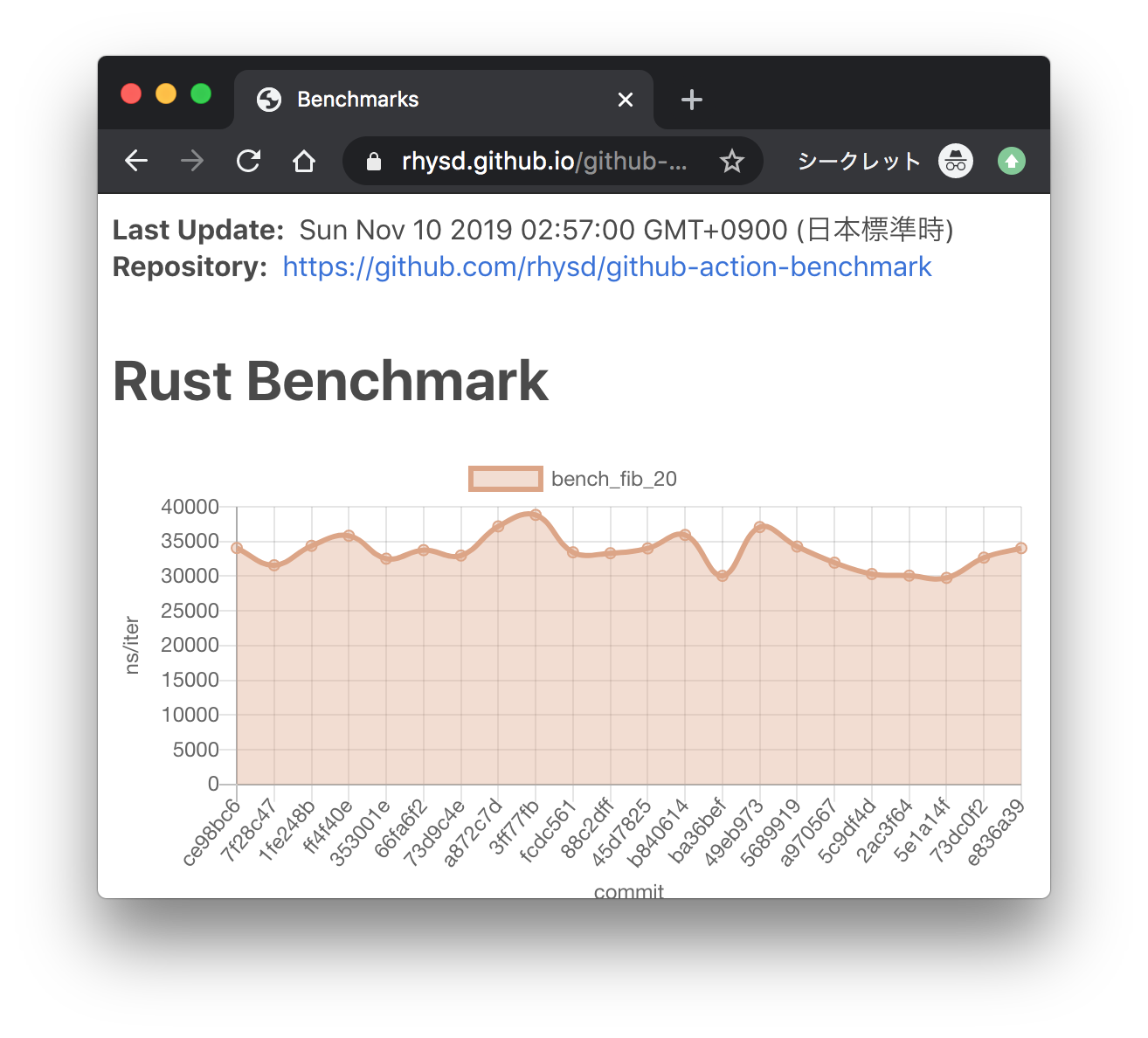

Then we'll get graphs like this:

[A live page with graphs](https://benchmark-action.github.io/github-action-benchmark/dev/bench/)

---------

Co-authored-by: ordian <write@reusable.software>

ordian <write@reusable.software>

Parent commit: 002d9260

Changed files:

- `Cargo.lock`: 1 addition, 0 deletions
- `polkadot/node/network/availability-distribution/benches/availability-distribution-regression-bench.rs`: 7 additions, 0 deletions
- `polkadot/node/network/availability-recovery/benches/availability-recovery-regression-bench.rs`: 7 additions, 0 deletions
- `polkadot/node/subsystem-bench/Cargo.toml`: 1 addition, 0 deletions
- `polkadot/node/subsystem-bench/src/lib/environment.rs`: 1 addition, 1 deletion
- `polkadot/node/subsystem-bench/src/lib/lib.rs`: 1 addition, 0 deletions
- `polkadot/node/subsystem-bench/src/lib/usage.rs`: 28 additions, 0 deletions
- `polkadot/node/subsystem-bench/src/lib/utils.rs`: 20 additions, 55 deletions
