- Mar 27, 2024

-

-

Alexander Samusev authored

PR adds CI jobs that collect subsystem-benchmarks results and publish them to gh-pages. cc https://github.com/paritytech/ci_cd/issues/934 cc @AndreiEres

-

Alexandru Vasile authored

The authority-discovery mechanism implements a few exponential timers for:

- publishing the authority records
  - goes from 2 seconds (when freshly booted) to 1 hour if the node is long-running
  - set to 1 hour after successfully publishing the authority record
- discovering other authority records
  - goes from 2 seconds (when freshly booted) to 10 minutes if the node is long-running

This PR resets the exponential publishing and discovery intervals to their defaults, ensuring that long-running nodes:

- will retry publishing the authority records as aggressively as freshly booted nodes
  - Currently, if a long-running node fails to publish the DHT record when the keys change (i.e. `DhtEvent::ValuePutFailed`), it will only retry after 1 hour
- will rediscover other authorities faster (since there is a chance that other authority keys changed)

The subp2p-explorer has difficulties discovering the authorities when the authority set changes in the first few hours. This might be entirely due to the recursive nature of the DHT and the time needed to propagate the records. However, there is a small chance that the authority publishing failed and is only retried in 1h.

Let me know if this makes sense

Alexandru Vasile <alexandru.vasile@parity.io> Co-authored-by:

Dmitry Markin <dmitry@markin.tech>
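The timer behaviour described in this commit can be illustrated with a minimal sketch. `ExpInterval` and its methods are hypothetical names for illustration, not the types used in the authority-discovery code; the sketch only assumes a delay that doubles up to a cap and can be reset to its starting value.

```rust
use std::time::Duration;

/// Hypothetical exponential interval: the delay doubles after each tick, up to `max`.
#[derive(Debug, Clone)]
pub struct ExpInterval {
    start: Duration,
    max: Duration,
    current: Duration,
}

impl ExpInterval {
    pub fn new(start: Duration, max: Duration) -> Self {
        Self { start, max, current: start }
    }

    /// Returns the delay to wait now and doubles the next one, capped at `max`.
    pub fn next_delay(&mut self) -> Duration {
        let delay = self.current;
        self.current = self.current.saturating_mul(2).min(self.max);
        delay
    }

    /// Drops back to the initial delay, so the next retry is as eager as on a fresh boot.
    pub fn reset(&mut self) {
        self.current = self.start;
    }
}
```

With `ExpInterval::new(Duration::from_secs(2), Duration::from_secs(3600))`, a long-running node sits at the one-hour cap until `reset()` is called, after which the delays start again from 2 seconds.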

-

Francisco Aguirre authored

`execute` and `send` try to decode the xcm in the parameters before reaching the filter line. The new extrinsics decode only after the filter line. These should be used instead of the old ones.

## TODO

- [x] Tests
- [x] Generate weights
- [x] Deprecation issue -> https://github.com/paritytech/polkadot-sdk/issues/3771
- [x] PRDoc
- [x] Handle error in pallet-contracts

This would make writing XCMs in PJS Apps more difficult, but here's the fix for that: https://github.com/polkadot-js/apps/pull/10350. Already deployed! https://polkadot.js.org/apps/#/utilities/xcm

Supersedes https://github.com/paritytech/polkadot-sdk/pull/1798/

---------

Co-authored-by: PG Herveou <pgherveou@gmail.com>
Co-authored-by: command-bot <>
Co-authored-by: Adrian Catangiu <adrian@parity.io>

-

Andrei Sandu authored

This is a long due chore ...

---------

Signed-off-by: Andrei Sandu <andrei-mihail@parity.io>
Co-authored-by: ordian <write@reusable.software>

-

Javier Viola authored

Bump version to `1.3.97` (follow-up from https://github.com/paritytech/polkadot-sdk/pull/3805)

---------

Co-authored-by: Bastian Köcher <git@kchr.de>

-

- Mar 26, 2024

-

-

ordian authored

Fixes #3826.

The docs on the `candidates` field of `BlockEntry` incorrectly stated that the candidates are sorted by core index. The (incorrect) optimization was introduced in #3747 based on this assumption. The actual ordering follows the order of `CandidateIncluded` events in the runtime. We revert this optimization here.

- [x] verify the underlying issue
- [x] add a regression test

---------

Co-authored-by: Bastian Köcher <git@kchr.de>
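To illustrate why the sortedness assumption matters, here is a small sketch of a lookup that stays correct for any ordering. The type aliases and `position_by_core` are simplified stand-ins invented for this example; they are not the actual `BlockEntry` code or the reverted optimization.

```rust
/// Simplified stand-ins for the real types (assumptions for illustration).
type CoreIndex = u32;
type CandidateHash = [u8; 4];

/// Finds the position of the candidate occupying `core` with a linear scan.
/// Unlike a binary search, this does not rely on the entries being sorted
/// by core index, so it is correct for event-ordered lists as well.
pub fn position_by_core(
    candidates: &[(CoreIndex, CandidateHash)],
    core: CoreIndex,
) -> Option<usize> {
    candidates.iter().position(|(c, _)| *c == core)
}
```

A binary search over the same slice would only be valid if the list were guaranteed to be sorted by core index, which is the assumption the commit above found to be wrong.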

-

Derek Colley authored

-

Pavel Orlov authored

The PR provides an API for obtaining:

- the weight required to execute an XCM message,
- a list of acceptable `AssetId`s for message execution payment,
- the cost of the weight in the specified acceptable `AssetId`.

It is meant to address an issue where one has to guess how much fee to pay for execution. Also, at the moment, a client has to guess which assets are acceptable for execution fee payment. See the related issue https://github.com/paritytech/polkadot-sdk/issues/690.

With this API, a client is supposed to query the list of the supported asset IDs (in the XCM version format the client understands), weigh the XCM program the client wants to execute and convert the weight into one of the acceptable assets. Note that the client is supposed to know what program will be executed on what chains. However, having a small companion JS library for the pallet-xcm and xtokens should be enough to determine what XCM programs will be executed and where (since these pallets compo...
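The query flow described above (list acceptable assets, weigh the program, convert the weight into a fee) might look roughly like the following sketch. The trait `XcmPaymentQuery`, the `FlatPricing` mock, and all method names are hypothetical and chosen for illustration; they are not the runtime API introduced by the PR.

```rust
/// Hypothetical client-side view of the three queries described above.
pub trait XcmPaymentQuery {
    fn acceptable_assets(&self) -> Vec<String>;
    /// Weighs a program; here a program is reduced to its instruction count.
    fn weigh(&self, instructions: usize) -> u64;
    /// Returns `None` when `asset` is not accepted for fee payment.
    fn weight_to_fee(&self, weight: u64, asset: &str) -> Option<u128>;
}

/// Mock chain with a flat price per unit of weight (assumption for the example).
pub struct FlatPricing {
    pub per_weight: u128,
    pub assets: Vec<String>,
}

impl XcmPaymentQuery for FlatPricing {
    fn acceptable_assets(&self) -> Vec<String> {
        self.assets.clone()
    }
    fn weigh(&self, instructions: usize) -> u64 {
        1_000 * instructions as u64
    }
    fn weight_to_fee(&self, weight: u64, asset: &str) -> Option<u128> {
        self.assets
            .iter()
            .any(|a| a == asset)
            .then(|| self.per_weight * weight as u128)
    }
}

/// Picks the first acceptable asset and prices the program in it.
pub fn estimate_fee<Q: XcmPaymentQuery>(q: &Q, instructions: usize) -> Option<(String, u128)> {
    let asset = q.acceptable_assets().into_iter().next()?;
    let fee = q.weight_to_fee(q.weigh(instructions), &asset)?;
    Some((asset, fee))
}
```

The point of the sketch is only the order of the calls: the client no longer guesses the asset or the amount, it asks for both.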

-

Bastian Köcher authored

In preparation for the merkleized metadata, we need to ensure that constants are actually constant. If we want to test the unsigned phase we could for example just disable signed voter. Or we add some extra mechanism to the pallet to disable the signed phase from time to time.

---------

Co-authored-by: Ankan <10196091+Ank4n@users.noreply.github.com>
Co-authored-by: Kian Paimani <5588131+kianenigma@users.noreply.github.com>

-

Tsvetomir Dimitrov authored

This PR notifies the broker pallet of any parachain slot swaps performed on the relay chain. This is achieved by registering an `OnSwap` hook for the `coretime` pallet. The hook sends an XCM message to the broker chain and invokes a new extrinsic `swap_leases`, which updates the `Leases` storage item (which keeps the legacy parachain leases).

I made two assumptions in this PR:

1. [`Leases`](https://github.com/paritytech/polkadot-sdk/blob/4987d798/substrate/frame/broker/src/lib.rs#L120) in the `broker` pallet and [`Leases`](https://github.com/paritytech/polkadot-sdk/blob/4987d798/polkadot/runtime/common/src/slots/mod.rs#L118) in the `slots` pallet are in sync.
2. The `swap_leases` extrinsic from the `broker` pallet can be triggered only by root or by the XCM message from the relay chain. If not, the extrinsic will generate an error and do nothing.

As a side effect of the changes, the `OnSwap` trait is moved from runtime/common/traits.rs to runtime/parachains. Otherwise it is not accessible from the `broker` pallet.

Closes https://github.com/paritytech/polkadot-sdk/issues/3552

TODOs:

- [x] Weights
- [x] Tests

---------

Co-authored-by: command-bot <>
Co-authored-by: eskimor <eskimor@users.noreply.github.com>
Co-authored-by: Bastian Köcher <git@kchr.de>
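As a rough illustration of what swapping two entries in a lease list involves, consider the sketch below. The `Lease` struct and this `swap_leases` function are assumptions made for the example; the storage layout and the extrinsic in the `broker` pallet may differ.

```rust
/// Hypothetical lease entry: which task holds a lease and until when.
#[derive(Debug, Clone, PartialEq)]
pub struct Lease {
    pub until: u32,
    pub task: u32,
}

/// Exchanges the two task ids wherever they appear, leaving all other
/// entries and every `until` value untouched.
pub fn swap_leases(leases: &mut [Lease], id: u32, other: u32) {
    for lease in leases.iter_mut() {
        if lease.task == id {
            lease.task = other;
        } else if lease.task == other {
            lease.task = id;
        }
    }
}
```

Swapping the ids in place, rather than removing and re-inserting entries, keeps the lease end times attached to their slots, which mirrors what a slot swap on the relay chain means.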

-

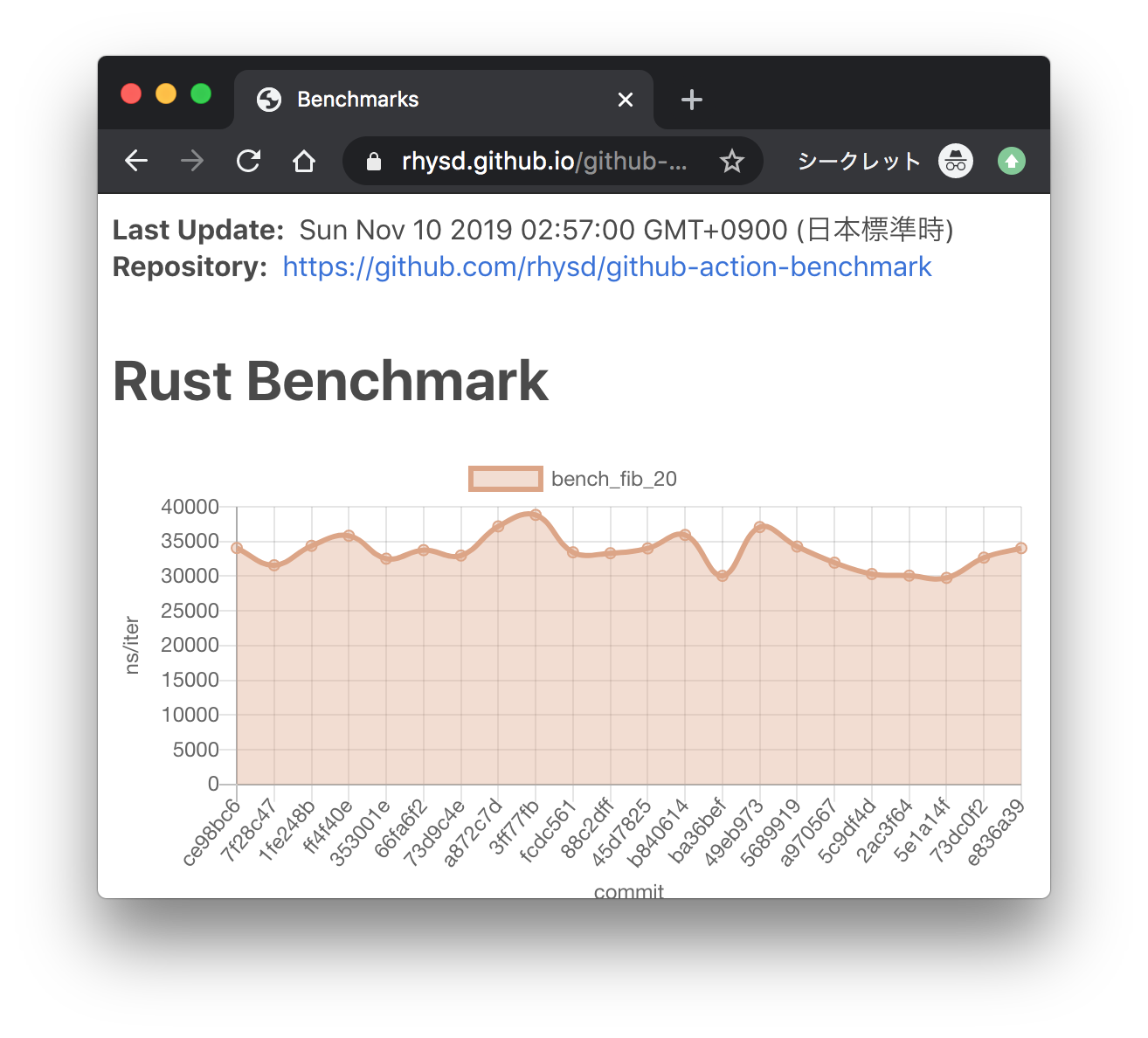

Andrei Eres authored

Here we add the ability to save subsystem benchmark results in JSON format to display them as graphs To draw graphs, CI team will use [github-action-benchmark](https://github.com/benchmark-action/github-action-benchmark). Since we are using custom benchmarks, we need to prepare [a specific data type](https://github.com/benchmark-action/github-action-benchmark?tab=readme-ov-file#examples): ``` [ { "name": "CPU Load", "unit": "Percent", "value": 50 } ] ``` Then we'll get graphs like this:  [A live page with graphs](https://benchmark-action.github.io/github-action-benchmark/dev/bench/) --------- Co-authored-by:ordian <write@reusable.software>
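A minimal sketch of producing data in the shape shown in this commit message, using only the standard library. `BenchEntry` and `to_json` are hypothetical names for this example; the actual subsystem-benchmark code is not shown here and may well use a serialization crate instead.

```rust
/// One data point with the three fields from the example: name, unit, value.
pub struct BenchEntry {
    pub name: String,
    pub unit: String,
    pub value: f64,
}

/// Escapes backslashes and double quotes only; control characters are not
/// handled, which is acceptable for simple metric names in this sketch.
fn escape(s: &str) -> String {
    s.replace('\\', "\\\\").replace('"', "\\\"")
}

/// Renders the entries as a JSON array. Non-finite values (NaN, infinity)
/// would yield invalid JSON and are assumed not to occur.
pub fn to_json(entries: &[BenchEntry]) -> String {
    let items: Vec<String> = entries
        .iter()
        .map(|e| {
            format!(
                r#"{{"name":"{}","unit":"{}","value":{}}}"#,
                escape(&e.name),
                escape(&e.unit),
                e.value
            )
        })
        .collect();
    format!("[{}]", items.join(","))
}
```

Writing the resulting string to a file gives the charting action one array per run to append to its history.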

-

Dcompoze authored

**Update:** Pushed additional changes based on the review comments.

**This pull request fixes various spelling mistakes in this repository.**

Most of the changes are contained in the first **3** commits:

- `Fix spelling mistakes in comments and docs`
- `Fix spelling mistakes in test names`
- `Fix spelling mistakes in error messages, panic messages, logs and tracing`

Other source code spelling mistakes are separated into individual commits for easier reviewing:

- `Fix the spelling of 'authority'`
- `Fix the spelling of 'REASONABLE_HEADERS_IN_JUSTIFICATION_ANCESTRY'`
- `Fix the spelling of 'prev_enqueud_messages'`
- `Fix the spelling of 'endpoint'`
- `Fix the spelling of 'children'`
- `Fix the spelling of 'PenpalSiblingSovereignAccount'`
- `Fix the spelling of 'PenpalSudoAccount'`
- `Fix the spelling of 'insufficient'`
- `Fix the spelling of 'PalletXcmExtrinsicsBenchmark'`
- `Fix the spelling of 'subtracted'`
- `Fix the spelling of 'CandidatePendingAvailability'`
- `Fix the spelling of 'exclusive'`
- `Fix the spelling of 'until'`
- `Fix the spelling of 'discriminator'`
- `Fix the spelling of 'nonexistent'`
- `Fix the spelling of 'subsystem'`
- `Fix the spelling of 'indices'`
- `Fix the spelling of 'committed'`
- `Fix the spelling of 'topology'`
- `Fix the spelling of 'response'`
- `Fix the spelling of 'beneficiary'`
- `Fix the spelling of 'formatted'`
- `Fix the spelling of 'UNKNOWN_PROOF_REQUEST'`
- `Fix the spelling of 'succeeded'`
- `Fix the spelling of 'reopened'`
- `Fix the spelling of 'proposer'`
- `Fix the spelling of 'InstantiationNonce'`
- `Fix the spelling of 'depositor'`
- `Fix the spelling of 'expiration'`
- `Fix the spelling of 'phantom'`
- `Fix the spelling of 'AggregatedKeyValue'`
- `Fix the spelling of 'randomness'`
- `Fix the spelling of 'defendant'`
- `Fix the spelling of 'AquaticMammal'`
- `Fix the spelling of 'transactions'`
- `Fix the spelling of 'PassingTracingSubscriber'`
- `Fix the spelling of 'TxSignaturePayload'`
- `Fix the spelling of 'versioning'`
- `Fix the spelling of 'descendant'`
- `Fix the spelling of 'overridden'`
- `Fix the spelling of 'network'`

Let me know if this structure is adequate.

**Note:** The usage of the words `Merkle`, `Merkelize`, `Merklization`, `Merkelization`, `Merkleization`, is somewhat inconsistent but I left it as it is.

~~**Note:** In some places the term `Receival` is used to refer to message reception, IMO `Reception` is the correct word here, but I left it as it is.~~

~~**Note:** In some places the term `Overlayed` is used instead of the more acceptable version `Overlaid` but I also left it as it is.~~

~~**Note:** In some places the term `Applyable` is used instead of the correct version `Applicable` but I also left it as it is.~~

**Note:** Both British and American English spellings, e.g. `judgement` vs `judgment`, `initialise` vs `initialize`, `optimise` vs `optimize` etc., are present in different places, but I suppose that's understandable given the number of contributors.

~~**Note:** There is a spelling mistake in `.github/CODEOWNERS` but it triggers errors in CI when I make changes to it, so I left it as it is.~~

-

Serban Iorga authored

Updating the bridges subtree hopefully just one last time in this formula in order to make the final migration less verbose.

-

Dcompoze authored

Fixes formatting for https://github.com/paritytech/polkadot-sdk/pull/3698

-

- Mar 25, 2024

-

-

Andrei Eres authored

Adds availability-write regression tests. The results for the `availability-distribution` subsystem are volatile, so I had to reduce the precision of the test.

-

Davide Galassi authored

Another simple refactoring to prune some duplicate code. Follow-up of: https://github.com/paritytech/polkadot-sdk/pull/3684

-

Oliver Tale-Yazdi authored

Devs seem to not realize that this should be filled out manually. The default is also often wrong.

-

Alin Dima authored

https://github.com/paritytech/polkadot-sdk/issues/3742

-

Serban Iorga authored

Related to https://github.com/paritytech/parity-bridges-common/issues/2538

This PR doesn't contain any functional changes.

The PR moves specific bridged chain definitions from `bridges/primitives` to the `bridges/chains` folder in order to facilitate the migration of the `parity-bridges-repo` into `polkadot-sdk`, as discussed in https://hackmd.io/LprWjZ0bQXKpFeveYHIRXw?view

Apart from this, it also includes some cosmetic changes to some `Cargo.toml` files as a result of running `diener workspacify`.

-

Alexander Samusev authored

cc https://github.com/paritytech/ci_cd/issues/943

-

- Mar 24, 2024

-

-

Bastian Köcher authored

-

- Mar 23, 2024

-

-

eskimor authored

Fixes https://github.com/paritytech/polkadot-sdk/issues/3762

---------

Co-authored-by: eskimor <eskimor@no-such-url.com>
Co-authored-by: Adrian Catangiu <adrian@parity.io>

-

- Mar 22, 2024

-

-

girazoki authored

Currently `transfer_assets` from pallet-xcm covers 4 main different transfer types:

- `LocalReserve`
- `DestinationReserve`
- `Teleport`
- `RemoteReserve`

For the first three, the local execution and the remote message sending are separated, and fees are deducted in pallet-xcm itself: https://github.com/paritytech/polkadot-sdk/blob/3410dfb3/polkadot/xcm/pallet-xcm/src/lib.rs#L1758.

For the 4th case, `RemoteReserve`, pallet-xcm still relies on the xcm-executor itself to send the message (through the `initiateReserveWithdraw` instruction). In this case, if delivery fees need to be charged, it is not possible to do so because the `jit_withdraw` mode has not been set.

This PR proposes to still use `initiateReserveWithdraw`, but to prepend a `setFeesMode { jit_withdraw: true }` to make sure delivery fees can be paid.

A test case is also added to cover the aforementioned case.

---------

Co-authored-by: Adrian Catangiu <adrian@parity.io>
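The idea of prepending a fees-mode instruction to a program can be sketched with a toy instruction set. The `Instruction` enum and `with_jit_fees` below are hypothetical and only mirror the names used in the description; they are not the real XCM types, and the exact position the pallet uses for the instruction is not shown here.

```rust
/// Toy instruction set for illustration (not the real XCM `Instruction`).
#[derive(Debug, Clone, PartialEq)]
pub enum Instruction {
    SetFeesMode { jit_withdraw: bool },
    WithdrawAsset(u128),
    InitiateReserveWithdraw { reserve: u32 },
}

/// Returns the program with `SetFeesMode { jit_withdraw: true }` placed first,
/// so that any later instruction that sends a message can pay delivery fees.
pub fn with_jit_fees(program: Vec<Instruction>) -> Vec<Instruction> {
    let mut out = Vec::with_capacity(program.len() + 1);
    out.push(Instruction::SetFeesMode { jit_withdraw: true });
    out.extend(program);
    out
}
```

Because the mode is set before the sending instruction runs, the executor in this model has a way to withdraw the delivery fee at the moment it is needed.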

-

PG Herveou authored

We do not need to make these traits generic over the `QueryId` type; we can just use the `QueryId` alias everywhere.

-

Dmitry Markin authored

Make sure public addresses explicitly set by the operator go first in the authority discovery DHT records. Also update the `Discovery` behavior to eliminate duplicates in the returned addresses. This PR should improve the situation with https://github.com/paritytech/polkadot-sdk/issues/3519. Obsoletes https://github.com/paritytech/polkadot-sdk/pull/3657.
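The ordering and de-duplication described in this commit can be sketched as follows. `order_addresses` is a hypothetical helper working on plain strings; the real code operates on multiaddresses inside the networking stack.

```rust
use std::collections::HashSet;

/// Puts operator-configured addresses first, then discovered ones, and drops
/// duplicates while keeping the first occurrence of each address.
pub fn order_addresses(explicit: &[String], discovered: &[String]) -> Vec<String> {
    let mut seen: HashSet<&str> = HashSet::new();
    let mut out = Vec::new();
    for addr in explicit.iter().chain(discovered) {
        // `insert` returns false if the address was already present.
        if seen.insert(addr.as_str()) {
            out.push(addr.clone());
        }
    }
    out
}
```

Keeping the first occurrence matters here: an address that is both configured and discovered stays in its configured (leading) position.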

-

Vincent Geddes authored

Bridging fees are calculated using a static ETH/DOT exchange rate that can deviate significantly from the real-world exchange rate. We therefore need to add a safety margin to the fee so that users almost always cover the cost of relaying.

# FAQ

> Why introduce a `multiplier` parameter instead of configuring an exchange rate which already has a safety factor applied?

When converting from ETH to DOT, we need to _divide_ the multiplier by the exchange rate, and to convert from DOT to ETH we need to _multiply_ the multiplier by the exchange rate.

> Other input parameters to the fee calculation can also deviate from real-world values. These include substrate weights, gas prices, and so on. Why does the multiplier introduced here not adjust those?

A single scalar multiplier won't be able to accommodate the different volatilities efficiently. For example, gas prices are much more volatile than exchange rates, and substrate weights hardly ever change. So the pricing config relating to weights and gas prices should already have some appropriate safety margin pre-applied.

# Detailed Changes:

* Added `multiplier` field to `PricingParameters`
* Outbound-queue fee is multiplied by `multiplier`
* This `multiplier` is synced to the Ethereum side
* Improved Runtime API for calculating outbound-queue fees. This API makes it much easier to configure parts of the system in preparation for launch.
* Improve and clarify code documentation

Upstreamed from https://github.com/Snowfork/polkadot-sdk/pull/127

---------

Co-authored-by: Clara van Staden <claravanstaden64@gmail.com>
Co-authored-by: Adrian Catangiu <adrian@parity.io>
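The first FAQ answer can be made concrete with a small worked sketch. It assumes the exchange rate is expressed as ETH per DOT and ignores token decimals; `Ratio`, `eth_to_dot`, and `dot_to_eth` are hypothetical names, not the bridge's actual pricing code.

```rust
/// A ratio `num / den`, used for both the exchange rate and the multiplier.
#[derive(Clone, Copy)]
pub struct Ratio {
    pub num: u128,
    pub den: u128,
}

/// ETH -> DOT: amount * multiplier / rate (rate given as ETH per DOT).
/// Plain multiplication is used; a production version would guard overflow.
pub fn eth_to_dot(amount: u128, rate: Ratio, multiplier: Ratio) -> u128 {
    amount * multiplier.num * rate.den / (multiplier.den * rate.num)
}

/// DOT -> ETH: amount * multiplier * rate.
pub fn dot_to_eth(amount: u128, rate: Ratio, multiplier: Ratio) -> u128 {
    amount * multiplier.num * rate.num / (multiplier.den * rate.den)
}
```

With a rate of 1/400 and a multiplier of 3/2, 10 units of ETH become 6000 units of DOT (4000 without the margin) and 4000 units of DOT become 15 units of ETH (10 without it). The margin raises the fee in both directions, which a single pre-adjusted exchange rate cannot do, because inflating the rate would raise one conversion and lower the other.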

-

Clara van Staden authored

## Bug Explanation

Adds a check that prevents finalized headers with a gap larger than the sync committee period from being imported, which could leave execution headers in the gap unprovable. The current version of the Ethereum client checks that there is a header in at least every sync committee period, but it doesn't check that the headers are within a sync period of each other. For example:

- Header 100 (sync committee period 1)
- Header 9000 (sync committee period 2) (8900 blocks apart)

These headers are in adjacent sync committee periods, but more than the sync committee period (8192 blocks) apart.

The reason we need a header at least every 8192 slots is that the header is used to prove messages within the last 8192 blocks. If we import header 9000 and then receive a message to be verified at header 200, the `block_roots` field of header 9000 won't contain the header needed to do the ancestry check.

## Environment

While running in Rococo, this edge case was discovered after the relayer was offline for a few days. It is unlikely, but not impossible, to happen again, and so it should be backported to polkadot-sdk 1.7.0 (so that [polkadot-fellows/runtimes](https://github.com/polkadot-fellows/runtimes) can be updated with the fix). Our Ethereum client has been operational on Rococo for the past few months, and this has been the only major issue discovered so far.

### Unrelated Change

An unrelated nit: Removes a leftover file that should have been deleted when the `parachain` directory was removed.

---------

Co-authored-by: claravanstaden <Cats 4 life!>
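The gap check described in this commit can be sketched in a few lines. `check_finalized_gap`, `ImportError`, and the exact boundary condition (a gap of exactly 8192 is accepted here) are assumptions for illustration; the real client's check may be formulated differently.

```rust
/// Slots per sync committee period, as stated in the description above.
pub const SLOTS_PER_SYNC_PERIOD: u64 = 8192;

#[derive(Debug, PartialEq)]
pub enum ImportError {
    /// The new header is not ahead of the latest imported one.
    NotNewer,
    /// The new header is more than one sync committee period ahead.
    GapTooLarge { gap: u64 },
}

/// Rejects a finalized header whose distance from the latest imported
/// finalized header exceeds one sync committee period.
pub fn check_finalized_gap(latest_imported_slot: u64, new_slot: u64) -> Result<(), ImportError> {
    if new_slot <= latest_imported_slot {
        return Err(ImportError::NotNewer);
    }
    let gap = new_slot - latest_imported_slot;
    if gap > SLOTS_PER_SYNC_PERIOD {
        return Err(ImportError::GapTooLarge { gap });
    }
    Ok(())
}
```

For the example in the description, `check_finalized_gap(100, 9000)` is rejected with a gap of 8900, even though the two headers fall in adjacent periods.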

-

Will | Paradox | ParaNodes.io authored

Good day,

I'm seeking to add the following bootnodes for Kusama and Polkadot's relay and system chains. The following commands can be used to test connectivity. All node keys are backed up.

Polkadot:

```
polkadot --chain polkadot --base-path /tmp/node --name "Boot" --reserved-only --reserved-nodes "/dns/boot-polkadot.luckyfriday.io/tcp/443/wss/p2p/12D3KooWAdyiVAaeGdtBt6vn5zVetwA4z4qfm9Fi2QCSykN1wTBJ" --no-hardware-benchmarks
```

Assethub-Polkadot:

```
polkadot-parachain --chain asset-hub-polkadot --base-path /tmp/node --name "Boot" --reserved-only --reserved-nodes "/dns/boot-polkadot-assethub.luckyfriday.io/tcp/443/wss/p2p/12D3KooWDR9M7CjV1xdjCRbRwkFn1E7sjMaL4oYxGyDWxuLrFc2J" --no-hardware-benchmarks
```

Bridgehub-Polkadot:

```
polkadot-parachain --chain bridge-hub-polkadot --base-path /tmp/node --name "Boot" --reserved-only --reserved-nodes "/dns/boot-polkadot-bridgehub.luckyfriday.io/tcp/443/wss/p2p/12D3KooWKf3mBXHjLbwtPqv1BdbQuwbFNcQQYxASS7iQ25264AXH" --no-hardware-benchmarks
```

Collectives-Polkadot:

```
polkadot-parachain --chain collectives-polkadot --base-path /tmp/node --name "Boot" --reserved-only --reserved-nodes "/dns/boot-polkadot-collectives.luckyfriday.io/tcp/443/wss/p2p/12D3KooWCzifnPooTt4kvTnXT7FTKTymVL7xn7DURQLsS2AKpf6w" --no-hardware-benchmarks
```

Kusama:

```
polkadot --chain kusama --base-path /tmp/node --name "Boot" --reserved-only --reserved-nodes "/dns/boot-kusama.luckyfriday.io/tcp/443/wss/p2p/12D3KooWS1Lu6DmK8YHSvkErpxpcXmk14vG6y4KVEFEkd9g62PP8" --no-hardware-benchmarks
```

Assethub-Kusama:

```
polkadot-parachain --chain asset-hub-kusama --base-path /tmp/node --name "Boot" --reserved-only --reserved-nodes "/dns/boot-kusama-assethub.luckyfriday.io/tcp/443/wss/p2p/12D3KooWSwaeFs6FNgpgh54fdoxSDAA4nJNaPE3PAcse2GRrG7b3" --no-hardware-benchmarks
```

Bridgehub-Kusama:

```
polkadot-parachain --chain bridge-hub-kusama --base-path /tmp/node --name "Boot" --reserved-only --reserved-nodes "/dns/boot-kusama-bridgehub.luckyfriday.io/tcp/443/wss/p2p/12D3KooWQybw6AFmAvrFfwUQnNxUpS12RovapD6oorh2mAJr4xyd" --no-hardware-benchmarks
```

Co-authored-by: Bastian Köcher <git@kchr.de>

-

- Mar 21, 2024

-

-

Alejandro Martinez Andres authored

Based on issue [#2512](https://github.com/paritytech/polkadot-sdk/issues/2512), it seems that some ecosystem teams are using these networks to set up their staging environments and test certain use cases, some of them involving sending XCMs from the relay with origins not allowed in the current configuration. This change reverts the configuration of `SendXcmOrigin`.

---------

Co-authored-by: Adrian Catangiu <adrian@parity.io>

-

Tsvetomir Dimitrov authored

Make taplo happy

-

kvalerio authored

There was a typo, so now, there's no more typo.

Co-authored-by: Liam Aharon <liam.aharon@hotmail.com>

-

ordian authored

Small refactoring to reduce the algorithmic complexity of the initial message distribution in approval voting after a sync from O(n_candidates ^ 2) to O(n_candidates).

-

Alin Dima authored

Changes needed to implement the runtime part of elastic scaling: https://github.com/paritytech/polkadot-sdk/issues/3131, https://github.com/paritytech/polkadot-sdk/issues/3132, https://github.com/paritytech/polkadot-sdk/issues/3202

Also fixes https://github.com/paritytech/polkadot-sdk/issues/3675

TODOs:

- [x] storage migration
- [x] optimise process_candidates from O(N^2)
- [x] drop backable candidates which form cycles
- [x] fix unit tests
- [x] add more unit tests
- [x] check the runtime APIs which use the pending availability storage. We need to expose all of them, see https://github.com/paritytech/polkadot-sdk/issues/3576
- [x] optimise the candidate selection. we're currently picking randomly until we satisfy the weight limit. we need to be smart about not breaking candidate chains while being fair to all paras - https://github.com/paritytech/polkadot-sdk/pull/3573

Relies on the changes made in https://github.com/paritytech/polkadot-sdk/pull/3233 in terms of the inclusion policy and the candidate ordering

---------

Signed-off-by: alindima <alin@parity.io>
Co-authored-by: command-bot <>
Co-authored-by: eskimor <eskimor@users.noreply.github.com>

-

Egor_P authored

This PR backports small reformatting of the release notes templates.

-

Egor_P authored

This PR backports:

- node version bump
- `spec_version` bump
- reordering of the `prdocs` to the appropriate folder

from the `1.9.0` release branch

-

gupnik authored

Step in https://github.com/paritytech/polkadot-sdk/issues/3688

-

- Mar 20, 2024

-

-

s0me0ne-unkn0wn authored

This PR proposes enabling PoV reclaim on the `rococo-parachain` testchain to streamline testing and development of high-TPS stuff.

-

eskimor authored

We witnessed really poor performance on Rococo, where we ended up with 50 on-demand cores. This was due to the fact that for each core the full queue was processed. With this change, full queue processing will happen far less often (most of the time the complexity is O(1) or O(log(n))), and if it happens, then only for one core (in expectation).

Also, the spot price is now updated before each order to ensure economic back pressure.

TODO:

- [x] Implement
- [x] Basic tests
- [x] Add more tests (see todos)
- [x] Run benchmark to confirm better performance, first results suggest > 100x faster.
- [x] Write migrations
- [x] Bump scale-info version and remove patch in Cargo.toml
- [x] Write PR docs: on-demand performance improved, more on-demand cores are no longer problematic. If need be, the max queue size can also be increased again. (Maybe not to 10k)

Optional: Performance can be improved even more if we call `pop_assignment_for_core()` before calling `report_processed` (avoiding needless affinity drops). The effect gets smaller the larger the claim queue, and I would only go for it if it does not add complexity to the scheduler.

---------

Co-authored-by: eskimor <eskimor@no-such-url.com>
Co-authored-by: antonva <anton.asgeirsson@parity.io>
Co-authored-by: command-bot <>
Co-authored-by: Anton Vilhelm Ásgeirsson <antonva@users.noreply.github.com>
Co-authored-by: ordian <write@reusable.software>

-

bader y authored

_This PR is being continued from https://github.com/paritytech/polkadot-sdk/pull/2206, which was closed when the developer_hub was merged._

closes https://github.com/paritytech/polkadot-sdk-docs/issues/44

---

# Description

This PR adds a reference document to the `developer-hub` crate (see https://github.com/paritytech/polkadot-sdk/pull/2102). This specific reference document covers defensive programming practices common within the context of developing a runtime with Substrate.

In particular, this covers the following areas:

- Default behavior of how Rust deals with numbers in general
- How to deal with floating point numbers in runtime / fixed point arithmetic
- How to deal with Integer overflows
- General "safe math" / defensive programming practices for common pallet development scenarios
- Defensive traits that exist within Substrate, i.e., `defensive_saturating_add`, `defensive_unwrap_or`
- More general defensive programming examples (keep it concise)
- Link to relevant examples where these practices are actually in production / being used
- Unwrapping (or rather lack thereof) 101

todo
--

- [x] Apply feedback from previous PR
- [x] This may warrant a PR to append some of these docs to `sp_arithmetic`

---------

Co-authored-by: Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io>
Co-authored-by: Gonçalo Pestana <g6pestana@gmail.com>
Co-authored-by: Kian Paimani <5588131+kianenigma@users.noreply.github.com>
Co-authored-by: Francisco Aguirre <franciscoaguirreperez@gmail.com>
Co-authored-by: Radha <86818441+DrW3RK@users.noreply.github.com>
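A few of the practices listed in this commit message can be shown with plain standard-library integer methods. The function names below are hypothetical; the Substrate-specific `defensive_*` traits mentioned above are not used here, only `checked_add`, `saturating_add`, and `checked_div` from Rust's primitive integers.

```rust
/// Adds a deposit to a balance, returning `None` on overflow instead of
/// wrapping (release builds) or panicking (debug builds).
pub fn checked_deposit(balance: u32, amount: u32) -> Option<u32> {
    balance.checked_add(amount)
}

/// Clamps at `u32::MAX` instead of overflowing; useful when a capped value
/// is an acceptable outcome.
pub fn saturating_deposit(balance: u32, amount: u32) -> u32 {
    balance.saturating_add(amount)
}

/// Avoids `unwrap()` and division by zero by falling back to 0 when
/// `parts` is 0.
pub fn share(total: u32, parts: u32) -> u32 {
    total.checked_div(parts).unwrap_or(0)
}
```

Which variant fits depends on the scenario: `checked_*` lets the caller surface an error, while `saturating_*` silently caps the result, so it should only be used where capping cannot hide a real bug.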

-

slicejoke authored

-