- Apr 12, 2024

-

-

Branislav Kontur authored

This PR mainly removes `xcm::v3` stuff from `assets-common` to make it more generic and facilitate the transition to newer XCM versions. Some of the implementations here used hard-coded `xcm::v3::Location`, but now it's up to the runtime to configure according to its needs. Additional/consequent changes: - `penpal` runtime uses now `xcm::latest::Location` for `pallet_assets` as `AssetId`, because we don't care about migrations here - it pretty much simplify xcm-emulator integration tests, where we don't need now a lots of boilerplate conversions: ``` v3::Location::try_from(...).expect("conversion works")` ``` - xcm-emulator tests - split macro `impl_assets_helpers_for_parachain` to the `impl_assets_helpers_for_parachain` and `impl_foreign_assets_helpers_for_parachain` (avoids using hard-coded `xcm::v3::Location`)

-

- Apr 11, 2024

-

-

Liam Aharon authored

Completes the removal of `try-runtime-cli` logic from `polkadot-sdk`.

-

- Apr 10, 2024

-

-

- Apr 09, 2024

-

-

Sebastian Kunert authored

Cumulus test-parachain node and test runtime were still using relay chain consensus and 12s blocktimes. With async backing around the corner on the major chains we should switch our tests too. Also needed to nicely test the changes coming to collators in #3168. ### Changes Overview - Followed the [migration guide](https://wiki.polkadot.network/docs/maintain-guides-async-backing) for async backing for the cumulus-test-runtime - Adjusted the cumulus-test-service to use the correct import-queue, lookahead collator etc. - The block validation function now uses the Aura Ext Executor so that the seal of the block is validated - Previous point requires that we seal block before calling into `validate_block`, I introduced a helper function for that - Test client adjusted to provide a slot to the relay chain proof and the aura pre-digest

-

Facundo Farall authored

# Description - What does this PR do? 1. Upgrades `trie-db`'s version to the latest release. This release includes, among others, an implementation of `DoubleEndedIterator` for the `TrieDB` struct, allowing to iterate both backwards and forwards within the leaves of a trie. 2. Upgrades `trie-bench` to `0.39.0` for compatibility. 3. Upgrades `criterion` to `0.5.1` for compatibility. - Why are these changes needed? Besides keeping up with the upgrade of `trie-db`, this specifically adds the functionality of iterating back on the leafs of a trie, with `sp-trie`. In a project we're currently working on, this comes very handy to verify a Merkle proof that is the response to a challenge. The challenge is a random hash that (most likely) will not be an existing leaf in the trie. So the challenged user, has to provide a Merkle proof of the previous and next existing leafs in the trie, that surround the random challenged hash. Without having DoubleEnded iterators, we're forced to iterate until we find the first existing leaf, like so: ```rust // ************* VERIFIER (RUNTIME) ************* // Verify proof. This generates a partial trie based on the proof and // checks that the root hash matches the `expected_root`. let (memdb, root) = proof.to_memory_db(Some(&root)).unwrap(); let trie = TrieDBBuilder::<LayoutV1<RefHasher>>::new(&memdb, &root).build(); // Print all leaf node keys and values. println!("\nPrinting leaf nodes of partial tree..."); for key in trie.key_iter().unwrap() { if key.is_ok() { println!("Leaf node key: {:?}", key.clone().unwrap()); let val = trie.get(&key.unwrap()); if val.is_ok() { println!("Leaf node value: {:?}", val.unwrap()); } else { println!("Leaf node value: None"); } } } println!("RECONSTRUCTED TRIE {:#?}", trie); // Create an iterator over the leaf nodes. let mut iter = trie.iter().unwrap(); // First element with a value should be the previous existing leaf to the challenged hash. let mut prev_key = None; for element in &mut iter { if element.is_ok() { let (key, _) = element.unwrap(); prev_key = Some(key); break; } } assert!(prev_key.is_some()); // Since hashes are `Vec<u8>` ordered in big-endian, we can compare them directly. assert!(prev_key.unwrap() <= challenge_hash.to_vec()); // The next element should exist (meaning there is no other existing leaf between the // previous and next leaf) and it should be greater than the challenged hash. let next_key = iter.next().unwrap().unwrap().0; assert!(next_key >= challenge_hash.to_vec()); ``` With DoubleEnded iterators, we can avoid that, like this: ```rust // ************* VERIFIER (RUNTIME) ************* // Verify proof. This generates a partial trie based on the proof and // checks that the root hash matches the `expected_root`. let (memdb, root) = proof.to_memory_db(Some(&root)).unwrap(); let trie = TrieDBBuilder::<LayoutV1<RefHasher>>::new(&memdb, &root).build(); // Print all leaf node keys and values. println!("\nPrinting leaf nodes of partial tree..."); for key in trie.key_iter().unwrap() { if key.is_ok() { println!("Leaf node key: {:?}", key.clone().unwrap()); let val = trie.get(&key.unwrap()); if val.is_ok() { println!("Leaf node value: {:?}", val.unwrap()); } else { println!("Leaf node value: None"); } } } // println!("RECONSTRUCTED TRIE {:#?}", trie); println!("\nChallenged key: {:?}", challenge_hash); // Create an iterator over the leaf nodes. let mut double_ended_iter = trie.into_double_ended_iter().unwrap(); // First element with a value should be the previous existing leaf to the challenged hash. double_ended_iter.seek(&challenge_hash.to_vec()).unwrap(); let next_key = double_ended_iter.next_back().unwrap().unwrap().0; let prev_key = double_ended_iter.next_back().unwrap().unwrap().0; // Since hashes are `Vec<u8>` ordered in big-endian, we can compare them directly. println!("Prev key: {:?}", prev_key); assert!(prev_key <= challenge_hash.to_vec()); println!("Next key: {:?}", next_key); assert!(next_key >= challenge_hash.to_vec()); ``` - How were these changes implemented and what do they affect? All that is needed for this functionality to be exposed is changing the version number of `trie-db` in all the `Cargo.toml`s applicable, and re-exporting some additional structs from `trie-db` in `sp-trie`. --------- Co-authored-by:Bastian Köcher <[email protected]>

-

Branislav Kontur authored

Co-authored-by:Bastian Köcher <[email protected]>

-

- Apr 08, 2024

-

-

Aaro Altonen authored

[litep2p](https://github.com/altonen/litep2p) is a libp2p-compatible P2P networking library. It supports all of the features of `rust-libp2p` that are currently being utilized by Polkadot SDK. Compared to `rust-libp2p`, `litep2p` has a quite different architecture which is why the new `litep2p` network backend is only able to use a little of the existing code in `sc-network`. The design has been mainly influenced by how we'd wish to structure our networking-related code in Polkadot SDK: independent higher-levels protocols directly communicating with the network over links that support bidirectional backpressure. A good example would be `NotificationHandle`/`RequestResponseHandle` abstractions which allow, e.g., `SyncingEngine` to directly communicate with peers to announce/request blocks. I've tried running `polkadot --network-backend litep2p` with a few different peer configurations and there is a noticeable reduction in networking CPU usage. For high load (`--out-peers 200`), networking CPU usage goes down from ~110% to ~30% (80 pp) and for normal load (`--out-peers 40`), the usage goes down from ~55% to ~18% (37 pp). These should not be taken as final numbers because: a) there are still some low-hanging optimization fruits, such as enabling [receive window auto-tuning](https://github.com/libp2p/rust-yamux/pull/176), integrating `Peerset` more closely with `litep2p` or improving memory usage of the WebSocket transport b) fixing bugs/instabilities that incorrectly cause `litep2p` to do less work will increase the networking CPU usage c) verification in a more diverse set of tests/conditions is needed Nevertheless, these numbers should give an early estimate for CPU usage of the new networking backend. This PR consists of three separate changes: * introduce a generic `PeerId` (wrapper around `Multihash`) so that we don't have use `NetworkService::PeerId` in every part of the code that uses a `PeerId` * introduce `NetworkBackend` trait, implement it for the libp2p network stack and make Polkadot SDK generic over `NetworkBackend` * implement `NetworkBackend` for litep2p The new library should be considered experimental which is why `rust-libp2p` will remain as the default option for the time being. This PR currently depends on the master branch of `litep2p` but I'll cut a new release for the library once all review comments have been addresses. --------- Signed-off-by:

Alexandru Vasile <[email protected]> Co-authored-by:

Dmitry Markin <[email protected]> Co-authored-by:

Alexandru Vasile <[email protected]> Co-authored-by:

Alexandru Vasile <[email protected]>

-

Oliver Tale-Yazdi authored

This MR contains two major changes and some maintenance cleanup. ## 1. Free Standing Pallet Benchmark Runner Closes https://github.com/paritytech/polkadot-sdk/issues/3045, depends on your runtime exposing the `GenesisBuilderApi` (like https://github.com/paritytech/polkadot-sdk/pull/1492). Introduces a new binary crate: `frame-omni-bencher`. It allows to directly benchmark a WASM blob - without needing a node or chain spec. This makes it much easier to generate pallet weights and should allow us to remove bloaty code from the node. It should work for all FRAME runtimes that dont use 3rd party host calls or non `BlakeTwo256` block hashing (basically all polkadot parachains should work). It is 100% backwards compatible with the old CLI args, when the `v1` compatibility command is used. This is done to allow for forwards compatible addition of new commands. ### Example (full example in the Rust docs) Installing the CLI: ```sh cargo install --locked --path substrate/utils/frame/omni-bencher frame-omni-bencher --help ``` Building the Westend runtime: ```sh cargo build -p westend-runtime --release --features runtime-benchmarks ``` Benchmarking the runtime: ```sh frame-omni-bencher v1 benchmark pallet --runtime target/release/wbuild/westend-runtime/westend_runtime.compact.compressed.wasm --all ``` ## 2. Building the Benchmark Genesis State in the Runtime Closes https://github.com/paritytech/polkadot-sdk/issues/2664 This adds `--runtime` and `--genesis-builder=none|runtime|spec` arguments to the `benchmark pallet` command to make it possible to generate the genesis storage by the runtime. This can be used with both the node and the freestanding benchmark runners. It utilizes the new `GenesisBuilder` RA and depends on having https://github.com/paritytech/polkadot-sdk/pull/3412 deployed. ## 3. Simpler args for `PalletCmd::run` You can do three things here to integrate the changes into your node: - nothing: old code keeps working as before but emits a deprecated warning - delete: remove the pallet benchmarking code from your node and use the omni-bencher instead - patch: apply the patch below and keep using as currently. This emits a deprecated warning at runtime, since it uses the old way to generate a genesis state, but is the smallest change. ```patch runner.sync_run(|config| cmd - .run::<HashingFor<Block>, ReclaimHostFunctions>(config) + .run_with_spec::<HashingFor<Block>, ReclaimHostFunctions>(Some(config.chain_spec)) ) ``` ## 4. Maintenance Change - `pallet-nis` get a `BenchmarkSetup` config item to prepare its counterparty asset. - Add percent progress print when running benchmarks. - Dont immediately exit on benchmark error but try to run as many as possible and print errors last. --------- Signed-off-by:

Oliver Tale-Yazdi <[email protected]> Co-authored-by:

Liam Aharon <[email protected]>

-

- Apr 06, 2024

-

-

Squirrel authored

I don't think there are any more releases to the 0.2.x versions, so best we're on the 0.3.x release. No change on the benchmarks, fast local time is still just as fast as before: new version bench: ``` fast_local_time time: [30.551 ns 30.595 ns 30.668 ns] ``` old version bench: ``` fast_local_time time: [30.598 ns 30.646 ns 30.723 ns] ``` --------- Co-authored-by:Bastian Köcher <[email protected]>

-

- Apr 05, 2024

-

-

Sergej Sakac authored

Defines a runtime api for `pallet-broker` for getting the current price of a core if there is an ongoing sale. Closes: #3413 --------- Co-authored-by:Bastian Köcher <[email protected]>

-

dependabot[bot] authored

Bumps [h2](https://github.com/hyperium/h2) from 0.3.24 to 0.3.26. <details> <summary>Release notes</summary> <p><em>Sourced from <a href="https://github.com/hyperium/h2/releases">h2's releases</a>.</em></p> <blockquote> <h2>v0.3.26</h2> <h2>What's Changed</h2> <ul> <li>Limit number of CONTINUATION frames for misbehaving connections.</li> </ul> <p>See <a href="https://seanmonstar.com/blog/hyper-http2-continuation-flood/">https://seanmonstar.com/blog/hyper-http2-continuation-flood/</a> for more info.</p> <h2>v0.3.25</h2> <h2>What's Changed</h2> <ul> <li>perf: optimize header list size calculations by <a href="https://github.com/Noah-Kennedy"><code>@Noah-Kennedy</code></a> in <a href="https://redirect.github.com/hyperium/h2/pull/750">hyperium/h2#750</a></li> </ul> <p><strong>Full Changelog</strong>: <a href="https://github.com/hyperium/h2/compare/v0.3.24...v0.3.25">https://github.com/hyperium/h2/compare/v0.3.24...v0.3.25</a></p> </blockquote> </details> <details> <summary>Changelog</summary> <p><em>Sourced from <a href="https://github.com/hyperium/h2/blob/v0.3.26/CHANGELOG.md">h2's changelog</a>.</em></p> <blockquote> <h1>0.3.26 (April 3, 2024)</h1> <ul> <li>Limit number of CONTINUATION frames for misbehaving connections.</li> </ul> <h1>0.3.25 (March 15, 2024)</h1> <ul> <li>Improve performance decoding many headers.</li> </ul> </blockquote> </details> <details> <summary>Commits</summary> <ul> <li><a href="https://github.com/hyperium/h2/commit/357127e279c06935830fe2140378312eac801494"><code>357127e</code></a> v0.3.26</li> <li><a href="https://github.com/hyperium/h2/commit/1a357aaefc7243fdfa9442f45d90be17794a4004"><code>1a357aa</code></a> fix: limit number of CONTINUATION frames allowed</li> <li><a href="https://github.com/hyperium/h2/commit/5b6c9e0da092728d702dff3607626aafb7809d77"><code>5b6c9e0</code></a> refactor: cleanup new unused warnings (<a href="https://redirect.github.com/hyperium/h2/issues/757">#757</a>)</li> <li><a href="https://github.com/hyperium/h2/commit/3a798327211345b9b2bf797e2e4f3aca4e0ddfee"><code>3a79832</code></a> v0.3.25</li> <li><a href="https://github.com/hyperium/h2/commit/94e80b1c72bec282bb5d13596803e6fb341fec4c"><code>94e80b1</code></a> perf: optimize header list size calculations (<a href="https://redirect.github.com/hyperium/h2/issues/750">#750</a>)</li> <li>See full diff in <a href="https://github.com/hyperium/h2/compare/v0.3.24...v0.3.26">compare view</a></li> </ul> </details> <br /> [](https://docs.github.com/en/github/managing-security-vulnerabilities/about-dependabot-security-updates#about-compatibility-scores) Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`. [//]: # (dependabot-automerge-start) [//]: # (dependabot-automerge-end) --- <details> <summary>Dependabot commands and options</summary> <br /> You can trigger Dependabot actions by commenting on this PR: - `@dependabot rebase` will rebase this PR - `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it - `@dependabot merge` will merge this PR after your CI passes on it - `@dependabot squash and merge` will squash and merge this PR after your CI passes on it - `@dependabot cancel merge` will cancel a previously requested merge and block automerging - `@dependabot reopen` will reopen this PR if it is closed - `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually - `@dependabot show <dependency name> ignore conditions` will show all of the ignore conditions of the specified dependency - `@dependabot ignore this major version` will close this PR and stop Dependabot creating any more for this major version (unless you reopen the PR or upgrade to it yourself) - `@dependabot ignore this minor version` will close this PR and stop Dependabot creating any more for this minor version (unless you reopen the PR or upgrade to it yourself) - `@dependabot ignore this dependency` will close this PR and stop Dependabot creating any more for this dependency (unless you reopen the PR or upgrade to it yourself) You can disable automated security fix PRs for this repo from the [Security Alerts page](https://github.com/paritytech/polkadot-sdk/network/alerts). </details> Signed-off-by:

dependabot[bot] <[email protected]> Co-authored-by:

dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

-

- Apr 04, 2024

-

-

Michal Kucharczyk authored

The runtime now can provide a number of predefined presets of `RuntimeGenesisConfig` struct. This presets are intended to be used in different deployments, e.g.: `local`, `staging`, etc, and should be included into the corresponding chain-specs. Having `GenesisConfig` presets in runtime allows to fully decouple node from runtime types (the problem is described in #1984). **Summary of changes:** - The `GenesisBuilder` API was adjusted to enable this functionality (and provide better naming - #150): ```rust fn preset_names() -> Vec<PresetId>; fn get_preset(id: Option<PresetId>) -> Option<serde_json::Value>; //`None` means default fn build_state(value: serde_json::Value); pub struct PresetId(Vec<u8>); ``` - **Breaking change**: Old `create_default_config` method was removed, `build_config` was renamed to `build_state`. As a consequence a node won't be able to interact with genesis config for older runtimes. The cleanup was made for sake of API simplicity. Also IMO maintaining compatibility with old API is not so crucial. - Reference implementation was provided for `substrate-test-runtime` and `rococo` runtimes. For rococo new [`genesis_configs_presets`](https://github.com/paritytech/polkadot-sdk/blob/3b41d66b/polkadot/runtime/rococo/src/genesis_config_presets.rs#L530) module was added and is used in `GenesisBuilder` [_presets-related_](https://github.com/paritytech/polkadot-sdk/blob/3b41d66b/polkadot/runtime/rococo/src/lib.rs#L2462-L2485) methods. - The `chain-spec-builder` util was also improved and allows to ([_doc_](https://github.com/paritytech/polkadot-sdk/blob/3b41d66b/substrate/bin/utils/chain-spec-builder/src/lib.rs#L19)): - list presets provided by given runtime (`list-presets`), - display preset or default config provided by the runtime (`display-preset`), - build chain-spec using named preset (`create ... named-preset`), - The `ChainSpecBuilder` is extended with [`with_genesis_config_preset_name`](https://github.com/paritytech/polkadot-sdk/blob/3b41d66b/substrate/client/chain-spec/src/chain_spec.rs#L447) method which allows to build chain-spec using named preset provided by the runtime. Sample usage on the node side [here](https://github.com/paritytech/polkadot-sdk/blob/2caffaae /polkadot/node/service/src/chain_spec.rs#L404). Implementation of #1984. fixes: #150 part of: #25 --------- Co-authored-by:Sebastian Kunert <[email protected]> Co-authored-by:

Kian Paimani <[email protected]> Co-authored-by:

Oliver Tale-Yazdi <[email protected]>

-

juangirini authored

## Basic example showcasing a migration using the MBM framework This PR has been built on top of https://github.com/paritytech/polkadot-sdk/pull/1781 and adds two new example crates to the `examples` pallet ### Changes Made: Added the `pallet-example-mbm` crate: This crate provides a minimal example of a pallet that uses MBM. It showcases a storage migration where values are migrated from a `u32` to a `u64`. --------- Signed-off-by:

Oliver Tale-Yazdi <[email protected]> Co-authored-by:

Oliver Tale-Yazdi <[email protected]> Co-authored-by:

Liam Aharon <[email protected]>

-

Lulu authored

CI will be enforcing this with next parity-publish release

-

gupnik authored

Step in https://github.com/paritytech/polkadot-sdk/issues/3155 Needed for https://github.com/paritytech/eng-automation/issues/6 This PR renames `frame` crate to `polkadot-sdk-frame` as `frame` is not available on crates.io --------- Co-authored-by:Kian Paimani <[email protected]>

-

- Apr 03, 2024

-

-

Sebastian Kunert authored

Enables pov-reclaim on the rococo/westend parachains, part of https://github.com/paritytech/polkadot-sdk/issues/3622

-

Alexandru Vasile authored

This PR ensure that the distance between any leaf and the finalized block is within a reasonable distance. For a new subscription, the chainHead has to provide all blocks between the leaves of the chain and the finalized block. When the distance between a leaf and the finalized block is large: - The tree route is costly to compute - We could deliver an unbounded number of blocks (potentially millions) (For more details see https://github.com/paritytech/polkadot-sdk/pull/3445#discussion_r1507210283) The configuration of the ChainHead is extended with: - suspend on lagging distance: When the distance between any leaf and the finalized block is greater than this number, the subscriptions are suspended for a given duration. - All active subscriptions are terminated with the `Stop` event, all blocks are unpinned and data discarded. - For incoming subscriptions, until the suspended period expires the subscriptions will immediately receive the `Stop` event. - Defaults to 128 blocks - suspended duration: The amount of time for which subscriptions are suspended - Defaults to 30 seconds cc @paritytech/subxt-team --------- Signed-off-by:Alexandru Vasile <[email protected]> Co-authored-by:

Sebastian Kunert <[email protected]>

-

- Apr 02, 2024

-

-

Serban Iorga authored

Working towards migrating the `parity-bridges-common` repo inside `polkadot-sdk`. This PR upgrades some dependencies in order to align them with the versions used in `parity-bridges-common` Related to https://github.com/paritytech/parity-bridges-common/issues/2538

-

Alexandru Vasile authored

chainHead: Allow methods to be called from within a single connection context and limit connections (#3481) This PR ensures that the chainHead RPC class can be called only from within the same connection context. The chainHead methods are now registered as raw methods. - https://github.com/paritytech/jsonrpsee/pull/1297 The concept of raw methods is introduced in jsonrpsee, which is an async method that exposes the connection ID: The raw method doesn't have the concept of a blocking method. Previously blocking methods are now spawning a blocking task to handle their blocking (ie DB) access. We spawn the same number of tasks as before, however we do that explicitly. Another approach would be implementing a RPC middleware that captures and decodes the method parameters: - https://github.com/paritytech/polkadot-sdk/pull/3343 However, that approach is prone to errors since the methods are hardcoded by name. Performace is affected by the double deserialization that needs to happen to extract the subscription ID we'd like to limit. Once from the middleware, and once from the methods itself. This PR paves the way to implement the chainHead connection limiter: - https://github.com/paritytech/polkadot-sdk/issues/1505 Registering tokens (subscription ID / operation ID) on the `RpcConnections` could be extended to return an error when the maximum number of operations is reached. While at it, have added an integration-test to ensure that chainHead methods can be called from within the same connection context. Before this is merged, a new JsonRPC release should be made to expose the `raw-methods`: - [x] Use jsonrpsee from crates io (blocked by: https://github.com/paritytech/jsonrpsee/pull/1297) Closes: https://github.com/paritytech/polkadot-sdk/issues/3207 cc @paritytech/subxt-team --------- Signed-off-by:

Alexandru Vasile <[email protected]> Co-authored-by:

Niklas Adolfsson <[email protected]>

-

Serban Iorga authored

Pulling the latest changes from `parity-bridges-common`

-

Sam Johnson authored

derive-syn-parse v0.2.0 came out recently which (finally) adds support for syn 2x. Upgrading to this will remove many of the places where syn 1x was still compiling alongside syn 2x in the polkadot-sdk workspace. This also upgrades `docify` to 0.2.8 which is the version that upgrades derive-syn-pasre to 0.2.0. Additionally, this consolidates the `docify` versions in the repo to all use the latest, and in one case upgrades to the 0.2x syntax where 0.1.x was still being used. --------- Co-authored-by:Liam Aharon <[email protected]>

-

- Apr 01, 2024

-

-

s0me0ne-unkn0wn authored

Rejoice! Rejoice! The story is nearly over. This PR removes stale migrations, auxiliary structures, and package dependencies, thus making Rococo and Westend totally free from any `im-online`-related stuff. `im-online` still stays a part of the Substrate node and its runtime: https://github.com/paritytech/polkadot-sdk/blob/0d932484/substrate/bin/node/runtime/src/lib.rs#L2276-L2277 I'm not sure if it makes sense to remove it from there considering that we're not removing `im-online` from FRAME. Please share your opinion.

-

- Mar 31, 2024

-

-

Liam Aharon authored

Closes https://github.com/paritytech/polkadot-sdk-docs/issues/70 WIP PR for an overview of how to develop tokens in FRAME. - [x] Tokens in Substrate Ref Doc - High-level overview of the token-related logic in FRAME - Improve docs with better explanation of how holds, freezes, ed, free balance, etc, all work - [x] Update `pallet_balances` docs - Clearly mark what is deprecated (currency) - [x] Write fungible trait docs - [x] Evaluate and if required update `pallet_assets`, `pallet_uniques`, `pallet_nfts` docs - [x] Absorb https://github.com/paritytech/polkadot-sdk/pull/2683/ - [x] Audit individual trait method docs, and improve if possible Feel free to suggest additional TODOs for this PR in the comments --------- Co-authored-by:

Bill Laboon <[email protected]> Co-authored-by:

Francisco Aguirre <[email protected]> Co-authored-by:

Kian Paimani <[email protected]> Co-authored-by:

Sebastian Kunert <[email protected]>

-

- Mar 28, 2024

-

-

Sebastian Kunert authored

This PR exports unified hostfunctions needed for parachains. Basicaly `SubstrateHostFunctions` + `storage_proof_size::HostFunctions`. Also removes the native executor from the parachain template. --------- Co-authored-by:Michal Kucharczyk <[email protected]>

-

PG Herveou authored

Cleanup tests (-2.7k lines !) using some builder patterns to build pallet_contracts api calls

-

- Mar 27, 2024

-

-

Francisco Aguirre authored

`execute` and `send` try to decode the xcm in the parameters before reaching the filter line. The new extrinsics decode only after the filter line. These should be used instead of the old ones. ## TODO - [x] Tests - [x] Generate weights - [x] Deprecation issue -> https://github.com/paritytech/polkadot-sdk/issues/3771 - [x] PRDoc - [x] Handle error in pallet-contracts This would make writing XCMs in PJS Apps more difficult, but here's the fix for that: https://github.com/polkadot-js/apps/pull/10350. Already deployed! https://polkadot.js.org/apps/#/utilities/xcm Supersedes https://github.com/paritytech/polkadot-sdk/pull/1798/ --------- Co-authored-by:

PG Herveou <[email protected]> Co-authored-by: command-bot <> Co-authored-by:

Adrian Catangiu <[email protected]>

-

- Mar 26, 2024

-

-

Pavel Orlov authored

The PR provides API for obtaining: - the weight required to execute an XCM message, - a list of acceptable `AssetId`s for message execution payment, - the cost of the weight in the specified acceptable `AssetId`. It is meant to address an issue where one has to guess how much fee to pay for execution. Also, at the moment, a client has to guess which assets are acceptable for fee execution payment. See the related issue https://github.com/paritytech/polkadot-sdk/issues/690. With this API, a client is supposed to query the list of the supported asset IDs (in the XCM version format the client understands), weigh the XCM program the client wants to execute and convert the weight into one of the acceptable assets. Note that the client is supposed to know what program will be executed on what chains. However, having a small companion JS library for the pallet-xcm and xtokens should be enough to determine what XCM programs will be executed and where (since these pallets compose a known small set of programs). ```Rust pub trait XcmPaymentApi<Call> where Call: Codec, { /// Returns a list of acceptable payment assets. /// /// # Arguments /// /// * `xcm_version`: Version. fn query_acceptable_payment_assets(xcm_version: Version) -> Result<Vec<VersionedAssetId>, Error>; /// Returns a weight needed to execute a XCM. /// /// # Arguments /// /// * `message`: `VersionedXcm`. fn query_xcm_weight(message: VersionedXcm<Call>) -> Result<Weight, Error>; /// Converts a weight into a fee for the specified `AssetId`. /// /// # Arguments /// /// * `weight`: convertible `Weight`. /// * `asset`: `VersionedAssetId`. fn query_weight_to_asset_fee(weight: Weight, asset: VersionedAssetId) -> Result<u128, Error>; /// Get delivery fees for sending a specific `message` to a `destination`. /// These always come in a specific asset, defined by the chain. /// /// # Arguments /// * `message`: The message that'll be sent, necessary because most delivery fees are based on the /// size of the message. /// * `destination`: The destination to send the message to. Different destinations may use /// different senders that charge different fees. fn query_delivery_fees(destination: VersionedLocation, message: VersionedXcm<()>) -> Result<VersionedAssets, Error>; } ``` An [example](https://gist.github.com/PraetorP/4bc323ff85401abe253897ba990ec29d) of a client side code. --------- Co-authored-by:Francisco Aguirre <[email protected]> Co-authored-by:

Adrian Catangiu <[email protected]> Co-authored-by:

Daniel Shiposha <[email protected]>

-

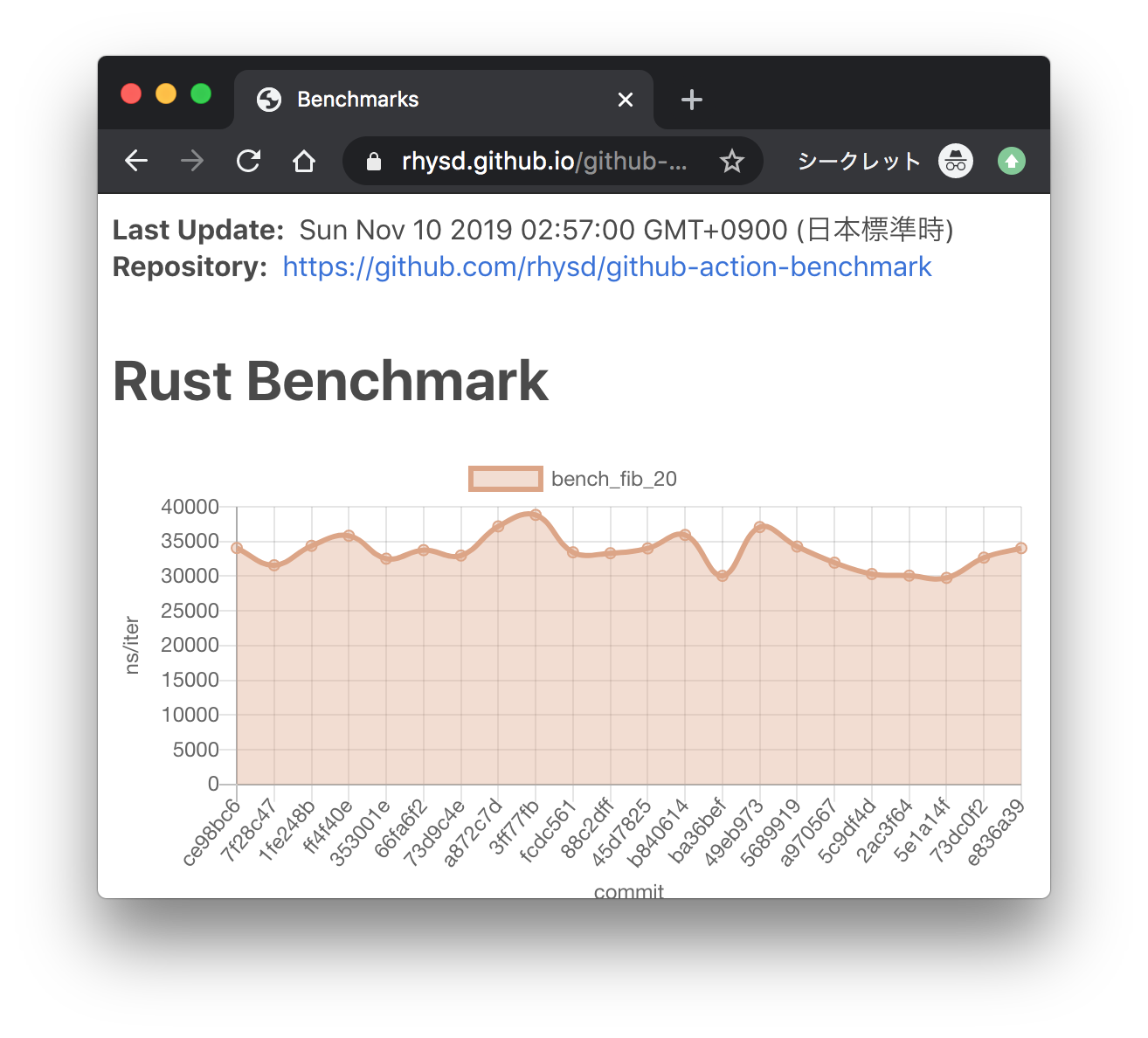

Andrei Eres authored

Here we add the ability to save subsystem benchmark results in JSON format to display them as graphs To draw graphs, CI team will use [github-action-benchmark](https://github.com/benchmark-action/github-action-benchmark). Since we are using custom benchmarks, we need to prepare [a specific data type](https://github.com/benchmark-action/github-action-benchmark?tab=readme-ov-file#examples): ``` [ { "name": "CPU Load", "unit": "Percent", "value": 50 } ] ``` Then we'll get graphs like this:  [A live page with graphs](https://benchmark-action.github.io/github-action-benchmark/dev/bench/) --------- Co-authored-by:ordian <[email protected]>

-

- Mar 22, 2024

-

-

Dmitry Markin authored

Make sure explicitly set by the operator public addresses go first in the authority discovery DHT records. Also update `Discovery` behavior to eliminate duplicates in the returned addresses. This PR should improve situation with https://github.com/paritytech/polkadot-sdk/issues/3519. Obsoletes https://github.com/paritytech/polkadot-sdk/pull/3657.

-

- Mar 20, 2024

-

-

s0me0ne-unkn0wn authored

This PR proposes enabling PoV reclaim on the `rococo-parachain` testchain to streamline testing and development of high-TPS stuff.

-

eskimor authored

We witnessed really poor performance on Rococo, where we ended up with 50 on-demand cores. This was due to the fact that for each core the full queue was processed. With this change full queue processing will happen way less often (most of the time complexity is O(1) or O(log(n))) and if it happens then only for one core (in expectation). Also spot price is now updated before each order to ensure economic back pressure. TODO: - [x] Implement - [x] Basic tests - [x] Add more tests (see todos) - [x] Run benchmark to confirm better performance, first results suggest > 100x faster. - [x] Write migrations - [x] Bump scale-info version and remove patch in Cargo.toml - [x] Write PR docs: on-demand performance improved, more on-demand cores are now non problematic anymore. If need by also the max queue size can be increased again. (Maybe not to 10k) Optional: Performance can be improved even more, if we called `pop_assignment_for_core()`, before calling `report_processed` (Avoid needless affinity drops). The effect gets smaller the larger the claim queue and I would only go for it, if it does not add complexity to the scheduler. --------- Co-authored-by:

eskimor <[email protected]> Co-authored-by:

antonva <[email protected]> Co-authored-by: command-bot <> Co-authored-by:

Anton Vilhelm Ásgeirsson <[email protected]> Co-authored-by:

ordian <[email protected]>

-

bader y authored

_This PR is being continued from https://github.com/paritytech/polkadot-sdk/pull/2206, which was closed when the developer_hub was merged._ closes https://github.com/paritytech/polkadot-sdk-docs/issues/44 --- # Description This PR adds a reference document to the `developer-hub` crate (see https://github.com/paritytech/polkadot-sdk/pull/2102). This specific reference document covers defensive programming practices common within the context of developing a runtime with Substrate. In particular, this covers the following areas: - Default behavior of how Rust deals with numbers in general - How to deal with floating point numbers in runtime / fixed point arithmetic - How to deal with Integer overflows - General "safe math" / defensive programming practices for common pallet development scenarios - Defensive traits that exist within Substrate, i.e., `defensive_saturating_add `, `defensive_unwrap_or` - More general defensive programming examples (keep it concise) - Link to relevant examples where these practices are actually in production / being used - Unwrapping (or rather lack thereof) 101 todo -- - [x] Apply feedback from previous PR - [x] This may warrant a PR to append some of these docs to `sp_arithmetic` --------- Co-authored-by:

Oliver Tale-Yazdi <[email protected]> Co-authored-by:

Gonçalo Pestana <[email protected]> Co-authored-by:

Kian Paimani <[email protected]> Co-authored-by:

Francisco Aguirre <[email protected]> Co-authored-by:

Radha <[email protected]>

-

dependabot[bot] authored

Bumps [anyhow](https://github.com/dtolnay/anyhow) from 1.0.75 to 1.0.81. <details> <summary>Release notes</summary> <p><em>Sourced from <a href="https://github.com/dtolnay/anyhow/releases">anyhow's releases</a>.</em></p> <blockquote> <h2>1.0.81</h2> <ul> <li>Make backtrace support available when using -Dwarnings (<a href="https://redirect.github.com/dtolnay/anyhow/issues/354">#354</a>)</li> </ul> <h2>1.0.80</h2> <ul> <li>Fix unused_imports warnings when compiled by rustc 1.78</li> </ul> <h2>1.0.79</h2> <ul> <li>Work around improperly cached build script result by sccache (<a href="https://redirect.github.com/dtolnay/anyhow/issues/340">#340</a>)</li> </ul> <h2>1.0.78</h2> <ul> <li>Reduce spurious rebuilds under RustRover IDE when using a nightly toolchain (<a href="https://redirect.github.com/dtolnay/anyhow/issues/337">#337</a>)</li> </ul> <h2>1.0.77</h2> <ul> <li>Make <code>anyhow::Error::backtrace</code> available on stable Rust compilers 1.65+ (<a href="https://redirect.github.com/dtolnay/anyhow/issues/293">#293</a>, thanks <a href="https://github.com/LukasKalbertodt"><code>@LukasKalbertodt</code></a>)</li> </ul> <h2>1.0.76</h2> <ul> <li>Opt in to <code>unsafe_op_in_unsafe_fn</code> lint (<a href="https://redirect.github.com/dtolnay/anyhow/issues/329">#329</a>)</li> </ul> </blockquote> </details> <details> <summary>Commits</summary> <ul> <li><a href="https://github.com/dtolnay/anyhow/commit/4aad4edebd9f09247d6c6b6784419a74bb116829"><code>4aad4ed</code></a> Release 1.0.81</li> <li><a href="https://github.com/dtolnay/anyhow/commit/8be90917c603199c5d1fdd73984237f023768e22"><code>8be9091</code></a> Merge pull request <a href="https://redirect.github.com/dtolnay/anyhow/issues/354">#354</a> from dtolnay/deadcode</li> <li><a href="https://github.com/dtolnay/anyhow/commit/a2eb7dd5e13add83f254b6dac0f68e043effc521"><code>a2eb7dd</code></a> Make compatible with -Dwarnings</li> <li><a href="https://github.com/dtolnay/anyhow/commit/54437197ee79c20678db433d98616fab7ddff1a5"><code>5443719</code></a> Release 1.0.80</li> <li><a href="https://github.com/dtolnay/anyhow/commit/dfc7bc07d4c41b61093c3251ed82becb51810bd4"><code>dfc7bc0c </code></a> Work around prelude redundant import warnings</li> <li><a href="https://github.com/dtolnay/anyhow/commit/6e4f86b48b5182ec71dbc8e308db9dc91e2ec8a5"><code>6e4f86b</code></a> Import from alloc not std, where possible</li> <li><a href="https://github.com/dtolnay/anyhow/commit/f885a133ede579c45e90ab489455126403d05db1"><code>f885a13</code></a> Ignore incompatible_msrv clippy false positives in test</li> <li><a href="https://github.com/dtolnay/anyhow/commit/fefbcbcb0b336a2d6c2ce6f0ee6d3fd02ef2cd3b"><code>fefbcbc</code></a> Ignore incompatible_msrv clippy lint</li> <li><a href="https://github.com/dtolnay/anyhow/commit/78f2d81cc71b79050a2fda270c45ff267557d853"><code>78f2d81</code></a> Update ui test suite to nightly-2024-02-08</li> <li><a href="https://github.com/dtolnay/anyhow/commit/edd88d3a43f11f1931330d3dd54189353ef00203"><code>edd88d3</code></a> Update ui test suite to nightly-2024-01-31</li> <li>Additional commits viewable in <a href="https://github.com/dtolnay/anyhow/compare/1.0.75...1.0.81">compare view</a></li> </ul> </details> <br /> [](https://docs.github.com/en/github/managing-security-vulnerabilities/about-dependabot-security-updates#about-compatibility-scores) Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`. [//]: # (dependabot-automerge-start) [//]: # (dependabot-automerge-end) --- <details> <summary>Dependabot commands and options</summary> <br /> You can trigger Dependabot actions by commenting on this PR: - `@dependabot rebase` will rebase this PR - `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it - `@dependabot merge` will merge this PR after your CI passes on it - `@dependabot squash and merge` will squash and merge this PR after your CI passes on it - `@dependabot cancel merge` will cancel a previously requested merge and block automerging - `@dependabot reopen` will reopen this PR if it is closed - `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually - `@dependabot show <dependency name> ignore conditions` will show all of the ignore conditions of the specified dependency - `@dependabot ignore this major version` will close this PR and stop Dependabot creating any more for this major version (unless you reopen the PR or upgrade to it yourself) - `@dependabot ignore this minor version` will close this PR and stop Dependabot creating any more for this minor version (unless you reopen the PR or upgrade to it yourself) - `@dependabot ignore this dependency` will close this PR and stop Dependabot creating any more for this dependency (unless you reopen the PR or upgrade to it yourself) </details> Signed-off-by:

dependabot[bot] <[email protected]> Co-authored-by:

dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

-

Tsvetomir Dimitrov authored

The PR adds two things: 1. Runtime API exposing the whole claim queue 2. Consumes the API in `collation-generation` to fetch the next scheduled `ParaEntry` for an occupied core. Related to https://github.com/paritytech/polkadot-sdk/issues/1797

-

- Mar 19, 2024

-

-

dependabot[bot] authored

Bumps the known_good_semver group with 2 updates: [serde_yaml](https://github.com/dtolnay/serde-yaml) and [syn](https://github.com/dtolnay/syn). Updates `serde_yaml` from 0.9.32 to 0.9.33 <details> <summary>Release notes</summary> <p><em>Sourced from <a href="https://github.com/dtolnay/serde-yaml/releases">serde_yaml's releases</a>.</em></p> <blockquote> <h2>0.9.33</h2> <ul> <li>Fix quadratic parse time for YAML containing deeply nested flow collections (<a href="https://redirect.github.com/dtolnay/unsafe-libyaml/issues/26">dtolnay/unsafe-libyaml#26</a>)</li> </ul> </blockquote> </details> <details> <summary>Commits</summary> <ul> <li><a href="https://github.com/dtolnay/serde-yaml/commit/f4c9ed92385c827a677dae60c2b5a894e24709f0"><code>f4c9ed9</code></a> Release 0.9.33</li> <li><a href="https://github.com/dtolnay/serde-yaml/commit/b4edaee907373ee69c6389687a35fced63d8addf"><code>b4edaee</code></a> Pull in yaml_parser_fetch_more_tokens fix from libyaml</li> <li><a href="https://github.com/dtolnay/serde-yaml/commit/8a5542ced61ae21d0772e504fac01bd1dbfaaa6b"><code>8a5542c</code></a> Resolve non_local_definitions warning in test</li> <li>See full diff in <a href="https://github.com/dtolnay/serde-yaml/compare/0.9.32...0.9.33">compare view</a></li> </ul> </details> <br /> Updates `syn` from 2.0.52 to 2.0.53 <details> <summary>Release notes</summary> <p><em>Sourced from <a href="https://github.com/dtolnay/syn/releases">syn's releases</a>.</em></p> <blockquote> <h2>2.0.53</h2> <ul> <li>Implement Copy, Clone, and ToTokens for syn::parse::Nothing (<a href="https://redirect.github.com/dtolnay/syn/issues/1597">#1597</a>)</li> </ul> </blockquote> </details> <details> <summary>Commits</summary> <ul> <li><a href="https://github.com/dtolnay/syn/commit/32dcf8df303c7181d95325f159ffbb1870a73e70"><code>32dcf8d</code></a> Release 2.0.53</li> <li><a href="https://github.com/dtolnay/syn/commit/fd1f2aa9979c557e5b95c85f35b8949b8059153f"><code>fd1f2aa</code></a> Merge pull request <a href="https://redirect.github.com/dtolnay/syn/issues/1597">#1597</a> from dtolnay/copyprintnothing</li> <li><a href="https://github.com/dtolnay/syn/commit/4f6c0528d63874495ce23d197a898cd63cb04d60"><code>4f6c052</code></a> Implement ToTokens for syn::parse::Nothing</li> <li><a href="https://github.com/dtolnay/syn/commit/3f37543794e5d43952399847a2ecbc1eb39f609f"><code>3f37543</code></a> Implement Copy for syn::parse::Nothing</li> <li><a href="https://github.com/dtolnay/syn/commit/36a412217d5217fab5657ab03ebcf29102d87a64"><code>36a4122</code></a> Update test suite to nightly-2024-03-16</li> <li><a href="https://github.com/dtolnay/syn/commit/bd931069f5adb81168e7e6186ead0663cc605257"><code>bd93106</code></a> Revert "Temporarily disable nightly testing due to libLLVM link issue"</li> <li><a href="https://github.com/dtolnay/syn/commit/06166a77b7d465b0e4c45e1a2162f15031d7f722"><code>06166a7</code></a> Update test suite to nightly-2024-03-09</li> <li><a href="https://github.com/dtolnay/syn/commit/ed545e75d8fdcab5078789ad25fc0efadc14e59b"><code>ed545e7</code></a> Work around doc_markdown lint in test_precedence</li> <li><a href="https://github.com/dtolnay/syn/commit/7aef1edbba28afe1bb6f9ddc0b5095dcd5135849"><code>7aef1ed</code></a> Temporarily disable nightly testing due to libLLVM link issue</li> <li><a href="https://github.com/dtolnay/syn/commit/556b10bf201264172e4b840f8cf1be38b8d75738"><code>556b10b</code></a> Update test suite to nightly-2024-03-06</li> <li>Additional commits viewable in <a href="https://github.com/dtolnay/syn/compare/2.0.52...2.0.53">compare view</a></li> </ul> </details> <br /> Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`. [//]: # (dependabot-automerge-start) [//]: # (dependabot-automerge-end) --- <details> <summary>Dependabot commands and options</summary> <br /> You can trigger Dependabot actions by commenting on this PR: - `@dependabot rebase` will rebase this PR - `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it - `@dependabot merge` will merge this PR after your CI passes on it - `@dependabot squash and merge` will squash and merge this PR after your CI passes on it - `@dependabot cancel merge` will cancel a previously requested merge and block automerging - `@dependabot reopen` will reopen this PR if it is closed - `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually - `@dependabot show <dependency name> ignore conditions` will show all of the ignore conditions of the specified dependency - `@dependabot ignore <dependency name> major version` will close this group update PR and stop Dependabot creating any more for the specific dependency's major version (unless you unignore this specific dependency's major version or upgrade to it yourself) - `@dependabot ignore <dependency name> minor version` will close this group update PR and stop Dependabot creating any more for the specific dependency's minor version (unless you unignore this specific dependency's minor version or upgrade to it yourself) - `@dependabot ignore <dependency name>` will close this group update PR and stop Dependabot creating any more for the specific dependency (unless you unignore this specific dependency or upgrade to it yourself) - `@dependabot unignore <dependency name>` will remove all of the ignore conditions of the specified dependency - `@dependabot unignore <dependency name> <ignore condition>` will remove the ignore condition of the specified dependency and ignore conditions </details> Signed-off-by:

dependabot[bot] <[email protected]> Co-authored-by:

dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

-

- Mar 18, 2024

-

-

Squirrel authored

This PR removes sp-std crate from substrate/primitives sub-directories. For now crates that have `pub use` of sp-std or export macros that would necessitate users of the macros to `extern crate alloc` have been excluded from this PR. There should be no breaking changes in this PR. --------- Co-authored-by:Koute <[email protected]>

-

- Mar 17, 2024

-

-

dependabot[bot] authored

Bumps the known_good_semver group with 3 updates: [log](https://github.com/rust-lang/log), [syn](https://github.com/dtolnay/syn) and [clap](https://github.com/clap-rs/clap). Updates `log` from 0.4.20 to 0.4.21 <details> <summary>Changelog</summary> <p><em>Sourced from <a href="https://github.com/rust-lang/log/blob/master/CHANGELOG.md">log's changelog</a>.</em></p> <blockquote> <h2>[0.4.21] - 2024-02-27</h2> <h2>What's Changed</h2> <ul> <li>Minor clippy nits by <a href="https://github.com/nyurik"><code>@nyurik</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/578">rust-lang/log#578</a></li> <li>Simplify Display impl by <a href="https://github.com/nyurik"><code>@nyurik</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/579">rust-lang/log#579</a></li> <li>Set all crates to 2021 edition by <a href="https://github.com/nyurik"><code>@nyurik</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/580">rust-lang/log#580</a></li> <li>Various changes based on review by <a href="https://github.com/Thomasdezeeuw"><code>@Thomasdezeeuw</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/583">rust-lang/log#583</a></li> <li>Fix typo in file_static() method doc by <a href="https://github.com/dimo414"><code>@dimo414</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/590">rust-lang/log#590</a></li> <li>Specialize empty key value pairs by <a href="https://github.com/EFanZh"><code>@EFanZh</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/576">rust-lang/log#576</a></li> <li>Fix incorrect lifetime in Value::to_str() by <a href="https://github.com/peterjoel"><code>@peterjoel</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/587">rust-lang/log#587</a></li> <li>Remove some API of the key-value feature by <a href="https://github.com/Thomasdezeeuw"><code>@Thomasdezeeuw</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/585">rust-lang/log#585</a></li> <li>Add logcontrol-log and log-reload by <a href="https://github.com/swsnr"><code>@swsnr</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/595">rust-lang/log#595</a></li> <li>Add Serialization section to kv::Value docs by <a href="https://github.com/Thomasdezeeuw"><code>@Thomasdezeeuw</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/593">rust-lang/log#593</a></li> <li>Rename Value::to_str to to_cow_str by <a href="https://github.com/Thomasdezeeuw"><code>@Thomasdezeeuw</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/592">rust-lang/log#592</a></li> <li>Clarify documentation and simplify initialization of <code>STATIC_MAX_LEVEL</code> by <a href="https://github.com/ptosi"><code>@ptosi</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/594">rust-lang/log#594</a></li> <li>Update docs to 2021 edition, test by <a href="https://github.com/nyurik"><code>@nyurik</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/577">rust-lang/log#577</a></li> <li>Add "alterable_logger" link to README.md by <a href="https://github.com/brummer-simon"><code>@brummer-simon</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/589">rust-lang/log#589</a></li> <li>Normalize line ending by <a href="https://github.com/EFanZh"><code>@EFanZh</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/602">rust-lang/log#602</a></li> <li>Remove <code>ok_or</code> in favor of <code>Option::ok_or</code> by <a href="https://github.com/AngelicosPhosphoros"><code>@AngelicosPhosphoros</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/607">rust-lang/log#607</a></li> <li>Use <code>Acquire</code> ordering for initialization check by <a href="https://github.com/AngelicosPhosphoros"><code>@AngelicosPhosphoros</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/610">rust-lang/log#610</a></li> <li>Get structured logging API ready for stabilization by <a href="https://github.com/KodrAus"><code>@KodrAus</code></a> in <a href="https://redirect.github.com/rust-lang/log/pull/613">rust-lang/log#613</a></li> </ul> <h2>New Contributors</h2> <ul> <li><a href="https://github.com/nyurik"><code>@nyurik</code></a> made their first contribution in <a href="https://redirect.github.com/rust-lang/log/pull/578">rust-lang/log#578</a></li> <li><a href="https://github.com/dimo414"><code>@dimo414</code></a> made their first contribution in <a href="https://redirect.github.com/rust-lang/log/pull/590">rust-lang/log#590</a></li> <li><a href="https://github.com/peterjoel"><code>@peterjoel</code></a> made their first contribution in <a href="https://redirect.github.com/rust-lang/log/pull/587">rust-lang/log#587</a></li> <li><a href="https://github.com/ptosi"><code>@ptosi</code></a> made their first contribution in <a href="https://redirect.github.com/rust-lang/log/pull/594">rust-lang/log#594</a></li> <li><a href="https://github.com/brummer-simon"><code>@brummer-simon</code></a> made their first contribution in <a href="https://redirect.github.com/rust-lang/log/pull/589">rust-lang/log#589</a></li> <li><a href="https://github.com/AngelicosPhosphoros"><code>@AngelicosPhosphoros</code></a> made their first contribution in <a href="https://redirect.github.com/rust-lang/log/pull/607">rust-lang/log#607</a></li> </ul> </blockquote> </details> <details> <summary>Commits</summary> <ul> <li><a href="https://github.com/rust-lang/log/commit/3ccdc286fef3076747fe18a2a93658ea4d4ae012"><code>3ccdc28</code></a> Merge pull request <a href="https://redirect.github.com/rust-lang/log/issues/617">#617</a> from rust-lang/cargo/0.4.21</li> <li><a href="https://github.com/rust-lang/log/commit/6153cb289f0e7b80f00ae07dbe5ee41cf3d3fcb0"><code>6153cb2</code></a> prepare for 0.4.21 release</li> <li><a href="https://github.com/rust-lang/log/commit/f0f74946a4bfb02cfc407795a3499c4b69d7a290"><code>f0f7494</code></a> Merge pull request <a href="https://redirect.github.com/rust-lang/log/issues/613">#613</a> from rust-lang/feat/kv-cleanup</li> <li><a href="https://github.com/rust-lang/log/commit/2b220bf3b705f2abc0ee591c7eb17972a979da3a"><code>2b220bf</code></a> clean up structured logging example</li> <li><a href="https://github.com/rust-lang/log/commit/646e9ab9917fb79e44b6b36b8375106a1a09766c"><code>646e9ab</code></a> use original Visitor name for VisitValue</li> <li><a href="https://github.com/rust-lang/log/commit/cf85c38d3519745d60e7b891c4b2025050a8389f"><code>cf85c38</code></a> add needed subfeatures to kv_unstable</li> <li><a href="https://github.com/rust-lang/log/commit/73e953905b970ef765a86bf6cbd69bc2c5e2bac4"><code>73e9539</code></a> fix up capturing of :err</li> <li><a href="https://github.com/rust-lang/log/commit/31bb4b0ff36e458c6bef304a336b71f6342ddcc7"><code>31bb4b0</code></a> move error macros together</li> <li><a href="https://github.com/rust-lang/log/commit/ad917118a5e781d0dd60b3a75ba519ce9839ba70"><code>ad91711</code></a> support field shorthand in macros</li> <li><a href="https://github.com/rust-lang/log/commit/90a347bd836873264a393a35bfd90fe478fadae2"><code>90a347b</code></a> restore removed APIs as deprecated</li> <li>Additional commits viewable in <a href="https://github.com/rust-lang/log/compare/0.4.20...0.4.21">compare view</a></li> </ul> </details> <br /> Updates `syn` from 2.0.50 to 2.0.52 <details> <summary>Release notes</summary> <p><em>Sourced from <a href="https://github.com/dtolnay/syn/releases">syn's releases</a>.</em></p> <blockquote> <h2>2.0.52</h2> <ul> <li>Add an expression parser that uses match-arm's boundary rules (<a href="https://redirect.github.com/dtolnay/syn/issues/1593">#1593</a>)</li> </ul> <h2>2.0.51</h2> <ul> <li>Resolve non_local_definitions warnings in generated code under rustc 1.78-nightly</li> </ul> </blockquote> </details> <details> <summary>Commits</summary> <ul> <li><a href="https://github.com/dtolnay/syn/commit/07ede6a6b31adeb3a18899ada1f352f63b3a36b9"><code>07ede6a</code></a> Release 2.0.52</li> <li><a href="https://github.com/dtolnay/syn/commit/acbcfbc8c113fa1603469c9ad329d061ee74662e"><code>acbcfbc</code></a> Merge pull request <a href="https://redirect.github.com/dtolnay/syn/issues/1593">#1593</a> from dtolnay/boundary</li> <li><a href="https://github.com/dtolnay/syn/commit/4924a993dce23abe65128ac318dd662d1e2ceef2"><code>4924a99</code></a> Add an expression parser that uses match-arm's boundary rules</li> <li><a href="https://github.com/dtolnay/syn/commit/e06122bf2cfd31bd7f70304694477dd292fe7e1e"><code>e06122b</code></a> Resolve unnecessary_get_then_check clippy lint</li> <li><a href="https://github.com/dtolnay/syn/commit/018fc5a6298491525387910cb359a9ec618abe54"><code>018fc5a</code></a> Update test suite to nightly-2024-02-27</li> <li><a href="https://github.com/dtolnay/syn/commit/5e15a9b412cb1e2df481e3470e1be8defaee4495"><code>5e15a9b</code></a> Release 2.0.51</li> <li><a href="https://github.com/dtolnay/syn/commit/7e0d4e1f43a879078595f0a3876484a1920ab8f8"><code>7e0d4e1</code></a> Resolve non_local_definitions warning in debug impls</li> <li><a href="https://github.com/dtolnay/syn/commit/8667ad97c1d4e75ac1bb323fb5c7849269814145"><code>8667ad9</code></a> Ignore module_name_repetitions pedantic clippy lint in codegen</li> <li><a href="https://github.com/dtolnay/syn/commit/1fc32000e25bf8fda7371071073f91e012ddf808"><code>1fc3200</code></a> Update test suite to nightly-2024-02-26</li> <li><a href="https://github.com/dtolnay/syn/commit/07a2065576b27dcf0c104f56379cc446d2f3824b"><code>07a2065</code></a> Update test suite to nightly-2024-02-23</li> <li>See full diff in <a href="https://github.com/dtolnay/syn/compare/2.0.50...2.0.52">compare view</a></li> </ul> </details> <br /> Updates `clap` from 4.5.1 to 4.5.3 <details> <summary>Release notes</summary> <p><em>Sourced from <a href="https://github.com/clap-rs/clap/releases">clap's releases</a>.</em></p> <blockquote> <h2>v4.5.3</h2> <h2>[4.5.3] - 2024-03-15</h2> <h3>Internal</h3> <ul> <li><em>(derive)</em> Update <code>heck</code></li> </ul> <h2>v4.5.2</h2> <h2>[4.5.2] - 2024-03-06</h2> <h3>Fixes</h3> <ul> <li><em>(macros)</em> Silence a warning</li> </ul> </blockquote> </details> <details> <summary>Changelog</summary> <p><em>Sourced from <a href="https://github.com/clap-rs/clap/blob/master/CHANGELOG.md">clap's changelog</a>.</em></p> <blockquote> <h2>[4.5.3] - 2024-03-15</h2> <h3>Internal</h3> <ul> <li><em>(derive)</em> Update <code>heck</code></li> </ul> <h2>[4.5.2] - 2024-03-06</h2> <h3>Fixes</h3> <ul> <li><em>(macros)</em> Silence a warning</li> </ul> </blockquote> </details> <details> <summary>Commits</summary> <ul> <li><a href="https://github.com/clap-rs/clap/commit/4e07b438584bb8a19e37599d4c5b11797bec5579"><code>4e07b43</code></a> chore: Release</li> <li><a href="https://github.com/clap-rs/clap/commit/8247c7ddf05d8023729ac180d8e8df260f1da5ff"><code>8247c7d</code></a> docs: Update changelog</li> <li><a href="https://github.com/clap-rs/clap/commit/677c52ce0870115845a4c42e204f6c049b81a1e7"><code>677c52c</code></a> chore: Update <code>heck</code> requirement (<a href="https://redirect.github.com/clap-rs/clap/issues/5396">#5396</a>)</li> <li><a href="https://github.com/clap-rs/clap/commit/f65d421607ba16c3175ffe76a20820f123b6c4cb"><code>f65d421</code></a> chore: Release</li> <li><a href="https://github.com/clap-rs/clap/commit/886b2729e419114bf42f1a92c66d346c81aa8f33"><code>886b272</code></a> docs: Update changelog</li> <li><a href="https://github.com/clap-rs/clap/commit/3ba429752fdb19b7a1c2e151c41d5141ad5b9295"><code>3ba4297</code></a> Merge pull request <a href="https://redirect.github.com/clap-rs/clap/issues/5386">#5386</a> from amaanq/static-var-name</li> <li><a href="https://github.com/clap-rs/clap/commit/2aea9504c4894b3bddf9cd4d2d6cba889307c157"><code>2aea950</code></a> fix: Use SCREAMING_SNAKE_CASE for static variable <code>authors</code></li> <li><a href="https://github.com/clap-rs/clap/commit/690f5557d7f25904c31ec9f2a3c3657cbb68c98e"><code>690f555</code></a> Merge pull request <a href="https://redirect.github.com/clap-rs/clap/issues/5382">#5382</a> from clap-rs/renovate/pre-commit-action-3.x</li> <li><a href="https://github.com/clap-rs/clap/commit/a2aa644368ec19026b16b870ec32dc57b325ba9b"><code>a2aa644</code></a> chore(deps): update compatible (dev) (<a href="https://redirect.github.com/clap-rs/clap/issues/5381">#5381</a>)</li> <li><a href="https://github.com/clap-rs/clap/commit/c233de53c0cca4281f444cf16d16d161bc9c3cab"><code>c233de5</code></a> chore(deps): update pre-commit/action action to v3.0.1</li> <li>Additional commits viewable in <a href="https://github.com/clap-rs/clap/compare/clap_complete-v4.5.1...v4.5.3">compare view</a></li> </ul> </details> <br /> Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`. [//]: # (dependabot-automerge-start) [//]: # (dependabot-automerge-end) --- <details> <summary>Dependabot commands and options</summary> <br /> You can trigger Dependabot actions by commenting on this PR: - `@dependabot rebase` will rebase this PR - `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it - `@dependabot merge` will merge this PR after your CI passes on it - `@dependabot squash and merge` will squash and merge this PR after your CI passes on it - `@dependabot cancel merge` will cancel a previously requested merge and block automerging - `@dependabot reopen` will reopen this PR if it is closed - `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually - `@dependabot show <dependency name> ignore conditions` will show all of the ignore conditions of the specified dependency - `@dependabot ignore <dependency name> major version` will close this group update PR and stop Dependabot creating any more for the specific dependency's major version (unless you unignore this specific dependency's major version or upgrade to it yourself) - `@dependabot ignore <dependency name> minor version` will close this group update PR and stop Dependabot creating any more for the specific dependency's minor version (unless you unignore this specific dependency's minor version or upgrade to it yourself) - `@dependabot ignore <dependency name>` will close this group update PR and stop Dependabot creating any more for the specific dependency (unless you unignore this specific dependency or upgrade to it yourself) - `@dependabot unignore <dependency name>` will remove all of the ignore conditions of the specified dependency - `@dependabot unignore <dependency name> <ignore condition>` will remove the ignore condition of the specified dependency and ignore conditions </details> Signed-off-by:

dependabot[bot] <[email protected]> Co-authored-by:

dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

-

- Mar 15, 2024

-

-

PG Herveou authored

-

- Mar 14, 2024

-

-

Ignacio Palacios authored

Issues addressed in this PR: - Improve *Penpal* runtime: - Properly handled received assets. Previously, it treated `(1, Here)` as the local native currency, whereas it should be treated as a `ForeignAsset`. This wasn't a great example of standard Parachain behaviour, as no Parachain treats the system asset as the local currency. - Remove `AllowExplicitUnpaidExecutionFrom` the system. Again, this wasn't a great example of standard Parachain behaviour. - Move duplicated `ForeignAssetFeeAsExistentialDepositMultiplierFeeCharger` to `assets_common` crate. - Improve emulated tests: - Update *Penpal* tests to new runtime. - To simplify tests, register the reserve transferred, teleported, and system assets in *Penpal* and *AssetHub* genesis. This saves us from having to create the assets repeatedly for each test - Add missing test case: `reserve_transfer_assets_from_para_to_system_para`. - Cleanup. - Prevent integration tests crates imports from being re-exported, as they were polluting the `polkadot-sdk` docs. There is still a test case missing for reserve transfers: - Reserve transfer of system asset from *Parachain* to *Parachain* trough *AssetHub*. - This is not yet possible with `pallet-xcm` due to the reasons explained in https://github.com/paritytech/polkadot-sdk/pull/3339 --------- Co-authored-by: command-bot <>

-

- Mar 13, 2024

-

-

georgepisaltu authored

Revert "FRAME: Create `TransactionExtension` as a replacement for `SignedExtension` (#2280)" (#3665) This PR reverts #2280 which introduced `TransactionExtension` to replace `SignedExtension`. As a result of the discussion [here](https://github.com/paritytech/polkadot-sdk/pull/3623#issuecomment-1986789700), the changes will be reverted for now with plans to reintroduce the concept in the future. --------- Signed-off-by:georgepisaltu <[email protected]>

-