- Jul 17, 2024

Egor_P authored

This PR adjusts the node release pipelines so that they can be used to trigger release actions based on the `stable` branch. Previously, the whole pipeline, from [creation of the `rc-tag`](https://github.com/paritytech/polkadot-sdk/blob/master/.github/workflows/release-10_rc-automation.yml) (v1.15.0-rc1, v1.15.0-rc2, etc.) to [the release draft creation](https://github.com/paritytech/polkadot-sdk/blob/master/.github/workflows/release-30_publish_release_draft.yml), was triggered on push to the node release branch. Because the node release branch and the crates release branch were separate, this worked fine. Now that we are switching to a single-branch approach, I would like to keep things simple for the first iteration and see how the new release process works with both parts (crates and node) made from one branch.

Changes made:
- The first step in the pipeline (rc-tag creation) is triggered manually instead of on push to the branch.
- The tag version is set manually from the input instead of being taken from the branch name.
- The Docker image is additionally tagged as `stable`.

Closes: https://github.com/paritytech/release-engineering/issues/214
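Since the tag version now comes from a manual input rather than from the branch name, it is worth validating that input before tagging. A minimal sketch, assuming the `vMAJOR.MINOR.PATCH-rcN` format of the example tags (v1.15.0-rc1, v1.15.0-rc2); `RcTag` and `parse_rc_tag` are hypothetical helpers, not part of the release workflows.

```rust
/// Parsed form of a release-candidate tag such as `v1.15.0-rc1`.
#[derive(Debug, PartialEq)]
pub struct RcTag {
    pub major: u32,
    pub minor: u32,
    pub patch: u32,
    pub rc: u32,
}

/// Hypothetical validator for a manually entered rc-tag.
/// Returns `None` if the input does not match `vMAJOR.MINOR.PATCH-rcN`.
pub fn parse_rc_tag(input: &str) -> Option<RcTag> {
    let rest = input.trim().strip_prefix('v')?;
    let (version, rc) = rest.split_once("-rc")?;
    let mut parts = version.split('.');
    let major = parts.next()?.parse().ok()?;
    let minor = parts.next()?.parse().ok()?;
    let patch = parts.next()?.parse().ok()?;
    if parts.next().is_some() {
        return None; // more than three version components
    }
    let rc = rc.parse().ok()?;
    Some(RcTag { major, minor, patch, rc })
}
```

Rejecting malformed input up front means a typo in the manual input fails the first pipeline step instead of producing a wrongly named tag.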

-

Alin Dima authored

Resolves https://github.com/paritytech/polkadot-sdk/issues/4468. Gives parachain teams instructions on how to enable the elastic scaling MVP. This is still a draft because it depends on further changes we make to the slot-based collator: https://github.com/paritytech/polkadot-sdk/pull/4097. Parachains cannot use this yet because the collator has not been released and no relay chain network has been configured for elastic scaling yet.

-

- Jul 16, 2024

Andrei Eres authored

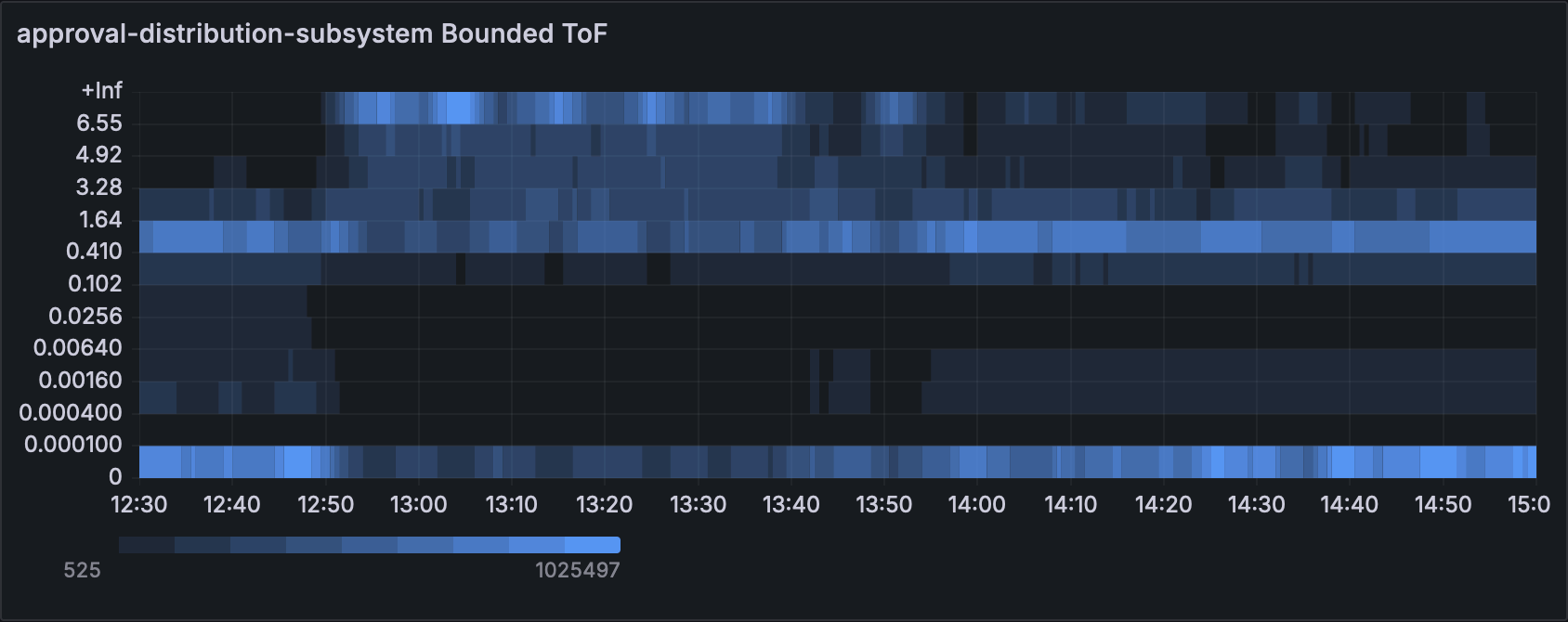

Closes https://github.com/paritytech/polkadot-sdk/issues/577

### Changed
- `orchestra` updated to 0.4.0.
- `PeerViewChange` is sent with high priority and should be processed first in the queue.
- To count these messages in tests, a tracker was added to `TestSender` and `TestOverseer`. It acts more like a smoke test, though.

### Testing on Versi
The changes were tested on Versi with two objectives:
1. Make sure the node functionality does not change.
2. See how the changes affect performance.

Test setup:
- 2.5 hours for each case
- 100 validators
- 50 parachains
- validatorsPerCore = 2
- neededApprovals = 100
- nDelayTranches = 89
- relayVrfModuloSamples = 50

During the test period, all nodes ran without any crashes, which satisfies the first objective. To estimate the change in performance, we used ToF charts. The graphs show that the spikes previously seen at the top of the charts are gone, which supports our hypothesis.

### Normalized charts with ToF
- [Before](https://grafana.teleport.parity.io/goto/ZoR53ClSg?orgId=1)
- [After](https://grafana.teleport.parity.io/goto/6ux5qC_IR?orgId=1)

### Conclusion
The prioritization of subsystem messages reduces the ToF of the networking subsystem, which helps gossip messages propagate faster.
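The prioritization can be pictured as two FIFO queues, where the consumer always drains high-priority messages such as `PeerViewChange` before normal ones. A minimal sketch using only the standard library; `PriorityQueue` and `Msg` are hypothetical and do not reflect the `orchestra` 0.4.0 API.

```rust
use std::collections::VecDeque;

/// Hypothetical message type; only the `PeerViewChange` name mirrors the PR.
#[derive(Debug, PartialEq)]
pub enum Msg {
    PeerViewChange(u32),
    Other(u32),
}

/// Two FIFO queues: high-priority messages are always popped first.
#[derive(Default)]
pub struct PriorityQueue {
    high: VecDeque<Msg>,
    normal: VecDeque<Msg>,
}

impl PriorityQueue {
    /// Routes each message to its queue based on its kind.
    pub fn push(&mut self, msg: Msg) {
        match &msg {
            Msg::PeerViewChange(_) => self.high.push_back(msg),
            Msg::Other(_) => self.normal.push_back(msg),
        }
    }

    /// Returns the next message, preferring the high-priority queue.
    pub fn pop(&mut self) -> Option<Msg> {
        self.high.pop_front().or_else(|| self.normal.pop_front())
    }
}
```

Within each priority level, ordering is still first-in, first-out, so only the relative order between the two classes changes.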

-

Alexander Samusev authored

cc https://github.com/paritytech/ci_cd/issues/939

-

Andrei Eres authored

A baseline for the statement-distribution regression test was set only at the beginning, and now we see that the actual values are a bit lower. <img width="1001" alt="image" src="https://github.com/user-attachments/assets/40b06eec-e38f-43ad-b437-89eca502aa66"> [Source](https://paritytech.github.io/polkadot-sdk/bench/statement-distribution-regression-bench)

-

Sebastian Miasojed authored

Introduce transient storage, which behaves identically to regular storage but is kept only in memory and discarded after every transaction. This functionality is similar to the `TSTORE` and `TLOAD` operations used in Ethereum.

The following new host functions have been introduced:
- `get_transient_storage`
- `set_transient_storage`
- `take_transient_storage`
- `clear_transient_storage`
- `contains_transient_storage`

Note: These functions are declared as `unstable` and thus are not activated.

--------- Co-authored-by: command-bot <> Co-authored-by: PG Herveou <pgherveou@gmail.com> Co-authored-by: Alexander Theißen <alex.theissen@me.com>
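The semantics described in this commit (storage that behaves like regular storage but lives only in memory and is discarded after each transaction) can be modelled as a map that is cleared at the transaction boundary. A minimal sketch; `TransientStorage` and `end_transaction` are hypothetical names, and the actual host functions listed above are not implemented as this struct.

```rust
use std::collections::HashMap;

/// In-memory key/value store that is wiped at the end of every transaction.
#[derive(Default)]
pub struct TransientStorage {
    entries: HashMap<Vec<u8>, Vec<u8>>,
}

impl TransientStorage {
    /// Counterpart of `set_transient_storage`: returns the previous value, if any.
    pub fn set(&mut self, key: &[u8], value: Vec<u8>) -> Option<Vec<u8>> {
        self.entries.insert(key.to_vec(), value)
    }

    /// Counterpart of `get_transient_storage`.
    pub fn get(&self, key: &[u8]) -> Option<&Vec<u8>> {
        self.entries.get(key)
    }

    /// Counterpart of `take_transient_storage`: read and remove in one step.
    pub fn take(&mut self, key: &[u8]) -> Option<Vec<u8>> {
        self.entries.remove(key)
    }

    /// Counterpart of `clear_transient_storage`.
    pub fn clear(&mut self, key: &[u8]) {
        self.entries.remove(key);
    }

    /// Counterpart of `contains_transient_storage`.
    pub fn contains(&self, key: &[u8]) -> bool {
        self.entries.contains_key(key)
    }

    /// Called at the transaction boundary: nothing survives into the next one.
    pub fn end_transaction(&mut self) {
        self.entries.clear();
    }
}
```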

-

Alexandru Gheorghe authored

This is part of the work to further optimize the approval subsystems. If you want to understand the full context, start by reading https://github.com/paritytech/polkadot-sdk/pull/4849#issue-2364261568; however, that's not necessary, as this change is self-contained and nodes would benefit from it regardless of whether subsequent changes land.

While testing with 1000 validators, I found that the logic for determining the validators an assignment should be gossiped to takes a lot of time, because it always iterated through all the peers to determine which are X and Y neighbours and to which we should randomly gossip (4 samples). This can be optimised so that we don't have to iterate through all peers for each new assignment: we fetch the list of X and Y peer ids from the topology first and then stop the loop once we have taken the 4 random samples. With these improvements, we reduce the total CPU time spent in approval-distribution by 15% on networks with 500 validators and by 20% on networks with 1000 validators.

## Test coverage:
`propagates_assignments_along_unshared_dimension` and `propagates_locally_generated_assignment_to_both_dimensions` already cover this logic and they pass, confirming that there is no breaking change. Additionally, the approval voting benchmark measures the traffic sent to other peers, so I confirmed that for various network sizes there is no difference in the size of the traffic sent to other peers.

--------- Signed-off-by: Alexandru Gheorghe <alexandru.gheorghe@parity.io>
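The optimisation can be sketched as follows: X and Y neighbours are taken directly from the topology, and the loop over peers for random gossip stops as soon as the required number of samples has been taken. This is a simplified, hypothetical function, not the approval-distribution code; in particular, it assumes the peer list is already in random order, so taking the first eligible peers stands in for random sampling.

```rust
use std::collections::HashSet;

/// Hypothetical peer identifier; the real subsystem uses network peer IDs.
pub type PeerId = u32;

/// Picks gossip targets for an assignment: all X and Y grid neighbours plus
/// up to `random_samples` other peers from `peers` (assumed pre-shuffled).
pub fn gossip_targets(
    x_neighbours: &[PeerId],
    y_neighbours: &[PeerId],
    peers: &[PeerId],
    random_samples: usize,
) -> Vec<PeerId> {
    let mut chosen: HashSet<PeerId> = HashSet::new();
    let mut targets = Vec::new();
    // Neighbours come straight from the topology; no scan over all peers.
    for &peer in x_neighbours.iter().chain(y_neighbours) {
        if chosen.insert(peer) {
            targets.push(peer);
        }
    }
    // Random samples: stop as soon as enough peers have been taken instead
    // of iterating through every connected peer.
    let mut taken = 0;
    for &peer in peers {
        if taken == random_samples {
            break;
        }
        if chosen.insert(peer) {
            targets.push(peer);
            taken += 1;
        }
    }
    targets
}
```

With `random_samples = 4`, the second loop touches only as many peers as needed to find 4 that are not already neighbours, rather than all of them.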

-

Parth Mittal authored

As per #3326, removes usage of the `pallet::getter` macro from the `session` pallet. The syntax `StorageItem::<T, I>::get()` should be used instead. It also adds public functions for compatibility. NOTE: The `./historical` directory has not been modified. cc @muraca polkadot address: 5GsLutpKjbzsbTphebs9Uy4YK6gTN47MAaz6njPktidjR5cp --------- Co-authored-by: Kian Paimani <5588131+kianenigma@users.noreply.github.com> Co-authored-by: Bastian Köcher <git@kchr.de>
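A plain-Rust mock of the migration pattern, assuming a thread-local in place of real runtime storage: the storage type is read directly with `get()`, and a public function on the pallet keeps the old accessor name for compatibility. `Validators`, `Pallet::validators` and the storage layout are illustrative only; the real FRAME storage items also carry an instance parameter `I`, which is omitted here.

```rust
use std::cell::RefCell;
use std::marker::PhantomData;

thread_local! {
    // Stand-in for on-chain storage; FRAME reads from the runtime state instead.
    static VALIDATORS: RefCell<Vec<u64>> = RefCell::new(Vec::new());
}

/// Mock storage item type, read with `Validators::<T>::get()`.
pub struct Validators<T>(PhantomData<T>);

impl<T> Validators<T> {
    pub fn get() -> Vec<u64> {
        VALIDATORS.with(|v| v.borrow().clone())
    }

    pub fn put(value: Vec<u64>) {
        VALIDATORS.with(|v| *v.borrow_mut() = value);
    }
}

/// Mock pallet type.
pub struct Pallet<T>(PhantomData<T>);

impl<T> Pallet<T> {
    /// Public compatibility function replacing the accessor that
    /// `#[pallet::getter(fn validators)]` used to generate.
    pub fn validators() -> Vec<u64> {
        Validators::<T>::get()
    }
}
```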

-

Javyer authored

This will ensure that malicious code cannot access other parts of the project. Co-authored-by: Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io> Co-authored-by: Bastian Köcher <git@kchr.de>

-

Alexandru Vasile authored

The `DhtEvent::ValuePut` event was not propagated back to the higher levels. This PR ensures we send the `ValuePut` event, similarly to `DhtEvent::ValuePutFailed`.

### Next Steps
- [ ] A bit more testing

Thanks @alexggh for catching this

Alexandru Vasile <alexandru.vasile@parity.io>

-

- Jul 15, 2024

Niklas Adolfsson authored

Co-authored-by: Bastian Köcher <git@kchr.de>

-

Branislav Kontur authored

## Testing Both Bridges zombienet tests passed, e.g.: https://gitlab.parity.io/parity/mirrors/polkadot-sdk/-/jobs/6698640 https://gitlab.parity.io/parity/mirrors/polkadot-sdk/-/jobs/6698641 https://gitlab.parity.io/parity/mirrors/polkadot-sdk/-/jobs/6700072 https://gitlab.parity.io/parity/mirrors/polkadot-sdk/-/jobs/6700073

-

Jun Jiang authored

This should remove nearly all usage of `sp-std` except:
- bridge and bridge-hubs
- a few FRAME crates that re-export `sp-std`; keep them for now
- a usage of `sp_std::Writer`, which I don't yet know how to migrate

Please review the proc-macro changes carefully; I'm not sure I'm doing it the right way. Note: needs `/bot fmt` --------- Co-authored-by: Bastian Köcher <git@kchr.de> Co-authored-by: command-bot <>
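In practice, removing `sp-std` mostly means importing from `core` and `alloc` directly instead of through the `sp_std` re-exports. A minimal before/after sketch; `running_max` is a hypothetical helper, and a real runtime crate would additionally be marked `no_std` when its `std` feature is off.

```rust
// Before (via the `sp-std` re-exports):
//     use sp_std::{cmp::max, vec::Vec};
// After: `alloc` and `core` are used directly, which works in both
// `std` and `no_std` builds.
extern crate alloc;

use alloc::vec::Vec;
use core::cmp::max;

/// Hypothetical helper: running maximum of a slice.
pub fn running_max(values: &[u32]) -> Vec<u32> {
    let mut out = Vec::with_capacity(values.len());
    let mut current = 0;
    for &v in values {
        current = max(current, v);
        out.push(current);
    }
    out
}
```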

-

Jun Jiang authored

It says `Will be removed after July 2023` but that's not true

Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io> Co-authored-by: Bastian Köcher <git@kchr.de>

-

Alexandru Vasile authored

A malicious peer can submit random bytes on the transaction protocol. In this case, the peer was neither disconnected nor reported back to the peerstore. This PR ensures the peer's reputation is properly reported. Discovered during testing of https://github.com/paritytech/polkadot-sdk/pull/4977. cc @paritytech/networking Signed-off-by: Alexandru Vasile <alexandru.vasile@parity.io>
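The fix can be pictured as follows: when bytes received on the transaction protocol fail to decode, the peer is reported with a negative reputation change instead of the message being silently dropped. A minimal sketch; the wire format in `decode_transactions` (one count byte followed by that many 4-byte items), the `Report` type and the cost value are all hypothetical.

```rust
/// Hypothetical peer identifier.
pub type PeerId = u32;

/// Hypothetical reputation report sent to the peerstore.
#[derive(Debug, PartialEq)]
pub struct Report {
    pub peer: PeerId,
    pub change: i32,
    pub reason: &'static str,
}

/// Hypothetical cost applied to peers that send undecodable data.
pub const BAD_TRANSACTION_COST: i32 = -(1 << 12);

/// Toy wire format: one count byte followed by that many 4-byte items.
fn decode_transactions(bytes: &[u8]) -> Option<Vec<[u8; 4]>> {
    let (&count, rest) = bytes.split_first()?;
    if rest.len() != count as usize * 4 {
        return None;
    }
    Some(rest.chunks_exact(4).map(|c| [c[0], c[1], c[2], c[3]]).collect())
}

/// Handles an incoming notification; on decode failure the peer is
/// reported instead of being ignored.
pub fn on_notification(
    peer: PeerId,
    bytes: &[u8],
    reports: &mut Vec<Report>,
) -> Option<Vec<[u8; 4]>> {
    match decode_transactions(bytes) {
        Some(txs) => Some(txs),
        None => {
            reports.push(Report {
                peer,
                change: BAD_TRANSACTION_COST,
                reason: "bad transaction data",
            });
            None
        }
    }
}
```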

-

- Jul 12, 2024

dharjeezy authored

Part of: https://github.com/paritytech/polkadot-sdk/issues/239 Polkadot address: 12GyGD3QhT4i2JJpNzvMf96sxxBLWymz4RdGCxRH5Rj5agKW --------- Signed-off-by: Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io> Co-authored-by: Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io> Co-authored-by: Bastian Köcher <git@kchr.de>

-

Andrei Eres authored

Part of https://github.com/paritytech/polkadot-sdk/issues/4334

-

Javyer authored

Added the `sync` command. Follow-up on #4701. This PR is blocked until a new machine for the sync is deployed.

-

Branislav Kontur authored

## Summary
This PR contains code migrated from the Bridges V2 [branch](https://github.com/paritytech/polkadot-sdk/pull/4427) of the old `parity-bridges-common` [repo](https://github.com/paritytech/parity-bridges-common/tree/bridges-v2). Even though the PR looks large, it does not (or should not) contain any significant changes (and is not relevant for audit). This PR is a requirement for permissionless lanes, as they were implemented on top of these changes.

## TODO
- [x] generate fresh weights for BridgeHubs
- [x] run `polkadot-fellows` bridges zombienet tests with actual runtime 1.2.5 or 1.2.6 to check compatibility

Branislav Kontur <bkontur@gmail.com> Co-authored-by: Serban Iorga <serban@parity.io> Co-authored-by: Svyatoslav Nikolsky <svyatonik@gmail.com> Co-authored-by: command-bot <>

-

Bastian Köcher authored

This improves logging in the xcm-executor for better debuggability when executing an XCM message.

-

- Jul 11, 2024

Jun Jiang authored

Follow-up PR to https://github.com/paritytech/polkadot-sdk/pull/4941 that removes usage of `sp-std` from the templates. Deprecation of the `sp-std` crate was proposed in https://github.com/paritytech/polkadot-sdk/issues/2101 @Kianenigma --------- Co-authored-by: Kian Paimani <5588131+kianenigma@users.noreply.github.com>

-

Javyer authored

Fixed the mentioned issue: https://github.com/paritytech/command-bot/issues/113#issuecomment-2222277552 Now it will properly comment when the old bot gets triggered.

-

- Jul 10, 2024

Kian Paimani authored

Explains one of the annoying parts of FRAME storage that we have seen everyone get stuck on multiple times at PBA. I have not updated the other two templates for now and only reflected it in the parachain template; that can happen in a follow-up. - [x] Update possible answers in SE about the same topic. --------- Co-authored-by: Serban Iorga <serban@parity.io> Co-authored-by: command-bot <>

-

James Wilson authored

Enabling this feature when building the `polkadot` crate also enables it for the built-in westend and rococo runtimes. As a result, a merkleized metadata hash will be computed (at some time cost) in those runtimes, which allows transactions that include a hash via the `CheckMetadataHash` extension to work. The idea is that this is useful for testing and experimenting with the `CheckMetadataHash` extension against local nodes. --------- Co-authored-by: command-bot <> Co-authored-by: Bastian Köcher <git@kchr.de>

-

- Jul 09, 2024

Francisco Aguirre authored

It was added to v4 and v3 but was missing from v2

-

Alin Dima authored

The update is tracked by: https://github.com/paritytech/polkadot-sdk/issues/3699 However, this is not worth doing at this point since it will change in the future for phase 2 of the implementation. Still, it's useful to let people know that the information is not the most up to date.

-

Serban Iorga authored

`polkadot-parachain` simplifications and deduplications. Details are in the commit messages. Copy-pasting the last commit description here, since it introduces the biggest changes:

```
Implement a more structured way to define a node spec

- use traits instead of bounds for `rpc_ext_builder()`, `build_import_queue()`, `start_consensus()`
- add a `NodeSpec` trait for defining the specifications of a node
- deduplicate the code related to building a node's components / starting a node
```

The other changes are much smaller, most of them trivial, and are isolated in separate commits.
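The `NodeSpec` idea, where per-node specifics are expressed through a trait and the shared start-up path is written once against that trait, can be sketched as below. The method names follow the commit message, but their signatures, the `Components` type, `start_node` and `AuraNode` are hypothetical simplifications; the real trait in `polkadot-parachain` is generic over runtime and client types.

```rust
/// Hypothetical bundle of components built for a node (here just a log).
#[derive(Debug, Default)]
pub struct Components {
    pub log: Vec<String>,
}

/// Specification of a node: each node type fills in only what differs.
pub trait NodeSpec {
    fn name(&self) -> &'static str;
    fn build_import_queue(&self, c: &mut Components);
    fn start_consensus(&self, c: &mut Components);
    /// RPC extensions; most nodes can rely on this default.
    fn rpc_ext_builder(&self, c: &mut Components) {
        c.log.push("default rpc".to_string());
    }
}

/// Shared start-up path, written once instead of duplicated per node type.
pub fn start_node(spec: &impl NodeSpec) -> Components {
    let mut c = Components::default();
    c.log.push(format!("starting {}", spec.name()));
    spec.build_import_queue(&mut c);
    spec.rpc_ext_builder(&mut c);
    spec.start_consensus(&mut c);
    c
}

/// Example spec for a hypothetical Aura-based parachain node.
pub struct AuraNode;

impl NodeSpec for AuraNode {
    fn name(&self) -> &'static str {
        "aura-node"
    }
    fn build_import_queue(&self, c: &mut Components) {
        c.log.push("aura import queue".into());
    }
    fn start_consensus(&self, c: &mut Components) {
        c.log.push("aura consensus".into());
    }
}
```

Adding a new node type then means implementing the trait, while the ordering of start-up steps stays in one place.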

Radha authored

Some of the commands needed updating for a seamless "newbie" experience with the Polkadot SDK templates.

-

Or Grinberg authored

Fixes #3770. Added `clear_origin` as an allowed instruction after instructions that load the holding register, in the safe XCM builder.

Checklist
- [x] My PR includes a detailed description as outlined in the "Description" section above
- [x] My PR follows the [labeling requirements](https://github.com/paritytech/polkadot-sdk/blob/master/docs/contributor/CONTRIBUTING.md#Process) of this project (at minimum one label for T required)
- [x] I have made corresponding changes to the documentation (if applicable)
- [x] I have added tests that prove my fix is effective or that my feature works (if applicable)

--------- Co-authored-by: Francisco Aguirre <franciscoaguirreperez@gmail.com> Co-authored-by: gupnik <mail.guptanikhil@gmail.com>
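The rule being relaxed is a state-machine constraint: in the safe builder, some instructions only become available once the holding register has been loaded. A minimal typestate sketch of that idea, assuming a reduced instruction set; the types and method names here are hypothetical and only loosely modelled on the XCM builder.

```rust
use std::marker::PhantomData;

/// Builder states (hypothetical).
pub struct Start;
pub struct LoadedHolding;
pub struct ExecutionPaid;

pub struct Builder<S> {
    instructions: Vec<&'static str>,
    _state: PhantomData<S>,
}

impl<S> Builder<S> {
    /// Appends an instruction and moves the builder into state `N`.
    fn push<N>(mut self, instruction: &'static str) -> Builder<N> {
        self.instructions.push(instruction);
        Builder { instructions: self.instructions, _state: PhantomData }
    }
}

impl Builder<Start> {
    pub fn new() -> Self {
        Builder { instructions: Vec::new(), _state: PhantomData }
    }
    /// Loads the holding register.
    pub fn withdraw_asset(self) -> Builder<LoadedHolding> {
        self.push("WithdrawAsset")
    }
}

impl Builder<LoadedHolding> {
    /// Allowed here: clearing the origin leaves the holding register intact.
    pub fn clear_origin(self) -> Builder<LoadedHolding> {
        self.push("ClearOrigin")
    }
    pub fn buy_execution(self) -> Builder<ExecutionPaid> {
        self.push("BuyExecution")
    }
}

impl Builder<ExecutionPaid> {
    pub fn deposit_asset(self) -> Builder<ExecutionPaid> {
        self.push("DepositAsset")
    }
    pub fn build(self) -> Vec<&'static str> {
        self.instructions
    }
}
```

Because `clear_origin` is only defined for `Builder<LoadedHolding>`, calling it before any holding-loading instruction is a compile-time error rather than a runtime one.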

-

- Jul 08, 2024

Alexandru Vasile authored

This PR updates the time of a peer's last reputation update whenever the peer is reported. Doing so ensures the peer remains in the peerstore for longer than 1 hour. Libp2p updates the `last_updated` field as well.

Small summary for the peerstore:
- A: when peers are reported, the `last_updated` time is set to the current time (not done before this PR)
- B: peers that were not updated for 1 hour are removed from the peerstore
- the reputation of the peers decays to zero over time
- peers are reported with a reputation change (positive or negative depending on their behavior)

Because (A) was not updating the `last_updated` time, we could lose the reputation of peers that are constantly reported once 1 hour had passed, because of (B).

cc @paritytech/networking Signed-off-by: Alexandru Vasile <alexandru.vasile@parity.io>
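The interplay between (A) and (B) can be sketched with a small peerstore that refreshes `last_updated` on every report and prunes entries idle for more than an hour. Timestamps are plain seconds for determinism; `PeerStore`, `report` and `prune` are hypothetical, and reputation decay is omitted.

```rust
use std::collections::HashMap;

/// Hypothetical peer identifier.
pub type PeerId = u32;

/// Entries not updated for longer than this are removed (rule B).
pub const FORGET_AFTER_SECS: u64 = 3600;

struct PeerInfo {
    reputation: i32,
    last_updated: u64,
}

#[derive(Default)]
pub struct PeerStore {
    peers: HashMap<PeerId, PeerInfo>,
}

impl PeerStore {
    /// Applies a reputation change and, as in rule A, refreshes `last_updated`.
    pub fn report(&mut self, peer: PeerId, change: i32, now: u64) {
        let info = self
            .peers
            .entry(peer)
            .or_insert(PeerInfo { reputation: 0, last_updated: now });
        info.reputation = info.reputation.saturating_add(change);
        info.last_updated = now;
    }

    /// Rule B: forget peers that were not updated within the last hour.
    pub fn prune(&mut self, now: u64) {
        self.peers
            .retain(|_, info| now.saturating_sub(info.last_updated) <= FORGET_AFTER_SECS);
    }

    pub fn reputation(&self, peer: PeerId) -> Option<i32> {
        self.peers.get(&peer).map(|info| info.reputation)
    }
}
```

Without the `info.last_updated = now` line in `report`, a peer first seen at time 0 would be pruned after an hour even if it had been reported moments before, losing its accumulated reputation.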

-

Bastian Köcher authored

Co-authored-by: command-bot <>

-

Bastian Köcher authored

We sadly cannot remove the `_macro_name`, but this way we at least show the correct macro plus docs.

-

Egor_P authored

This PR backports regular version bumps and prdocs reordering from the 1.14.0 release branch to master

-

- Jul 07, 2024

Muharem Ismailov authored

Functions `can_decrease` and `can_increase` do not return successful consequence results for assets undergoing destruction; instead, they return the `UnknownAsset` consequence variant. This update aligns their behavior with similar functions, such as `reducible_balance`, `increase_balance`, `decrease_balance`, and `burn`, which return an `AssetNotLive` error for assets in the process of being destroyed.
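The behavioural change amounts to checking the asset's status before any balance logic runs. A minimal sketch, assuming a reduced status enum and ignoring the amount, minimum-balance and overflow checks the real functions perform; the variant names echo the `fungibles` consequence types, but these definitions are standalone stand-ins.

```rust
/// Reduced asset status: only the distinction that matters here.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum AssetStatus {
    Live,
    Destroying,
}

/// Stand-in for the deposit outcome type (two variants modelled).
#[derive(Debug, PartialEq)]
pub enum DepositConsequence {
    Success,
    UnknownAsset,
}

/// Stand-in for the withdrawal outcome type (two variants modelled).
#[derive(Debug, PartialEq)]
pub enum WithdrawConsequence {
    Success,
    UnknownAsset,
}

/// `None` means the asset does not exist; an asset being destroyed is
/// treated the same way, in line with functions such as `burn` that
/// reject it with `AssetNotLive`.
pub fn can_increase(status: Option<AssetStatus>) -> DepositConsequence {
    match status {
        Some(AssetStatus::Live) => DepositConsequence::Success,
        None | Some(AssetStatus::Destroying) => DepositConsequence::UnknownAsset,
    }
}

pub fn can_decrease(status: Option<AssetStatus>) -> WithdrawConsequence {
    match status {
        Some(AssetStatus::Live) => WithdrawConsequence::Success,
        None | Some(AssetStatus::Destroying) => WithdrawConsequence::UnknownAsset,
    }
}
```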

-

- Jul 06, 2024

Deepak Chaudhary authored

### ISSUE
Link to the issue: https://github.com/paritytech/polkadot-sdk/issues/3326 cc @muraca

Deliverables
- [Deprecation] remove pallet::getter usage from pallet-babe

### Test Outcomes
Successful tests by running `cargo test -p pallet-babe --features runtime-benchmarks`: 32 tests ran, with `test result: ok. 32 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.20s`. During the run, one `runtime::storage` "Corrupted state" error (could not decode `NextConfigDescriptor`) and repeated `pallet_timestamp::UnixTime::now is called at genesis, invalid value returned: 0` errors were logged, while all tests still passed. Doc-tests for pallet-babe: 0 tests.

--- Polkadot Address: 16htXkeVhfroBhL6nuqiwknfXKcT6WadJPZqEi2jRf9z4XPY

-

Deepak Chaudhary authored

### ISSUE
Link to the issue: https://github.com/paritytech/polkadot-sdk/issues/3326 cc @muraca

Deliverables
- [Deprecation] remove pallet::getter usage from pallet-transaction-storage

### Test Outcomes
`cargo test -p pallet-transaction-storage --features runtime-benchmarks` ran 9 tests (including `benchmarking::bench_check_proof_max`, which ran for over 60 seconds), with `test result: ok. 9 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 72.57s`. Doc-tests for pallet-transaction-storage: 0 tests.

--- Polkadot Address: 16htXkeVhfroBhL6nuqiwknfXKcT6WadJPZqEi2jRf9z4XPY

-

Tomás Senovilla Polo authored

Hi! In the course of a talk with @shawntabrizi in Singapore, we realized the documentation related to frozen balances was a little confusing. It stated that a frozen amount is released at some specified block number, which isn't true in general. This PR fixes that inaccuracy and further specifies that the frozen balance may exceed the available balance, according to what we learned at the PBA. As far as I know, this was not specified in the documentation before. This is the first time I have submitted something to the Polkadot SDK repo, so please feel free to rephrase the docs I added in case I messed up! --------- Co-authored-by: Shawn Tabrizi <shawntabrizi@gmail.com> Co-authored-by: command-bot <>
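A small numeric illustration of why "frozen may exceed available" matters, assuming a simplified model in which the spendable part of an account is its free balance minus the frozen amount (holds, reserves and the existential deposit are ignored): the subtraction must saturate at zero rather than underflow. `spendable` is a hypothetical helper, not a FRAME function.

```rust
/// Simplified model: what remains of the free balance after the frozen
/// amount is set aside. Because the frozen amount may exceed the free
/// balance, the result is floored at zero instead of underflowing.
pub fn spendable(free: u128, frozen: u128) -> u128 {
    free.saturating_sub(frozen)
}
```

For example, with 50 units free and 80 frozen, nothing is spendable, and the 30-unit excess simply means the freeze is not yet fully "covered" by the account.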

-

Sam Johnson authored

Release notes: https://github.com/sam0x17/macro_magic/releases/tag/v0.5.1. Some performance improvements, plus an upgrade to `derive-syn-parse` 2.0, which means this crate is now fully upgraded across the polkadot-sdk workspace.

-

- Jul 05, 2024

Nazar Mokrynskyi authored

This PR largely fixes https://github.com/paritytech/polkadot-sdk/issues/4903 by addressing it from a few different directions. The high-level observation is that the complexity of finalization was unfortunately roughly `O(n^3)`. Not only was `displaced_leaves_after_finalizing` extremely inefficient on its own, especially when large ranges of blocks were involved, it was also called once upfront and then again for every single block that was finalized.

The first commit refactors code adjacent to `displaced_leaves_after_finalizing` to optimize memory allocations. For example, structures like `BTreeMap<_, Vec<_>>` were very bad in terms of the number of allocations and, after analyzing the code paths, turned out to be completely unnecessary, so they were replaced with `Vec<(_, _)>`. In other places, allocations of known size were not done upfront, and some APIs required unnecessary cloning of vectors. I checked the invariants and didn't find anything that was violated after refactoring.

The second commit completely replaces the `displaced_leaves_after_finalizing` implementation with a much more efficient one. In my case, with ~82k blocks and ~13k leaves, `client.apply_finality()` now takes ~5.4s to finish. The idea is to avoid querying the same blocks over and over again, and to introduce a temporary local cache for blocks related to leaves above the block being finalized, as well as a local cache of the finalized branch of the chain. I left some comments in the code and wrote tests that I believe should check all code invariants for correctness. `lowest_common_ancestor_multiblock` was removed as an unnecessary API that was not great in terms of performance; domain-specific code should be written instead, as done in `displaced_leaves_after_finalizing`.

After these changes I noticed finalization was still horribly slow. It turned out that even though `displaced_leaves_after_finalizing` was way faster than before (probably by an order of magnitude), it was called for every single one of those 82k blocks

Sebastian Kunert <skunert49@gmail.com>
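The core idea of avoiding repeated queries for the same blocks can be illustrated with a memoized ancestry walk: a leaf is displaced if it lies below the finalized height, or if its ancestor at the finalized height is not the finalized block. A simplified sketch over an in-memory header map; `Header`, `displaced_leaves` and the cache layout are hypothetical and not the actual implementation.

```rust
use std::collections::HashMap;

/// Hypothetical block hash type; real code uses 32-byte hashes.
pub type Hash = u32;

/// Minimal header: block number and parent hash.
#[derive(Clone, Copy)]
pub struct Header {
    pub number: u32,
    pub parent: Hash,
}

/// Returns the leaves that are no longer viable after finalizing `finalized`.
/// Each ancestry walk records its verdict for every block it visits, so later
/// leaves that share those ancestors stop early instead of re-querying them.
pub fn displaced_leaves(
    headers: &HashMap<Hash, Header>,
    leaves: &[Hash],
    finalized: Hash,
) -> Vec<Hash> {
    let finalized_number = headers[&finalized].number;
    // Cache: block hash -> whether the block is, or descends from, `finalized`.
    let mut on_finalized_branch: HashMap<Hash, bool> = HashMap::new();
    on_finalized_branch.insert(finalized, true);

    let mut displaced = Vec::new();
    for &leaf in leaves {
        // Leaves below the finalized height sit on abandoned forks.
        if headers[&leaf].number < finalized_number {
            displaced.push(leaf);
            continue;
        }
        let mut path = Vec::new();
        let mut current = leaf;
        let verdict = loop {
            if let Some(&known) = on_finalized_branch.get(&current) {
                break known;
            }
            path.push(current);
            let header = headers[&current];
            // Reached the finalized height at a block other than `finalized`.
            if header.number == finalized_number {
                break false;
            }
            current = header.parent;
        };
        for block in path {
            on_finalized_branch.insert(block, verdict);
        }
        if !verdict {
            displaced.push(leaf);
        }
    }
    displaced
}
```

Each block above the finalized height is walked at most once across all leaves, which is the property that matters when thousands of leaves share long common ancestries.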

-

Deepak Chaudhary authored

### ISSUE
Link to the issue: https://github.com/paritytech/polkadot-sdk/issues/3326 cc @muraca

Deliverables
- [Deprecation] remove pallet::getter usage from pallet-vesting

### Test Outcomes
Successful tests by running `cargo test -p pallet-vesting --features runtime-benchmarks`: 45 tests ran (benchmarks, mock integrity checks and the `tests::*` suite, including the `should panic` test `multiple_schedules_from_genesis_config_errors`), with `test result: ok. 45 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.10s`.

--- Polkadot Address: 16htXkeVhfroBhL6nuqiwknfXKcT6WadJPZqEi2jRf9z4XPY

-