From fd79b3b08a9bd8f57cd6183b84fd34705e83a7a0 Mon Sep 17 00:00:00 2001
From: Andrei Eres <eresav@me.com>
Date: Tue, 26 Mar 2024 16:51:47 +0100
Subject: [PATCH] [subsystem-benchmarks] Save results to json (#3829)

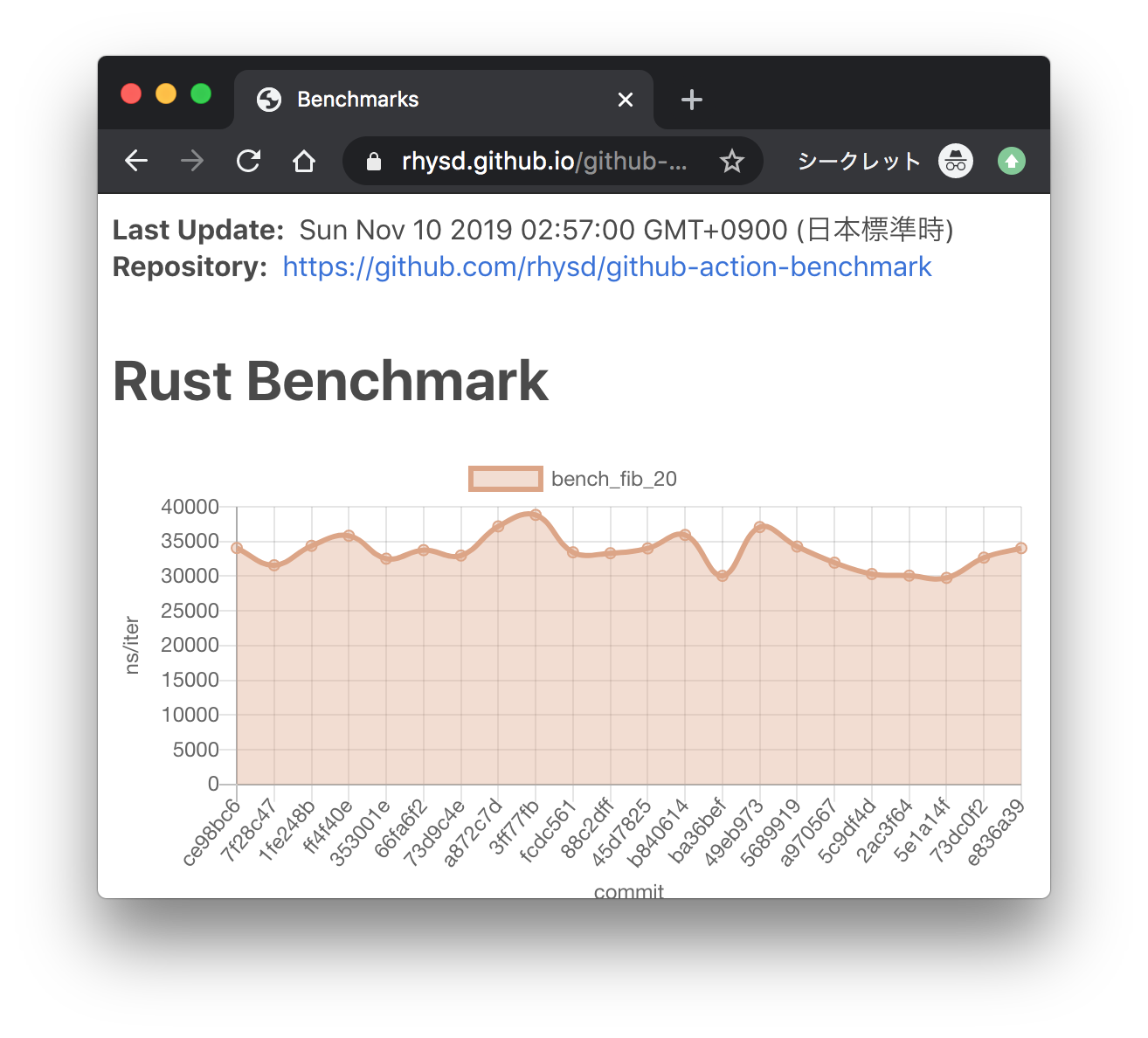

Here we add the ability to save subsystem benchmark results in JSON
format so they can be displayed as graphs.

To draw the graphs, the CI team will use
[github-action-benchmark](https://github.com/benchmark-action/github-action-benchmark).

Since we are using custom benchmarks, we need to prepare [a specific data
type](https://github.com/benchmark-action/github-action-benchmark?tab=readme-ov-file#examples):

```
[
    {
        "name": "CPU Load",
        "unit": "Percent",
        "value": 50
    }
]
```
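
The custom format is a JSON array of objects, each with a `name`, a `unit`
and a numeric `value`. The patch produces it with `serde_json`; purely to
illustrate the shape, here is a std-only sketch that builds the same array by
hand. The `ChartItem` fields mirror the struct added in `usage.rs`, while
`escape_json` and this free-standing `to_chart_json` are hypothetical helpers
for the sketch, not code from this patch:

```rust
/// One data point in the custom format expected by github-action-benchmark.
struct ChartItem {
    name: String,
    unit: String,
    value: f64,
}

/// Escapes `s` so it can be embedded in a JSON string literal.
fn escape_json(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters must be written as \uXXXX escapes.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Serializes the items as a JSON array of {"name", "unit", "value"} objects.
fn to_chart_json(items: &[ChartItem]) -> String {
    let objects: Vec<String> = items
        .iter()
        .map(|item| {
            // JSON has no NaN or Infinity, so non-finite values become null,
            // which is also what serde_json does.
            let value = if item.value.is_finite() {
                item.value.to_string()
            } else {
                "null".to_string()
            };
            format!(
                r#"{{"name":"{}","unit":"{}","value":{}}}"#,
                escape_json(&item.name),
                escape_json(&item.unit),
                value
            )
        })
        .collect();
    format!("[{}]", objects.join(","))
}

fn main() {
    let items = vec![ChartItem {
        name: "CPU Load".to_string(),
        unit: "Percent".to_string(),
        value: 50.0,
    }];
    // Prints: [{"name":"CPU Load","unit":"Percent","value":50}]
    println!("{}", to_chart_json(&items));
}
```

Rust's `f64` `Display` prints `50.0` as `50`, whereas `serde_json` writes
`50.0`; both are valid JSON numbers for the chart.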

Then we'll get graphs like this:
[A live page with graphs](https://benchmark-action.github.io/github-action-benchmark/dev/bench/)

---------

Co-authored-by: ordian <write@reusable.software>

---
Cargo.lock | 1 +
...ilability-distribution-regression-bench.rs | 7 ++
.../availability-recovery-regression-bench.rs | 7 ++
polkadot/node/subsystem-bench/Cargo.toml | 1 +
.../subsystem-bench/src/lib/environment.rs | 2 +-
polkadot/node/subsystem-bench/src/lib/lib.rs | 1 +
.../node/subsystem-bench/src/lib/usage.rs | 28 +++++++
.../node/subsystem-bench/src/lib/utils.rs | 75 +++++--------------
8 files changed, 66 insertions(+), 56 deletions(-)

diff --git a/Cargo.lock b/Cargo.lock
index 074b657e767..9e52bfcf9a4 100644
--- a/Cargo.lock
+++ b/Cargo.lock
@@ -13644,6 +13644,7 @@ dependencies = [
  "sc-service",
  "schnorrkel 0.11.4",
  "serde",
+ "serde_json",
  "serde_yaml",
  "sha1",
  "sp-application-crypto",

diff --git a/polkadot/node/network/availability-distribution/benches/availability-distribution-regression-bench.rs b/polkadot/node/network/availability-distribution/benches/availability-distribution-regression-bench.rs
index 019eb122208..c33674a8f2f 100644
--- a/polkadot/node/network/availability-distribution/benches/availability-distribution-regression-bench.rs
+++ b/polkadot/node/network/availability-distribution/benches/availability-distribution-regression-bench.rs
@@ -27,6 +27,7 @@ use polkadot_subsystem_bench::{
 	availability::{benchmark_availability_write, prepare_test, TestState},
 	configuration::TestConfiguration,
 	usage::BenchmarkUsage,
+	utils::save_to_file,
 };
 use std::io::Write;
@@ -60,7 +61,13 @@ fn main() -> Result<(), String> {
 		})
 		.collect();
 	println!("\rDone!{}", " ".repeat(BENCH_COUNT));
+
 	let average_usage = BenchmarkUsage::average(&usages);
+	save_to_file(
+		"charts/availability-distribution-regression-bench.json",
+		average_usage.to_chart_json().map_err(|e| e.to_string())?,
+	)
+	.map_err(|e| e.to_string())?;
 	println!("{}", average_usage);
 	// We expect no variance for received and sent
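
Both benches keep `main() -> Result<(), String>`, while `to_chart_json` and
`save_to_file` return `color_eyre::eyre::Result`. Because `?` only converts
error types through `From` and the bench returns a plain `String`, each call
is followed by `.map_err(|e| e.to_string())`. A std-only sketch of that
pattern, where `Report` is a hypothetical stand-in for the eyre report type
and the two helpers are simplified stand-ins for the real functions:

```rust
use std::fmt;

/// Hypothetical error type standing in for `color_eyre::eyre::Report`.
#[derive(Debug)]
struct Report(String);

impl fmt::Display for Report {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "{}", self.0)
    }
}

/// Hypothetical stand-in for `BenchmarkUsage::to_chart_json`.
fn to_chart_json(fail: bool) -> Result<String, Report> {
    if fail {
        Err(Report("serialization failed".to_string()))
    } else {
        Ok("[]".to_string())
    }
}

/// Hypothetical stand-in for `save_to_file`; always succeeds here.
fn save_to_file(_path: &str, _value: String) -> Result<(), Report> {
    Ok(())
}

/// Same shape as the bench's `main() -> Result<(), String>`.
fn run(fail: bool) -> Result<(), String> {
    // There is no `From<Report> for String` in this sketch, so `?` alone would
    // not compile; each fallible call maps its error to a `String` first.
    save_to_file(
        "charts/example-regression-bench.json",
        to_chart_json(fail).map_err(|e| e.to_string())?,
    )
    .map_err(|e| e.to_string())?;
    Ok(())
}

fn main() {
    println!("{:?}", run(false));
    println!("{:?}", run(true));
}
```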

diff --git a/polkadot/node/network/availability-recovery/benches/availability-recovery-regression-bench.rs b/polkadot/node/network/availability-recovery/benches/availability-recovery-regression-bench.rs
index 5e8b81be82d..46a38516898 100644
--- a/polkadot/node/network/availability-recovery/benches/availability-recovery-regression-bench.rs
+++ b/polkadot/node/network/availability-recovery/benches/availability-recovery-regression-bench.rs
@@ -28,6 +28,7 @@ use polkadot_subsystem_bench::{
 	},
 	configuration::TestConfiguration,
 	usage::BenchmarkUsage,
+	utils::save_to_file,
 };
 use std::io::Write;
@@ -58,7 +59,13 @@ fn main() -> Result<(), String> {
 		})
 		.collect();
 	println!("\rDone!{}", " ".repeat(BENCH_COUNT));
+
 	let average_usage = BenchmarkUsage::average(&usages);
+	save_to_file(
+		"charts/availability-recovery-regression-bench.json",
+		average_usage.to_chart_json().map_err(|e| e.to_string())?,
+	)
+	.map_err(|e| e.to_string())?;
 	println!("{}", average_usage);
 	// We expect no variance for received and sent

diff --git a/polkadot/node/subsystem-bench/Cargo.toml b/polkadot/node/subsystem-bench/Cargo.toml
index 05907e428f9..b494f05180d 100644
--- a/polkadot/node/subsystem-bench/Cargo.toml
+++ b/polkadot/node/subsystem-bench/Cargo.toml
@@ -71,6 +71,7 @@ prometheus_endpoint = { package = "substrate-prometheus-endpoint", path = "../..
 prometheus = { version = "0.13.0", default-features = false }
 serde = { workspace = true, default-features = true }
 serde_yaml = { workspace = true }
+serde_json = { workspace = true }
 polkadot-node-core-approval-voting = { path = "../core/approval-voting" }
 polkadot-approval-distribution = { path = "../network/approval-distribution" }

diff --git a/polkadot/node/subsystem-bench/src/lib/environment.rs b/polkadot/node/subsystem-bench/src/lib/environment.rs
index 2d80d75a14a..42955d03022 100644
--- a/polkadot/node/subsystem-bench/src/lib/environment.rs
+++ b/polkadot/node/subsystem-bench/src/lib/environment.rs
@@ -404,7 +404,7 @@ impl TestEnvironment {
 		let total_cpu = test_env_cpu_metrics.sum_by("substrate_tasks_polling_duration_sum");
 		usage.push(ResourceUsage {
-			resource_name: "Test environment".to_string(),
+			resource_name: "test-environment".to_string(),
 			total: total_cpu,
 			per_block: total_cpu / num_blocks,
 		});

diff --git a/polkadot/node/subsystem-bench/src/lib/lib.rs b/polkadot/node/subsystem-bench/src/lib/lib.rs
index d06f2822a89..ef2724abc98 100644
--- a/polkadot/node/subsystem-bench/src/lib/lib.rs
+++ b/polkadot/node/subsystem-bench/src/lib/lib.rs
@@ -26,3 +26,4 @@ pub(crate) mod keyring;
 pub(crate) mod mock;
 pub(crate) mod network;
 pub mod usage;
+pub mod utils;

diff --git a/polkadot/node/subsystem-bench/src/lib/usage.rs b/polkadot/node/subsystem-bench/src/lib/usage.rs
index 7172969a8f9..59296746ec3 100644
--- a/polkadot/node/subsystem-bench/src/lib/usage.rs
+++ b/polkadot/node/subsystem-bench/src/lib/usage.rs
@@ -82,6 +82,27 @@ impl BenchmarkUsage {
 			_ => None,
 		}
 	}
+
+	// Prepares a json string for a graph representation
+	// See: https://github.com/benchmark-action/github-action-benchmark?tab=readme-ov-file#examples
+	pub fn to_chart_json(&self) -> color_eyre::eyre::Result<String> {
+		let chart = self
+			.network_usage
+			.iter()
+			.map(|v| ChartItem {
+				name: v.resource_name.clone(),
+				unit: "KiB".to_string(),
+				value: v.per_block,
+			})
+			.chain(self.cpu_usage.iter().map(|v| ChartItem {
+				name: v.resource_name.clone(),
+				unit: "seconds".to_string(),
+				value: v.per_block,
+			}))
+			.collect::<Vec<_>>();
+
+		Ok(serde_json::to_string(&chart)?)
+	}
 }
 fn check_usage(
@@ -151,3 +172,10 @@ impl ResourceUsage {
 }
 type ResourceUsageCheck<'a> = (&'a str, f64, f64);
+
+#[derive(Debug, Serialize)]
+pub struct ChartItem {
+	pub name: String,
+	pub unit: String,
+	pub value: f64,
+}
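
`to_chart_json` flattens both kinds of usage into a single list: network
entries are labeled in KiB, CPU entries in seconds, and each entry uses its
`per_block` value. The sketch below reproduces only that mapping, using
simplified stand-in structs that keep just the fields involved and skip
serialization; the sample resource names and values are made up:

```rust
/// Simplified stand-in for the crate's `ResourceUsage` (only the fields used here).
struct ResourceUsage {
    resource_name: String,
    per_block: f64,
}

/// Simplified stand-in for `BenchmarkUsage`.
struct BenchmarkUsage {
    network_usage: Vec<ResourceUsage>,
    cpu_usage: Vec<ResourceUsage>,
}

#[derive(Debug, PartialEq)]
struct ChartItem {
    name: String,
    unit: String,
    value: f64,
}

impl BenchmarkUsage {
    /// Network entries come first (in KiB), followed by CPU entries (in seconds),
    /// both taken per block.
    fn chart_items(&self) -> Vec<ChartItem> {
        let network = self.network_usage.iter().map(|v| ChartItem {
            name: v.resource_name.clone(),
            unit: "KiB".to_string(),
            value: v.per_block,
        });
        let cpu = self.cpu_usage.iter().map(|v| ChartItem {
            name: v.resource_name.clone(),
            unit: "seconds".to_string(),
            value: v.per_block,
        });
        network.chain(cpu).collect()
    }
}

fn main() {
    let usage = BenchmarkUsage {
        network_usage: vec![ResourceUsage { resource_name: "example-network".into(), per_block: 1.5 }],
        cpu_usage: vec![ResourceUsage { resource_name: "test-environment".into(), per_block: 0.25 }],
    };
    for item in usage.chart_items() {
        println!("{} = {} {}", item.name, item.value, item.unit);
    }
}
```

Since network and CPU entries share one flat list, the unit string is what
tells the chart which kind of resource a point belongs to.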

diff --git a/polkadot/node/subsystem-bench/src/lib/utils.rs b/polkadot/node/subsystem-bench/src/lib/utils.rs
index cd206d8f322..b3cd3a88b6c 100644
--- a/polkadot/node/subsystem-bench/src/lib/utils.rs
+++ b/polkadot/node/subsystem-bench/src/lib/utils.rs
@@ -16,61 +16,26 @@
 //! Test utils
-use crate::usage::BenchmarkUsage;
-use std::io::{stdout, Write};
-
-pub struct WarmUpOptions<'a> {
-	/// The maximum number of runs considered for warming up.
-	pub warm_up: usize,
-	/// The number of runs considered for benchmarking.
-	pub bench: usize,
-	/// The difference in CPU usage between runs considered as normal
-	pub precision: f64,
-	/// The subsystems whose CPU usage is checked during warm-up cycles
-	pub subsystems: &'a [&'a str],
-}
-
-impl<'a> WarmUpOptions<'a> {
-	pub fn new(subsystems: &'a [&'a str]) -> Self {
-		Self { warm_up: 100, bench: 3, precision: 0.02, subsystems }
-	}
-}
-
-pub fn warm_up_and_benchmark(
-	options: WarmUpOptions,
-	run: impl Fn() -> BenchmarkUsage,
-) -> Result<BenchmarkUsage, String> {
-	println!("Warming up...");
-	let mut usages = Vec::with_capacity(options.bench);
-
-	for n in 1..=options.warm_up {
-		let curr = run();
-		if let Some(prev) = usages.last() {
-			let diffs = options
-				.subsystems
-				.iter()
-				.map(|&v| {
-					curr.cpu_usage_diff(prev, v)
-						.ok_or(format!("{} not found in benchmark {:?}", v, prev))
-				})
-				.collect::<Result<Vec<f64>, String>>()?;
-			if !diffs.iter().all(|&v| v < options.precision) {
-				usages.clear();
-			}
-		}
-		usages.push(curr);
-		print!("\r{}%", n * 100 / options.warm_up);
-		if usages.len() == options.bench {
-			println!("\rTook {} runs to warm up", n.saturating_sub(options.bench));
-			break;
-		}
-		stdout().flush().unwrap();
-	}
-
-	if usages.len() != options.bench {
-		println!("Didn't warm up after {} runs", options.warm_up);
-		return Err("Can't warm up".to_string())
+use std::{fs::File, io::Write};
+
+// Saves a given string to a file
+pub fn save_to_file(path: &str, value: String) -> color_eyre::eyre::Result<()> {
+	let output = std::process::Command::new(env!("CARGO"))
+		.arg("locate-project")
+		.arg("--workspace")
+		.arg("--message-format=plain")
+		.output()
+		.unwrap()
+		.stdout;
+	let workspace_dir = std::path::Path::new(std::str::from_utf8(&output).unwrap().trim())
+		.parent()
+		.unwrap();
+	let path = workspace_dir.join(path);
+	if let Some(dir) = path.parent() {
+		std::fs::create_dir_all(dir)?;
 	}
+	let mut file = File::create(path)?;
+	file.write_all(value.as_bytes())?;
-	Ok(BenchmarkUsage::average(&usages))
+	Ok(())
 }
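
`save_to_file` writes relative to the workspace root, which it finds by
running `cargo locate-project --workspace --message-format=plain` with the
cargo path captured at compile time through `env!("CARGO")`. It `unwrap`s the
command and UTF-8 results, so a failed lookup panics rather than returning an
error (empty output, for example, yields a path with no parent). Below is a
std-only sketch of the same flow that propagates those failures as
`io::Error`. The helper names `workspace_dir` and `save_under`, the exit-status
check, and reading `CARGO` at runtime with a fallback to `cargo` on `PATH` are
assumptions for illustration, not part of this patch:

```rust
use std::env;
use std::fs::{self, File};
use std::io::{self, Write};
use std::path::{Path, PathBuf};
use std::process::Command;

/// Hypothetical helper: asks cargo for the workspace manifest and returns its directory.
fn workspace_dir() -> io::Result<PathBuf> {
    // Assumption: use CARGO if the environment provides it, else `cargo` on PATH.
    let cargo = env::var("CARGO").unwrap_or_else(|_| "cargo".to_string());
    let output = Command::new(cargo)
        .args(["locate-project", "--workspace", "--message-format=plain"])
        .output()?;
    if !output.status.success() {
        return Err(io::Error::new(io::ErrorKind::Other, "cargo locate-project failed"));
    }
    let manifest = String::from_utf8(output.stdout)
        .map_err(|e| io::Error::new(io::ErrorKind::InvalidData, e))?;
    // The command prints the path to the workspace Cargo.toml; its parent is the root.
    let root = Path::new(manifest.trim()).parent().map(Path::to_path_buf);
    root.ok_or_else(|| io::Error::new(io::ErrorKind::NotFound, "manifest path has no parent"))
}

/// Hypothetical helper: writes `value` to `base/relative`, creating parent directories.
fn save_under(base: &Path, relative: &str, value: &str) -> io::Result<PathBuf> {
    let path = base.join(relative);
    if let Some(dir) = path.parent() {
        fs::create_dir_all(dir)?;
    }
    let mut file = File::create(&path)?;
    file.write_all(value.as_bytes())?;
    Ok(path)
}

fn main() -> io::Result<()> {
    match workspace_dir() {
        Ok(dir) => println!("workspace root: {}", dir.display()),
        Err(e) => println!("could not locate a cargo workspace: {}", e),
    }
    // Write into a scratch directory so running the sketch leaves real checkouts untouched.
    let base = env::temp_dir().join("save-to-file-sketch");
    let path = save_under(&base, "charts/example-regression-bench.json", "[]")?;
    println!("wrote {}", path.display());
    Ok(())
}
```

Keeping the workspace lookup separate from the write also makes the write path
easy to exercise against a temporary directory.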

--
