- Aug 14, 2024

-

-

Morgan Adamiec authored

-

Morgan Adamiec authored

-

Morgan Adamiec authored

-

Sebastian Kunert authored

Backports #5273 & #5281

Co-authored-by: Guillaume Thiolliere <gui.thiolliere@gmail.com>

-

- Aug 13, 2024

-

-

Alin Dima authored

Backport https://github.com/paritytech/polkadot-sdk/pull/4937 on the stable release

-

Alin Dima authored

Backports https://github.com/paritytech/polkadot-sdk/pull/5321 on the stable release

-

Oliver Tale-Yazdi authored

Fix compilation issue for Rust stable 1.80 and nightly 1.82: `cargo update -p time`

Signed-off-by: Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io>

-

- Aug 09, 2024

-

-

Egor_P authored

This PR makes it possible to pass the Docker stable release tag as an input parameter for the produced Docker images, so that the tag matches the release version.

-

Andrei Eres authored

Co-authored-by: Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io>
Co-authored-by: Jun Jiang <jasl9187@hotmail.com>

-

- Aug 06, 2024

-

-

Oliver Tale-Yazdi authored

Backport #4812 and #5189 to fix the CI.

Signed-off-by: Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io>
Co-authored-by: Morgan Adamiec <morgan@parity.io>

-

- Jul 29, 2024

-

-

EgorPopelyaev authored

-

Bastian Köcher authored

Fix https://github.com/paritytech/polkadot-sdk/issues/3487.

Co-authored-by: André Silva <123550+andresilva@users.noreply.github.com>
Co-authored-by: Dmitry Lavrenov <39522748+dmitrylavrenov@users.noreply.github.com>

-

- Jul 26, 2024

-

-

Alin Dima authored

[stable2407 backport] runtime: make the candidate relay parent progression check more stric… (#5157)

Backports https://github.com/paritytech/polkadot-sdk/pull/5113 on top of stable2407

-

- Jul 24, 2024

-

-

Egor_P authored

This PR backports hotfix from #5103

Signed-off-by: Alexandru Vasile <alexandru.vasile@parity.io>
Co-authored-by: Alexandru Vasile <60601340+lexnv@users.noreply.github.com>
Co-authored-by: Bastian Köcher <git@kchr.de>

-

- Jul 22, 2024

-

-

EgorPopelyaev authored

-

- Jul 19, 2024

-

-

Morgan Adamiec authored

-

- Jul 18, 2024

-

-

Morgan Adamiec authored

-

Morgan Adamiec authored

-

Morgan Adamiec authored

-

Morgan Adamiec authored

-

- Jul 17, 2024

-

-

EgorPopelyaev authored

-

EgorPopelyaev authored

-

EgorPopelyaev authored

-

EgorPopelyaev authored

-

Alexandru Vasile authored

This release includes https://github.com/libp2p/rust-libp2p/pull/5482, which fixes the substrate node crashing with the following libp2p trace:

```
0: sp_panic_handler::set::{{closure}}
1: std::panicking::rust_panic_with_hook
2: std::panicking::begin_panic::{{closure}}
3: std::sys_common::backtrace::__rust_end_short_backtrace
4: std::panicking::begin_panic
5: <quicksink::SinkImpl<S,F,T,A,E> as futures_sink::Sink<A>>::poll_ready
6: <rw_stream_sink::RwStreamSink<S> as futures_io::if_std::AsyncWrite>::poll_write
7: <libp2p_noise::io::framed::NoiseFramed<T,S> as futures_sink::Sink<&alloc::vec::Vec<u8>>>::poll_ready
8: <libp2p_noise::io::Output<T> as futures_io::if_std::AsyncWrite>::poll_write
9: <yamux::frame::io::Io<T> as futures_sink::Sink<yamux::frame::Frame<()>>>::poll_ready
10: yamux::connection::Connection<T>::poll_next_inbound
11: <libp2p_yamux::Muxer<C> as libp2p_core::muxing::StreamMuxer>::poll
12: <libp2p_core::muxing::boxed::Wrap<T> as libp2p_core::muxing::StreamMuxer>::poll
13: <libp2p_core::muxing::boxed::Wrap<T> as libp2p_core::muxing::StreamMuxer>::poll
14: libp2p_swarm::connection::pool::task::new_for_established_connection::{{closure}}
15: <sc_service::task_manager::prometheus_future::PrometheusFuture<T> as core::future::future::Future>::poll
16: <futures_util::future::select::Select<A,B> as core::future::future::Future>::poll
17: <tracing_futures::Instrumented<T> as core::future::future::Future>::poll
18: std::panicking::try
19: tokio::runtime::task::harness::Harness<T,S>::poll
20: tokio::runtime::scheduler::multi_thread::worker::Context::run_task
21: tokio::runtime::scheduler::multi_thread::worker::Context::run
22: tokio::runtime::context::set_scheduler
23: tokio::runtime::context::runtime::enter_runtime
24: tokio::runtime::scheduler::multi_thread::worker::run
25: tokio::runtime::task::core::Core<T,S>::poll
26: tokio::runtime::task::harness::Harness<T,S>::poll
27: std::sys_common::backtrace::__rust_begin_short_backtrace
28: core::ops::function::FnOnce::call_once{{vtable.shim}}
29: std::sys::pal::unix::thread::Thread::new::thread_start
30: <unknown>
31: <unknown>

Thread 'tokio-runtime-worker' panicked at 'SinkImpl::poll_ready called after error.', /home/ubuntu/.cargo/registry/src/index.crates.io-6f17d22bba15001f/quicksink-0.1.2/src/lib.rs:158
```

Closes: https://github.com/paritytech/polkadot-sdk/issues/4934

Signed-off-by: Alexandru Vasile <alexandru.vasile@parity.io>

-

Sebastian Kunert authored

After the merge of #4922 we saw failing zombienet tests with the following error:

```
2024-07-09 10:30:09 Error applying finality to block (0xb9e1d3d9cb2047fe61667e28a0963e0634a7b29781895bc9ca40c898027b4c09, 56685): UnknownBlock: Header was not found in the database: 0x0000000000000000000000000000000000000000000000000000000000000000
2024-07-09 10:30:09 GRANDPA voter error: could not complete a round on disk: UnknownBlock: Header was not found in the database: 0x0000000000000000000000000000000000000000000000000000000000000000
```

[Example](https://gitlab.parity.io/parity/mirrors/polkadot-sdk/-/jobs/6662262)

The crash is related to warp sync. After warp syncing, there can be gaps in the block ancestry where we don't have the header. At the same time, the genesis hash is in the set of leaves. In `displaced_leaves_after_finalizing` we then iterate backwards from the finalized block until we hit an unknown block, crashing the node.

This PR makes the detection of displaced branches resilient against unknown blocks in the finalized block chain.

cc @nazar-pc (github won't let me request a review from you)

Co-authored-by: Bastian Köcher <git@kchr.de>
Co-authored-by: command-bot <>
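The idea of tolerating gaps in the ancestry can be illustrated with a small sketch. This is not the code from the PR: `Header`, `known_finalized_chain` and `displaced_leaves` are hypothetical names, hashes are modelled as plain `u64` values, and a leaf whose ancestry runs into a missing header is simply left alone rather than treated as an error.

```rust
use std::collections::HashMap;

// Hypothetical stand-in for a block hash.
type Hash = u64;

// Hypothetical minimal header: block number plus parent hash.
#[derive(Clone, Debug)]
struct Header {
    number: u64,
    parent: Hash,
}

// Walk back from the finalized block and record the finalized chain by
// block number. A missing header (a gap left by warp sync) ends the walk
// instead of producing an error.
fn known_finalized_chain(headers: &HashMap<Hash, Header>, finalized: Hash) -> HashMap<u64, Hash> {
    let mut chain = HashMap::new();
    let mut current = finalized;
    while let Some(header) = headers.get(&current) {
        chain.insert(header.number, current);
        if header.number == 0 {
            break; // reached genesis
        }
        current = header.parent;
    }
    chain
}

// Return the leaves that provably sit on a fork of the finalized chain.
// Leaves whose ancestry cannot be followed are skipped, not reported.
fn displaced_leaves(headers: &HashMap<Hash, Header>, leaves: &[Hash], finalized: Hash) -> Vec<Hash> {
    let finalized_number = match headers.get(&finalized) {
        Some(header) => header.number,
        None => return Vec::new(),
    };
    let chain = known_finalized_chain(headers, finalized);
    let mut displaced = Vec::new();
    for &leaf in leaves {
        let mut current = leaf;
        while let Some(header) = headers.get(&current) {
            match chain.get(&header.number) {
                // The walk joined the finalized chain: not displaced.
                Some(&hash) if hash == current => break,
                // Same height as a finalized block but a different hash: a fork.
                Some(_) => {
                    displaced.push(leaf);
                    break;
                }
                // Still above the finalized block: keep walking back.
                None if header.number > finalized_number => current = header.parent,
                // Below the known part of the finalized chain: undecidable.
                None => break,
            }
        }
    }
    displaced
}
```

With a genesis leaf, a gap above it, and a fork next to the finalized chain, only the fork's leaf is reported; the genesis leaf is skipped because the gap makes its status undecidable.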

-

Egor_P authored

This PR contains adjustments of the node release pipelines so that it will be possible to use those to trigger release actions based on the `stable` branch.

Previously the whole pipeline of the flows from [creation of the `rc-tag`](https://github.com/paritytech/polkadot-sdk/blob/master/.github/workflows/release-10_rc-automation.yml) (v1.15.0-rc1, v1.15.0-rc2, etc) till [the release draft creation](https://github.com/paritytech/polkadot-sdk/blob/master/.github/workflows/release-30_publish_release_draft.yml) was triggered on push to the node release branch. As we had the node release branch and the crates release branch separately, it worked fine.

From now on, as we are switching to the one branch approach, for the first iteration I would like to keep things simple to see how the new release process will work with both parts (crates and node) made from one branch.

Changes made:

- The first step in the pipeline (rc-tag creation) will be triggered manually instead of on push to the branch
- The tag version will be set manually from the input instead of being taken from the branch name
- Docker image will be additionally tagged as `stable`

Closes: https://github.com/paritytech/release-engineering/issues/214

-

Alin Dima authored

Resolves https://github.com/paritytech/polkadot-sdk/issues/4468

Gives parachain teams instructions on how to enable the elastic scaling MVP.

Still a draft because it depends on further changes we make to the slot-based collator: https://github.com/paritytech/polkadot-sdk/pull/4097

Parachains cannot use this yet because the collator has not been released and no relay chain network has been configured for elastic scaling yet.

-

- Jul 16, 2024

-

-

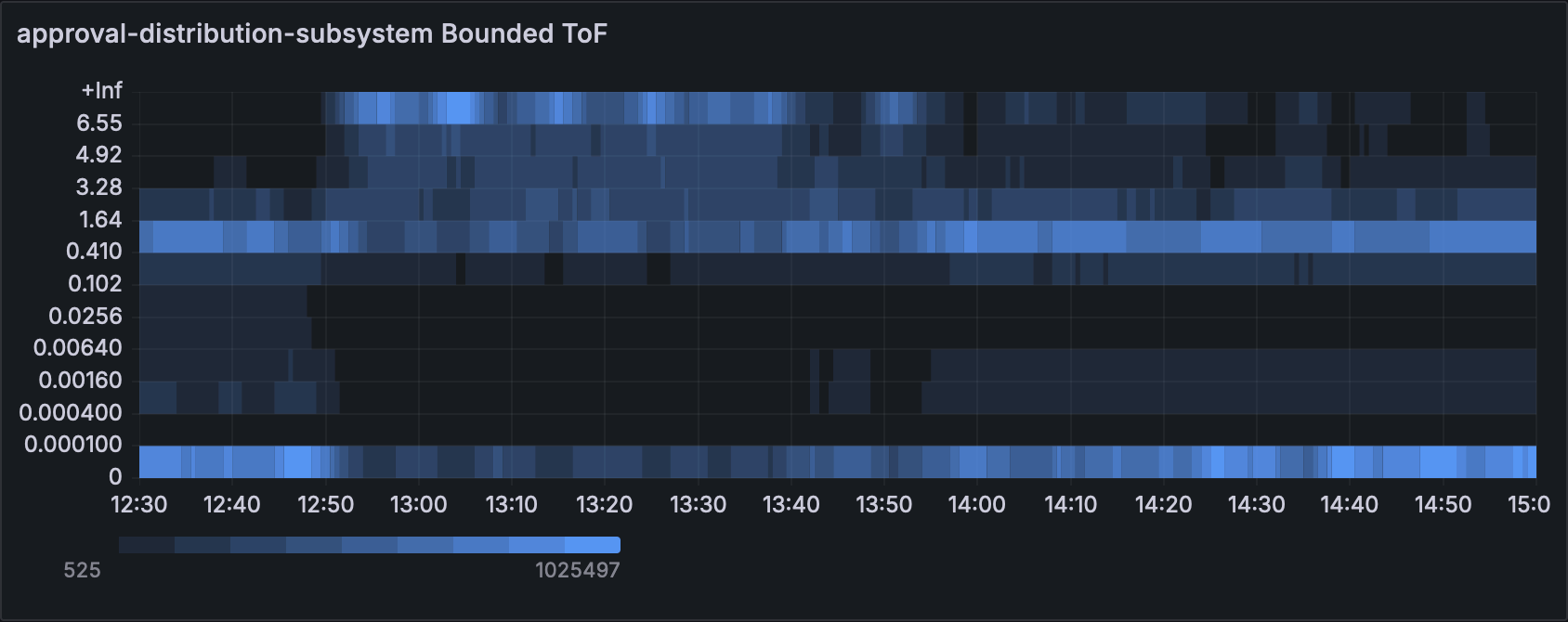

Andrei Eres authored

Closes https://github.com/paritytech/polkadot-sdk/issues/577

### Changed

- `orchestra` updated to 0.4.0
- `PeerViewChange` is sent with high priority and should be processed first in a queue.
- To count them in tests, a tracker was added to `TestSender` and `TestOverseer`. It acts more like a smoke test though.

### Testing on Versi

The changes were tested on Versi with two objectives:

1. Make sure the node functionality does not change.
2. See how the changes affect performance.

Test setup:

- 2.5 hours for each case
- 100 validators
- 50 parachains
- validatorsPerCore = 2
- neededApprovals = 100
- nDelayTranches = 89
- relayVrfModuloSamples = 50

During the test period, all nodes ran without any crashes, which satisfies the first objective.

To estimate the change in performance we used ToF charts. The graphs show that the spikes at the top seen before are gone. This proves that our hypothesis is correct.

### Normalized charts with ToF

[Before](https://grafana.teleport.parity.io/goto/ZoR53ClSg?orgId=1)

[After](https://grafana.teleport.parity.io/goto/6ux5qC_IR?orgId=1)

### Conclusion

The prioritization of subsystem messages reduces the ToF of the networking subsystem, which helps faster propagation of gossip messages.
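To illustrate what "processed first in a queue" means, here is a minimal two-lane inbox sketch. It does not use the `orchestra` API; `Message`, `PriorityInbox`, `send` and `recv` are hypothetical names chosen for this example, and the only behaviour shown is that a `PeerViewChange` is dequeued before any normal-priority message that arrived earlier.

```rust
use std::collections::VecDeque;

// Hypothetical message type; only `PeerViewChange` is treated as high priority.
#[derive(Debug, PartialEq)]
enum Message {
    PeerViewChange(u32),
    Other(&'static str),
}

// Hypothetical inbox with one lane per priority level.
#[derive(Default)]
struct PriorityInbox {
    high: VecDeque<Message>,
    normal: VecDeque<Message>,
}

impl PriorityInbox {
    // Route the message to its lane based on its kind.
    fn send(&mut self, msg: Message) {
        match msg {
            Message::PeerViewChange(_) => self.high.push_back(msg),
            _ => self.normal.push_back(msg),
        }
    }

    // Drain the high-priority lane before looking at the normal lane.
    // Within a lane, messages keep their arrival order.
    fn recv(&mut self) -> Option<Message> {
        self.high.pop_front().or_else(|| self.normal.pop_front())
    }
}
```

A strict two-lane scheme like this can starve the normal lane if high-priority traffic never stops; whether that matters depends on how rare the prioritized messages are.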

-

Alexander Samusev authored

cc https://github.com/paritytech/ci_cd/issues/939

-

Andrei Eres authored

A baseline for the statement-distribution regression test was set only at the beginning, and we now see that the actual values are a bit lower.

<img width="1001" alt="image" src="https://github.com/user-attachments/assets/40b06eec-e38f-43ad-b437-89eca502aa66">

[Source](https://paritytech.github.io/polkadot-sdk/bench/statement-distribution-regression-bench)

-

Sebastian Miasojed authored

Introduce transient storage, which behaves identically to regular storage but is kept only in memory and discarded after every transaction. This functionality is similar to the `TSTORE` and `TLOAD` operations used in Ethereum.

The following new host functions have been introduced:

- `get_transient_storage`
- `set_transient_storage`
- `take_transient_storage`
- `clear_transient_storage`
- `contains_transient_storage`

Note: These functions are declared as `unstable` and thus are not activated.

Co-authored-by: command-bot <>
Co-authored-by: PG Herveou <pgherveou@gmail.com>
Co-authored-by: Alexander Theißen <alex.theissen@me.com>
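The semantics described above can be sketched with a plain in-memory map. This is only an illustration and not the contracts pallet's implementation or its host function signatures: `TransientStorage` and its methods are hypothetical names that mirror the five operations, and `end_transaction` stands in for whatever discards the data once a transaction finishes.

```rust
use std::collections::HashMap;

// Hypothetical in-memory store that lives for a single transaction.
#[derive(Default)]
struct TransientStorage {
    entries: HashMap<Vec<u8>, Vec<u8>>,
}

impl TransientStorage {
    // Read a value without removing it.
    fn get(&self, key: &[u8]) -> Option<&[u8]> {
        self.entries.get(key).map(|value| value.as_slice())
    }

    // Write a value and return the previous one, if any.
    fn set(&mut self, key: &[u8], value: Vec<u8>) -> Option<Vec<u8>> {
        self.entries.insert(key.to_vec(), value)
    }

    // Remove a value and hand it back to the caller.
    fn take(&mut self, key: &[u8]) -> Option<Vec<u8>> {
        self.entries.remove(key)
    }

    // Remove a value and report whether it existed.
    fn clear(&mut self, key: &[u8]) -> bool {
        self.entries.remove(key).is_some()
    }

    // Check for presence without reading the value.
    fn contains(&self, key: &[u8]) -> bool {
        self.entries.contains_key(key)
    }

    // Nothing survives the end of the transaction.
    fn end_transaction(&mut self) {
        self.entries.clear();
    }
}
```

Unlike regular storage, nothing here is ever written to a database, which is what makes the data transient.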

-

Alexandru Gheorghe authored

This is part of the work to further optimize the approval subsystems. For the full context, start with https://github.com/paritytech/polkadot-sdk/pull/4849#issue-2364261568; that is not necessary, however, as this change is self-contained and nodes benefit from it regardless of whether subsequent changes land.

While testing with 1000 validators I found that the logic for determining which validators an assignment should be gossiped to takes a lot of time, because it always iterated through all the peers to determine which are X and Y neighbours and which peers we should randomly gossip to (4 samples).

This can be optimised so that we don't have to iterate through all peers for each new assignment: fetch the list of X and Y peer ids from the topology first, then stop the loop once we have taken the 4 random samples.

With these improvements we reduce the total CPU time spent in approval-distribution by 15% on networks with 500 validators and by 20% on networks with 1000 validators.

## Test coverage

`propagates_assignments_along_unshared_dimension` and `propagates_locally_generated_assignment_to_both_dimensions` already cover this logic, and they pass, confirming that there is no breaking change.

Additionally, the approval voting benchmark measures the traffic sent to other peers, so I confirmed that for various network sizes there is no difference in the size of the traffic sent to other peers.

Signed-off-by: Alexandru Gheorghe <alexandru.gheorghe@parity.io>
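The shape of the optimisation can be sketched as follows. This is not the approval-distribution code: `gossip_targets`, `PeerId` as `u32`, and the assumption that the caller passes candidate peers in an already shuffled order are all made up for illustration. The function also returns how many candidates it inspected, to make the early exit visible.

```rust
use std::collections::HashSet;

// Hypothetical peer identifier.
type PeerId = u32;

// Number of random peers to gossip to, as stated in the description.
const RANDOM_SAMPLES: usize = 4;

// Select gossip targets without scanning every peer.
// `grid_neighbours` are the X and Y neighbours fetched from the topology;
// `shuffled_peers` is assumed to yield connected peers in random order.
fn gossip_targets(
    grid_neighbours: &[PeerId],
    connected: &HashSet<PeerId>,
    shuffled_peers: impl IntoIterator<Item = PeerId>,
) -> (Vec<PeerId>, usize) {
    let mut targets = Vec::new();
    let mut seen = HashSet::new();

    // Step 1: take the grid neighbours directly, keeping only connected ones.
    for &peer in grid_neighbours {
        if connected.contains(&peer) && seen.insert(peer) {
            targets.push(peer);
        }
    }

    // Step 2: add random peers and stop as soon as enough samples are taken.
    let mut inspected = 0;
    let mut samples = 0;
    let mut candidates = shuffled_peers.into_iter();
    while samples < RANDOM_SAMPLES {
        match candidates.next() {
            Some(peer) => {
                inspected += 1;
                // Skip peers that were already selected as grid neighbours.
                if seen.insert(peer) {
                    targets.push(peer);
                    samples += 1;
                }
            }
            None => break,
        }
    }
    (targets, inspected)
}
```

The work per assignment is then bounded by the number of grid neighbours plus the few candidates needed to collect the random samples, rather than by the total number of peers.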

-

Parth Mittal authored

As per #3326, removes usage of the `pallet::getter` macro from the `session` pallet. The syntax `StorageItem::<T, I>::get()` should be used instead.

Also, adds public functions for compatibility.

NOTE: The `./historical` directory has not been modified.

cc @muraca

polkadot address: 5GsLutpKjbzsbTphebs9Uy4YK6gTN47MAaz6njPktidjR5cp

Co-authored-by: Kian Paimani <5588131+kianenigma@users.noreply.github.com>
Co-authored-by: Bastian Köcher <git@kchr.de>

-

Javyer authored

This will ensure that malicious code can not access other parts of the project.

Co-authored-by: Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io>
Co-authored-by: Bastian Köcher <git@kchr.de>

-

Alexandru Vasile authored

The `DhtEvent::ValuePut` was not propagated back to the higher levels. This PR ensures we'll send the `ValuePut` event similarly to `DhtEvent::ValuePutFailed`.

### Next Steps

- [ ] A bit more testing

Thanks @alexggh for catching this

Alexandru Vasile <alexandru.vasile@parity.io>

-

- Jul 15, 2024

-

-

Niklas Adolfsson authored

Co-authored-by: Bastian Köcher <git@kchr.de>

-

Branislav Kontur authored

## Testing

Both Bridges zombienet tests passed, e.g.:

https://gitlab.parity.io/parity/mirrors/polkadot-sdk/-/jobs/6698640
https://gitlab.parity.io/parity/mirrors/polkadot-sdk/-/jobs/6698641
https://gitlab.parity.io/parity/mirrors/polkadot-sdk/-/jobs/6700072
https://gitlab.parity.io/parity/mirrors/polkadot-sdk/-/jobs/6700073

-

Jun Jiang authored

This should remove nearly all usage of `sp-std` except:

- bridge and bridge-hubs
- a few of the frame crates re-export `sp-std`; keep them for now
- there is a usage of `sp_std::Writer`, and I don't have an idea of how to move it

Please review the proc-macro changes carefully. I'm not sure I'm doing it the right way.

Note: need `/bot fmt`

Co-authored-by: Bastian Köcher <git@kchr.de>
Co-authored-by: command-bot <>

-

Jun Jiang authored

It says `Will be removed after July 2023` but that's not true

Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io> Co-authored-by:

Bastian Köcher <git@kchr.de>

-