- Jan 20, 2025

-

-

Sebastian Kunert authored

Follow-up to #6825, which introduced this bug.

We use the `can_build_upon` method to ask the runtime whether it is fine to build another block. The runtime checks this based on the [`ConsensusHook`](https://github.com/paritytech/polkadot-sdk/blob/c1b7c302/cumulus/pallets/aura-ext/src/consensus_hook.rs#L110-L110) implementation, the most popular one being the `FixedConsensusHook`.

In #6825 I removed a check that would always allow us to build when we are building on an included block. It turns out this check is still required when:

1. The [`UnincludedSegment`](https://github.com/paritytech/polkadot-sdk/blob/c1b7c302/cumulus/pallets/parachain-system/src/lib.rs#L758-L758) storage item in pallet-parachain-system has reached or exceeded the maximum unincluded segment length.
2. We are calling the `can_build_upon` runtime API where the included block has progressed offchain to the current parent block (so the last entry in the `UnincludedSegment` storage item).

In this scenario the last entry in `UnincludedSegment` does not have a hash assigned yet (because it was not available in `on_finalize` of the previous block). So the unincluded segment will be reported at its maximum length, which will forbid building another block.

Ideally we would have a more elegant solution than relying on the node side here. But for now the check is reintroduced and a test is added so that it does not break again by accident.

---------

Co-authored-by: command-bot <>
Co-authored-by: Michal Kucharczyk <1728078+michalkucharczyk@users.noreply.github.com>
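The reintroduced node-side check can be pictured with a minimal sketch. It is not the code from the PR: `SegmentView`, `may_build_upon`, and the use of `u64` values as block identifiers are assumptions made purely for illustration.

```rust
/// Hypothetical, simplified view of what the runtime reports to the node.
pub struct SegmentView {
    /// Number of entries currently counted in the unincluded segment.
    pub unincluded_len: usize,
    /// Maximum number of entries the consensus hook allows.
    pub capacity: usize,
}

/// Decide whether another block may be built on `parent`.
///
/// `included` is the block the node knows to be included. When the parent
/// *is* the included block, the check short-circuits to `true`, because the
/// runtime may still count the parent as part of the unincluded segment.
pub fn may_build_upon(parent: u64, included: u64, view: &SegmentView) -> bool {
    if parent == included {
        return true;
    }
    // Otherwise fall back to the capacity check.
    view.unincluded_len < view.capacity
}
```

With a segment reported as full, building is still allowed when the parent is the included block, and refused otherwise.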

-

- Jan 17, 2025

-

-

PG Herveou authored

Add foundation for supporting call traces in pallet_revive.

Follow up:

- PR #7167: Add changes to eth-rpc to introduce a debug endpoint that will use pallet-revive tracing features.
- PR #6727: Add a new RPC to the client and implement a tracing runtime API that can capture traces on previous blocks.

---------

Co-authored-by: Alexander Theißen <alex.theissen@me.com>

-

Santi Balaguer authored

This adds a new Proxy type to the Westend runtime called ParaRegistration. This is related to: https://github.com/polkadot-fellows/runtimes/pull/520.

This new proxy allows:

1. Reserve paraID
2. Register Parachain
3. Leverage the Utility pallet
4. Remove proxy

---------

Co-authored-by: command-bot <>
Co-authored-by: Dónal Murray <donal.murray@parity.io>

-

Maksym H authored

Deprecation warning for old command bot

-

Yuri Volkov authored

Upgrading PAPI in review-bot: https://github.com/paritytech/review-bot/issues/140

-

Alexander Theißen authored

Update to PolkaVM `0.19`. This version renumbers the opcodes to be in line with the grey paper, hopefully for the last time. This means that it breaks existing contracts.

---------

Signed-off-by: xermicus <cyrill@parity.io>
Co-authored-by: command-bot <>
Co-authored-by: xermicus <cyrill@parity.io>

-

thiolliere authored

We already use it for lots of pallets. Keeping it feature-gated behind `experimental` means we lose the information of which pallets were using `experimental` before the migration to `frame` crate usage. We can consider the `polkadot-sdk-frame` crate unstable, but let's not use the `experimental` feature.

---------

Co-authored-by: command-bot <>

-

- Jan 16, 2025

-

-

Ankan authored

Migrate staking currency from `traits::LockableCurrency` to `traits::fungible::holds`.

Resolves part of https://github.com/paritytech/polkadot-sdk/issues/226.

## Changes

### Nomination Pool

TransferStake is now incompatible with the fungible migration, as old pools were not meant to have additional ED. Since they are deprecated anyway, its usage was removed from all test runtimes.

### Staking

- Config: `Currency` becomes of type `Fungible` while `OldCurrency` is the `LockableCurrency` used before.
- Lazy migration of accounts. Any ledger update will create a new hold with no extra reads/writes. A permissionless extrinsic `migrate_currency()` releases the old `lock` along with some housekeeping.
- Staking now requires ED to be left free. It also adds no consumer to staking accounts.
- If a hold cannot be applied to all stake, the un-holdable part is force withdrawn from the ledger.

### Delegated Staking

The pallet does not add a provider for agents anymore.

## Migration stats

### Polkadot

- Total accounts that can be migrated: 59564
- Accounts failing to migrate: 0
- Accounts with stake force withdrawn greater than ED: 59
- Total force withdrawal: 29591.26 DOT

### Kusama

- Total accounts that can be migrated: 26311
- Accounts failing to migrate: 0
- Accounts with stake force withdrawn greater than ED: 48
- Total force withdrawal: 1036.05 KSM

[Full logs here](https://hackmd.io/@ak0n/BklDuFra0).

## Note about locks (freeze) vs holds

With locks or freezes, staking could use the total balance of an account. But with holds, the account needs to be left with at least the Existential Deposit in free balance. This also affects nomination pools, which until now have been able to stake all funds contributed to them.

An alternate version of this PR is https://github.com/paritytech/polkadot-sdk/pull/5658, where the staking pallet does not add any provider. That means the pools and delegated-staking pallets have to provide for these accounts, which makes the end-to-end logic (of provider and consumer refs) a lot less intuitive and more prone to bugs.

This PR now introduces the requirement for stakers to maintain ED in their free balance. This helps with removing the bug-prone incrementing and decrementing of consumers and providers.

## TODO

- [x] Test: Vesting + governance locked funds can be staked.
- [ ] Can `Call::restore_ledger` be removed? @gpestana
- [x] Ensure unclaimed withdrawals are not affected by no provider for pool accounts.
- [x] Investigate Kusama accounts with balance between 0 and ED.
- [x] Permissionless call to release lock.
- [x] Migration of consumer (dec) and provider (inc) for direct stakers.
- [x] Force unstake if hold cannot be applied to all stake.
- [x] Fix try-state checks (it thinks nothing is staked for unmigrated ledgers).
- [x] Bench `migrate_currency`.
- [x] Virtual Staker migration test.
- [x] Ensure total issuance is up to date when minting rewards.

## Followup

- https://github.com/paritytech/polkadot-sdk/issues/5742

---------

Co-authored-by: command-bot <>
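The difference between locks and holds described in this commit can be illustrated with some plain arithmetic. This is a simplified sketch, not the pallet's logic: the `Balance` alias and all three helper functions are hypothetical, and real accounts have additional constraints (other holds, freezes) that are ignored here.

```rust
/// Balance type used in this sketch (an assumption; runtimes configure their own).
pub type Balance = u128;

/// With locks or freezes, the total balance could back stake.
pub fn max_stake_with_lock(total: Balance) -> Balance {
    total
}

/// With holds, the existential deposit (ED) must stay in the free balance.
pub fn max_stake_with_hold(total: Balance, ed: Balance) -> Balance {
    total.saturating_sub(ed)
}

/// Part of an existing stake that cannot be held and would therefore be
/// force withdrawn from the ledger during migration.
pub fn force_withdrawn(staked: Balance, total: Balance, ed: Balance) -> Balance {
    staked.saturating_sub(max_stake_with_hold(total, ed))
}
```

An account that had staked its entire balance under the lock model would, in this simplified model, see exactly one ED force withdrawn.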

-

chloefeal authored


chloefeal <188809157+chloefeal@users.noreply.github.com>

-

Javier Viola authored

Zombienet substrate tests PoC (using native provider). cc: @emamihe @alvicsam

-

Dastan authored

closes https://github.com/paritytech/polkadot-sdk/issues/6911

-

Giuseppe Re authored

The changes from v0.10.3 are only related to dependencies version. This should fix some failing CIs. This PR also updates the Rust cache version in CI.

-

Liam Aharon authored

Closes #3149

## Description

This PR introduces `pallet-asset-rewards`, which allows accounts to be rewarded for freezing `fungible` tokens. The motivation for creating this pallet is to allow incentivising LPs.

See the pallet docs for more info about the pallet.

## Runtime changes

The pallet has been added to

- `asset-hub-rococo`
- `asset-hub-westend`

The `NativeAndAssets` `fungibles` Union did not contain `PoolAssets`, so it has been renamed `NativeAndNonPoolAssets`.

A new `fungibles` Union `NativeAndAllAssets` was created to encompass all assets and the native token.

## TODO

- [x] Emulation tests
- [x] Fill in Freeze logic (blocked https://github.com/paritytech/polkadot-sdk/issues/3342) and re-run benchmarks

---------

Co-authored-by: command-bot <>
Co-authored-by: Oliver Tale-Yazdi <oliver.tale-yazdi@parity.io>
Co-authored-by: muharem <ismailov.m.h@gmail.com>
Co-authored-by: Guillaume Thiolliere <gui.thiolliere@gmail.com>

-

- Jan 15, 2025

-

-

Michael Müller authored

Closes https://github.com/paritytech/polkadot-sdk/issues/6891. cc @athei @xermicus @pgherveou

-

PG Herveou authored

Remove all pallet::events except for the `ContractEmitted` event that is emitted by contracts --------- Co-authored-by: command-bot <> Co-authored-by:Alexander Theißen <alex.theissen@me.com>

-

PG Herveou authored

Remove the `debug_buffer` feature --------- Co-authored-by: command-bot <> Co-authored-by:

Cyrill Leutwiler <cyrill@parity.io> Co-authored-by:

Alexander Theißen <alex.theissen@me.com>

-

Alexandru Vasile authored

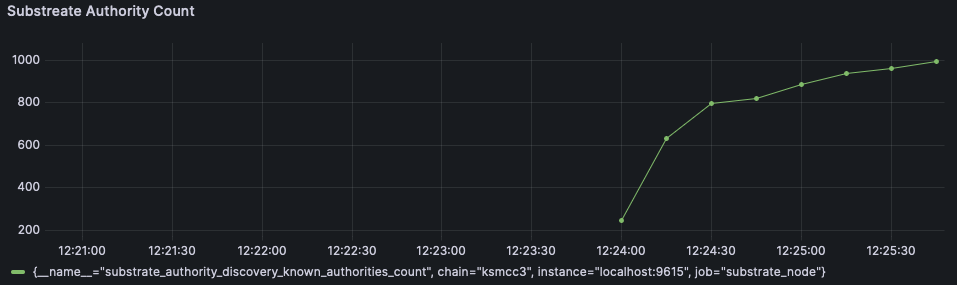

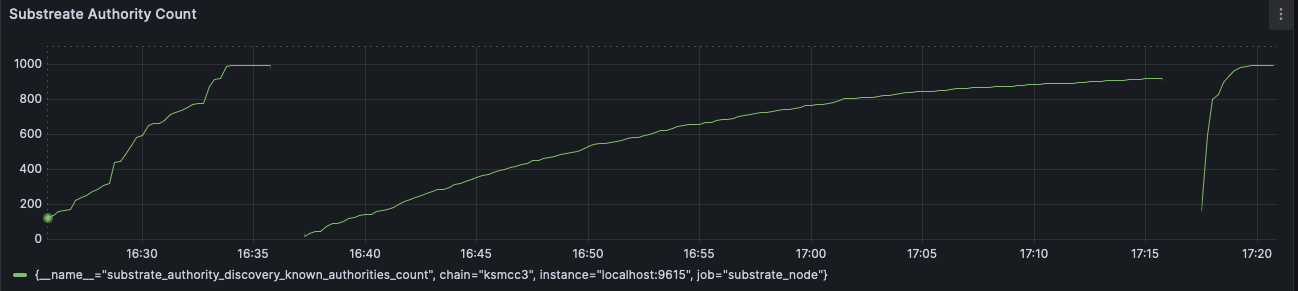

This PR provides the partial results of the `GetRecord` Kademlia query.

This significantly improves the discovery of authority records, from ~37 minutes to ~2 to 3 minutes. In contrast, libp2p discovers authority records in around ~10 minutes.

The authority discovery was slow because litep2p provided the records only after the Kademlia query was completed. A normal Kademlia query completes in around 40 seconds to a few minutes. In this PR, partial records are provided as soon as they are discovered from the network.

### Testing Done

Started a node in Kusama with `--validator` and the litep2p backend. The node discovered 996/1000 authority records in ~1 minute 45 seconds.

### Before & After

In this image, libp2p is on the left, litep2p without this PR is in the middle, and litep2p with this PR is on the right.

Closes: https://github.com/paritytech/polkadot-sdk/issues/7077

cc @paritytech/networking

---------

Signed-off-by: Alexandru Vasile <alexandru.vasile@parity.io>

-

PG Herveou authored

Bump asset-hub westend spec version --------- Co-authored-by: command-bot <>

-

Alexandre R. Baldé authored

Closes #6846.

---------

Signed-off-by: xermicus <cyrill@parity.io>
Co-authored-by: command-bot <>
Co-authored-by: Alexander Theißen <alex.theissen@me.com>
Co-authored-by: xermicus <cyrill@parity.io>

-

Michael Müller authored

Closes https://github.com/paritytech/polkadot-sdk/issues/6767. The return type of the host function `caller_is_root` was denoted as `u32` in `pallet_revive_uapi`. This PR fixes the return type to `bool`. As a drive-by, the PR re-exports `pallet_revive::exec::Origin` to extend what can be tested externally. --------- Co-authored-by:Cyrill Leutwiler <bigcyrill@hotmail.com>

-

Alexandru Vasile authored

This PR rejects inbound requests from banned peers (peers whose reputation is below the banned threshold). This mirrors the request-response implementation from the libp2p side. I don't expect this to get triggered too often, but we'll monitor this metric.

While at it, I have registered a new inbound failure metric to have visibility into this.

Discovered during the investigation of: https://github.com/paritytech/polkadot-sdk/issues/7076#issuecomment-2589613046

cc @paritytech/networking

---------

Signed-off-by: Alexandru Vasile <alexandru.vasile@parity.io>

-

Xavier Lau authored

Introduce `frame_system::Pallet::run_to_block`, `frame_system::Pallet::run_to_block_with`, and `frame_system::RunToBlockHooks` to establish a generic `run_to_block` mechanism for mock tests, minimizing redundant implementations across various pallets.

Closes #299.

---

Polkadot address: 156HGo9setPcU2qhFMVWLkcmtCEGySLwNqa3DaEiYSWtte4Y

---------

Signed-off-by: Xavier Lau <x@acg.box>
Co-authored-by: Bastian Köcher <git@kchr.de>
Co-authored-by: command-bot <>
Co-authored-by: Guillaume Thiolliere <guillaume.thiolliere@parity.io>
Co-authored-by: Guillaume Thiolliere <gui.thiolliere@gmail.com>

-

Sebastian Kunert authored

Fix error message in `DispatchInfo` where the post-dispatch and pre-dispatch weights were reversed.

---------

Co-authored-by: command-bot <>
Co-authored-by: Bastian Köcher <git@kchr.de>

-

Alexandru Gheorghe authored

Normally, approval-voting wouldn't receive duplicate assignments because approval-distribution makes sure of it. However, after a restart we might receive the same assignment again, and since approval-voting has already persisted it, we would end up inserting it twice in `ApprovalEntry.tranches.assignments` because that's an array.

Fix this by making sure duplicate assignments are a noop if the validator already had an assignment imported at the same tranche.

---------

Signed-off-by: Alexandru Gheorghe <alexandru.gheorghe@parity.io>
Co-authored-by: ordian <write@reusable.software>
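The "duplicate assignment is a noop" idea can be sketched as follows. The types here (`TrancheEntry`, `ValidatorIndex`, `Tick`, and the `import_assignment` method) are hypothetical stand-ins and do not reproduce the actual approval-voting data structures.

```rust
/// Hypothetical stand-ins for the approval-voting types.
pub type ValidatorIndex = u32;
pub type Tick = u64;

#[derive(Default)]
pub struct TrancheEntry {
    /// Assignments live in a vector, so nothing prevents duplicates by itself.
    pub assignments: Vec<(ValidatorIndex, Tick)>,
}

impl TrancheEntry {
    /// Import an assignment. Returns `false` and leaves the entry untouched
    /// when the validator already has an assignment in this tranche.
    pub fn import_assignment(&mut self, validator: ValidatorIndex, tick: Tick) -> bool {
        if self.assignments.iter().any(|(v, _)| *v == validator) {
            return false;
        }
        self.assignments.push((validator, tick));
        true
    }
}
```

Checking before pushing keeps the vector free of duplicates without changing its type, which is the smallest change that makes a re-delivered assignment harmless in this sketch.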

-

- Jan 14, 2025

-

-

Sebastian Kunert authored

Closes #3967

## Changes

We now use relay chain slots to measure velocity on chain. Previously we were storing the current parachain slot. Then, in `on_state_proof` of the `ConsensusHook`, we were checking how many blocks were authored in the current parachain slot. This works well when the parachain slot time and the relay chain slot time are the same. With elastic scaling, we can have parachain slot times lower than that of the relay chain. In these cases we want to measure velocity in relation to the relay chain. This PR adjusts that.

## Migration

This PR includes a migration. Storage item `SlotInfo` of pallet `aura-ext` is renamed to `RelaySlotInfo` to better reflect its new content. A migration has been added that just kills the old storage item. `RelaySlotInfo` will be `None` initially, but its value will be adjusted after one new relay chain slot arrives.

---------

Co-authored-by: command-bot <>
Co-authored-by: Bastian Köcher <git@kchr.de>
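Measuring velocity against relay chain slots can be sketched with a small counter. This is an illustration under assumptions, not the pallet code: the `(relay slot, authored count)` tuple shape, the `note_block` function, and the error string are all hypothetical.

```rust
/// Record a newly authored parachain block against the current relay chain slot.
///
/// `info` is the previously stored `(relay_slot, authored)` pair, or `None`
/// right after the migration. `velocity` is the maximum number of parachain
/// blocks allowed per relay chain slot. Any slot different from the stored
/// one is simply treated as a fresh slot in this sketch.
pub fn note_block(
    info: Option<(u64, u32)>,
    relay_slot: u64,
    velocity: u32,
) -> Result<(u64, u32), &'static str> {
    let authored = match info {
        // Same relay slot as before: one more block in this slot.
        Some((slot, authored)) if slot == relay_slot => authored + 1,
        // New relay slot (or no stored value yet): start counting again.
        _ => 1,
    };
    if authored > velocity {
        Err("too many blocks authored in this relay chain slot")
    } else {
        Ok((relay_slot, authored))
    }
}
```

Because the counter is keyed by the relay chain slot, several short parachain slots that fall inside one relay chain slot all count against the same budget.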

-

Bastian Köcher authored

-

Carlo Sala authored

Port #6459 changes to relays as well, which were probably forgotten in that PR. Thanks! --------- Co-authored-by:Francisco Aguirre <franciscoaguirreperez@gmail.com> Co-authored-by: command-bot <>

-

Sebastian Kunert authored

The umbrella crate quick-check was always failing whenever something was misformatted anywhere in the codebase. This led to an error indicating that a new crate was added, even when it was not. After this PR we only apply `cargo fmt` to the newly generated umbrella crate `polkadot-sdk`. This makes the check independent of the fmt job, which should check the entire codebase.

-

Alexandru Gheorghe authored

There is a problem on restart where nodes will not trigger their needed assignment if they were offline while the time of the assignment passed.

That happens because after restart we will hit this condition https://github.com/paritytech/polkadot-sdk/blob/4e805ca0/polkadot/node/core/approval-voting/src/lib.rs#L2495 and `considered` will be `tick_now`, which is already higher than the tick of our assignment.

The fix is to schedule a wakeup for untriggered assignments at restart and let the logic that processes a wakeup decide whether it needs to trigger the assignment or not.

One thing we need to be careful about here is not to schedule the wakeup immediately after restart. The node would still be behind on all the assignments it should have received, which might make it wrongly decide that it needs to trigger its assignment. So I added a `RESTART_WAKEUP_DELAY: Tick = 12`, which should be more than enough for the node to catch up.

---------

Signed-off-by: Alexandru Gheorghe <alexandru.gheorghe@parity.io>
Co-authored-by: ordian <write@reusable.software>
Co-authored-by: Andrei Eres <eresav@me.com>
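A minimal sketch of the delayed wakeup, assuming a flat list of untriggered assignments. Only the constant `RESTART_WAKEUP_DELAY` and its value come from the commit message; `Untriggered` and `wakeups_on_restart` are hypothetical names, and the real subsystem schedules wakeups through its own machinery.

```rust
pub type Tick = u64;

/// Value quoted in the commit message.
pub const RESTART_WAKEUP_DELAY: Tick = 12;

/// Hypothetical record of an assignment that was not triggered before shutdown.
pub struct Untriggered {
    pub candidate: u32,
    /// Tick at which the assignment was originally due (may be in the past).
    pub assignment_tick: Tick,
}

/// On restart, schedule one wakeup per untriggered assignment.
///
/// Every wakeup is placed `RESTART_WAKEUP_DELAY` ticks after `tick_now`, so
/// the node has time to catch up before it decides whether to trigger.
pub fn wakeups_on_restart(tick_now: Tick, pending: &[Untriggered]) -> Vec<(u32, Tick)> {
    pending
        .iter()
        .map(|a| (a.candidate, tick_now + RESTART_WAKEUP_DELAY))
        .collect()
}
```

The wakeup only defers the decision: whether the assignment is actually triggered is still left to the regular wakeup-processing logic.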

-

Alexandru Gheorghe authored

Recovering the POV can fail in situations where the node has just restarted and the DHT topology has not been fully discovered yet, so the current node can't connect to most of its peers. This is bad because gossiping the assignment only requires being connected to a few peers, so the assignment is sent, but we can't approve the candidate, and other nodes will see this as a no-show.

This becomes bad in the scenario where a lot of nodes restart at the same time, so you end up having a lot of no-shows in the network that are never covered. In that case it makes sense for nodes to retry approving the candidate at a later point in time, and to retry several times if the block containing the candidate wasn't approved.

## TODO

- [x] Add a subsystem test.

---------

Signed-off-by: Alexandru Gheorghe <alexandru.gheorghe@parity.io>

-

PG Herveou authored

Add an option to persist EVM transaction hashes to a SQL DB. This should make it possible to run a full archive ETH RPC node (assuming the Substrate node is also a full archive node).

Some queries, such as `eth_getTransactionByHash`, `eth_getBlockTransactionCountByHash`, and others, need to work with a transaction hash index, which is not stored in Substrate and needs to be stored by the eth-rpc proxy.

The refactoring breaks down the Client into a `BlockInfoProvider` and a `ReceiptProvider`:

- `BlockInfoProvider` does not need any persisted data, as we can fetch all block info from the source Substrate chain.
- `ReceiptProvider` comes in two flavors:
  - An in-memory cache implementation. This is the one we had so far.
  - A DB implementation. This one persists rows with the `block_hash`, the `transaction_index` and the `transaction_hash`, so that we can later fetch the block and extrinsic for that receipt and reconstruct the `ReceiptInfo` object.

This PR also adds a new binary, eth-indexer, that iterates over past and new blocks and writes the receipt hashes to the DB using the new `ReceiptProvider`.

---------

Co-authored-by: GitHub Action <action@github.com>
Co-authored-by: command-bot <>
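The provider split can be sketched as a trait with an in-memory flavor. Only the names `ReceiptProvider`, `block_hash`, `transaction_index` and `transaction_hash` appear in the commit message; the trait methods, `ReceiptRow`, `InMemoryReceipts`, and the synchronous signatures below are assumptions, and the real trait is likely richer.

```rust
use std::collections::HashMap;

/// Hypothetical 32-byte hash type.
pub type Hash = [u8; 32];

/// The fields the commit message says the DB flavor persists per receipt.
#[derive(Clone, Debug, PartialEq)]
pub struct ReceiptRow {
    pub block_hash: Hash,
    pub transaction_index: u32,
    pub transaction_hash: Hash,
}

/// Sketch of a provider interface (method names are assumptions).
pub trait ReceiptProvider {
    fn insert(&mut self, row: ReceiptRow);
    fn by_transaction_hash(&self, hash: &Hash) -> Option<ReceiptRow>;
}

/// In-memory flavor, keyed by transaction hash.
#[derive(Default)]
pub struct InMemoryReceipts {
    rows: HashMap<Hash, ReceiptRow>,
}

impl ReceiptProvider for InMemoryReceipts {
    fn insert(&mut self, row: ReceiptRow) {
        self.rows.insert(row.transaction_hash, row);
    }

    fn by_transaction_hash(&self, hash: &Hash) -> Option<ReceiptRow> {
        self.rows.get(hash).cloned()
    }
}
```

A DB-backed flavor would implement the same trait over a table with these three columns, so callers do not need to know which flavor they are talking to.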

-

Alexandru Vasile authored

This PR adds the `(litep2p)` suffix to the agent version (user agent) of the identify protocol.

The change is needed to gain visibility into network backends and determine exactly the number of validators that are running litep2p. Using tools like subp2p-explorer, we can determine if the validators are running litep2p nodes.

This reflects on the identify protocol:

```
info=Identify {
  protocol_version: Some("/substrate/1.0"),
  agent_version: Some("polkadot-parachain/v1.17.0-967989c5 (kusama-node-name-01) (litep2p)")
  ...
}
```

cc @paritytech/networking

---------

Signed-off-by: Alexandru Vasile <alexandru.vasile@parity.io>
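As an illustration of the string shape shown above, here is a small sketch. The `agent_version` function and its parameters are hypothetical; the real node assembles this string from its own configuration.

```rust
/// Build an agent version string in the shape shown in the identify output.
/// The `(litep2p)` suffix is appended only when that backend is in use.
pub fn agent_version(client: &str, node_name: &str, litep2p: bool) -> String {
    let base = format!("{client} ({node_name})");
    if litep2p {
        format!("{base} (litep2p)")
    } else {
        base
    }
}
```

A tool scanning the network could then classify a peer by checking whether its agent version ends with `(litep2p)`.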

-

Michal Kucharczyk authored

Higher-priority transactions can now replace lower-priority transactions even when the internal _tx_mem_pool_ is full.

**Notes for reviewers:**

- The _tx_mem_pool_ now maintains information about transaction priority. Although _tx_mem_pool_ itself is stateless, transaction priority is updated after submission to the view. An alternative approach could involve validating transactions at the `at` block, but this is computationally expensive. To avoid additional validation overhead, I opted to use the priority obtained from the runtime during submission to the view. This is the rationale behind introducing the `SubmitOutcome` struct, which synchronously communicates transaction priority from the view to the pool. This results in a very brief window during which the transaction priority remains unknown; those transactions are not taken into consideration while dropping takes place. In the future, if needed, we could update transaction priority using view revalidation results to keep this information fully up to date (as the priority of a transaction may change with chain-state evolution).
- When _tx_mem_pool_ becomes full (an event anticipated to be rare), transaction priority must be known to perform priority-based removal. In such cases, the most recent block known is used for validation. I think that speculative submission to the view and re-using the priority from this submission would be an unnecessary complication.
- Once the priority is determined, lower-priority transactions whose cumulative size meets or exceeds the size of the new transaction are collected to ensure the pool size limit is not exceeded.
- A transaction removed from _tx_mem_pool_ also needs to be removed from all the views with the appropriate event (which is done by `remove_transaction_subtree`). To ensure complete removal, the `PendingTxReplacement` struct was refactored into the more generic `PendingPreInsertTask` (introduced in #6405), which covers removal and submission of transactions in a view that may potentially be created in the background. This ensures that a removed transaction will not re-enter the newly created view.
- The `submit_local` implementation was also improved to properly handle priorities when the mempool is full. Some missing tests for this method were also added.

Closes: #5809

---------

Co-authored-by: command-bot <>
Co-authored-by: Iulian Barbu <14218860+iulianbarbu@users.noreply.github.com>
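The collection of lower-priority transactions described in the notes can be sketched like this. It is a simplified model under assumptions: `PoolTx` and `select_victims` are hypothetical, candidates are taken lowest priority first (the commit message does not state the order), and transactions with unknown priority are skipped as the notes describe.

```rust
/// Hypothetical pool entry; `priority` is `None` while it is still unknown.
pub struct PoolTx {
    pub id: u32,
    pub priority: Option<u64>,
    pub bytes: usize,
}

/// Pick transactions to drop so that a new transaction of `new_bytes` fits.
///
/// Only transactions with a known priority strictly lower than `new_priority`
/// are candidates. Returns `None` when not enough space can be freed, in
/// which case the new transaction would be rejected instead.
pub fn select_victims(pool: &[PoolTx], new_priority: u64, new_bytes: usize) -> Option<Vec<u32>> {
    let mut candidates: Vec<&PoolTx> = pool
        .iter()
        .filter(|tx| matches!(tx.priority, Some(p) if p < new_priority))
        .collect();
    // Lowest priority first (an assumption of this sketch).
    candidates.sort_by_key(|tx| tx.priority);

    let mut freed = 0usize;
    let mut victims = Vec::new();
    for tx in candidates {
        if freed >= new_bytes {
            break;
        }
        freed += tx.bytes;
        victims.push(tx.id);
    }
    if freed >= new_bytes {
        Some(victims)
    } else {
        None
    }
}
```

Collecting victims until their cumulative size meets or exceeds the size of the new transaction keeps the pool within its size limit after the replacement.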

-

Alin Dima authored

-

- Jan 13, 2025

-

-

polka.dom authored

As per #3326, removes pallet::getter macro usage from pallet-grandpa. The syntax `StorageItem::<T, I>::get()` should be used instead. cc @muraca --------- Co-authored-by:Bastian Köcher <git@kchr.de>

-

Michal Kucharczyk authored

# Description

This PR modifies the hard-coded size of the extrinsics cache within [`PoolRotator`](https://github.com/paritytech/polkadot-sdk/blob/cdf107de/substrate/client/transaction-pool/src/graph/rotator.rs#L36-L45) to be in line with pool limits.

The problem was that, due to the small hard-coded size (compared to the number of txs in a single block): https://github.com/paritytech/polkadot-sdk/blob/cdf107de/substrate/client/transaction-pool/src/graph/rotator.rs#L34 an excessive number of unnecessary verifications were performed in `prune_tags`: https://github.com/paritytech/polkadot-sdk/blob/cdf107de/substrate/client/transaction-pool/src/graph/pool.rs#L369-L370

This resulted in quite long `prune_tags` execution times (which was OK for 6s blocks, but becomes noticeable for 2s blocks):

```
Pruning at HashAndNumber { number: 83, ... }. Resubmitting transactions: 6142, reverification took: 237.818955ms
Pruning at HashAndNumber { number: 84, ... }. Resubmitting transactions: 5985, reverification took: 222.118218ms
Pruning at HashAndNumber { number: 85, ... }. Resubmitting transactions: 5981, reverification took: 215.546847ms
```

The fix reduces the overhead:

```
Pruning at HashAndNumber { number: 92, ... }. Resubmitting transactions: 6325, reverification took: 14.728354ms
Pruning at HashAndNumber { number: 93, ... }. Resubmitting transactions: 7030, reverification took: 23.973607ms
Pruning at HashAndNumber { number: 94, ... }. Resubmitting transactions: 4465, reverification took: 9.532472ms
```

## Review Notes

I decided to leave the hard-coded `EXPECTED_SIZE` for the legacy transaction pool. Removing verification of transactions during re-submission may negatively impact the behavior of the legacy (single-state) pool. As in the long term we probably want to deprecate the old pool, I did not invest time in assessing the impact of the rotator change on the behavior of the legacy pool.

---------

Co-authored-by: command-bot <>
Co-authored-by: Iulian Barbu <14218860+iulianbarbu@users.noreply.github.com>

-

Alexandru Gheorghe authored

Reference hardware requirements have been bumped to at least 8 cores, so we can now allocate 50% of that capacity to PVF execution.

---------

Signed-off-by: Alexandru Gheorghe <alexandru.gheorghe@parity.io>

-

PG Herveou authored

Update the current approach to attach the `ref_time`, `pov` and `deposit` parameters to an Ethereum transaction.

Previously, we passed these 3 parameters along with the signed payload, and checked that the fees resulting from `gas x gas_price` matched the actual fees paid by the user for the extrinsic.

This approach unfortunately can be attacked: a malicious actor could force such a transaction to fail by injecting low values for some of these extra parameters, as they are not part of the signed payload.

The new approach encodes these 3 extra parameters in the lower digits of the transaction gas, approximating the log2 of the actual values to encode each component on 2 digits.

---------

Co-authored-by: GitHub Action <action@github.com>
Co-authored-by: command-bot <>
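To make the idea of "log2 on 2 digits in the lower digits of the gas" concrete, here is one possible encoding. It is purely illustrative and is not the scheme implemented in pallet_revive: the field order, rounding the gas down to a multiple of `SCALE`, the ceiling rounding of log2, and all function names are assumptions. It also assumes the gas value is well below `u64::MAX`.

```rust
/// Six low decimal digits are reserved: three fields of two digits each.
const SCALE: u64 = 1_000_000;

/// Ceiling of log2, so that `2^code >= value`. Values 0 and 1 map to 0.
fn log2_ceil(v: u64) -> u64 {
    if v <= 1 {
        0
    } else {
        64 - (v - 1).leading_zeros() as u64
    }
}

/// Place the three approximated parameters in the low digits of `gas`.
/// Each code is at most 64, so it always fits in two decimal digits.
pub fn encode_gas(gas: u64, ref_time: u64, pov: u64, deposit: u64) -> u64 {
    let fields = log2_ceil(ref_time) * 10_000 + log2_ceil(pov) * 100 + log2_ceil(deposit);
    // Clear the low digits of the gas, then store the fields there.
    (gas / SCALE) * SCALE + fields
}

/// Recover upper bounds `(ref_time, pov, deposit)` as powers of two.
pub fn decode_gas(encoded: u64) -> (u128, u128, u128) {
    let fields = encoded % SCALE;
    let (r, p, d) = (fields / 10_000, (fields / 100) % 100, fields % 100);
    (1u128 << r, 1u128 << p, 1u128 << d)
}
```

Since the codes live inside the gas value itself, they are covered by the transaction signature, which is the property the commit message is after; the price is that each parameter is only known up to the next power of two.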

-

Branislav Kontur authored

## Description

This PR deprecates `UnpaidLocalExporter` in favor of the new `LocalExporter`. First, the name is misleading, as it can be used in both paid and unpaid scenarios. Second, it contains a hard-coded channel 0, whereas `LocalExporter` uses the same algorithm as `xcm-exporter`.

## Future Improvements

Remove the `channel` argument and slightly modify the `ExportXcm::validate` signature as part of [this issue](https://github.com/orgs/paritytech/projects/145/views/8?pane=issue&itemId=84899273).

---------

Co-authored-by: command-bot <>

-

Bastian Köcher authored

Closes: https://github.com/paritytech/polkadot-sdk/issues/7033

-